
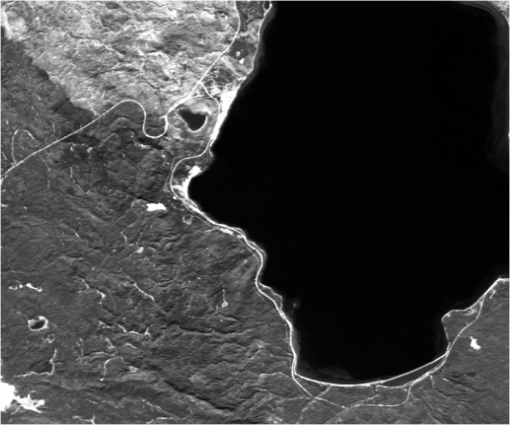

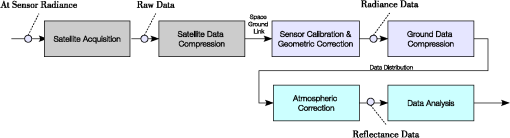

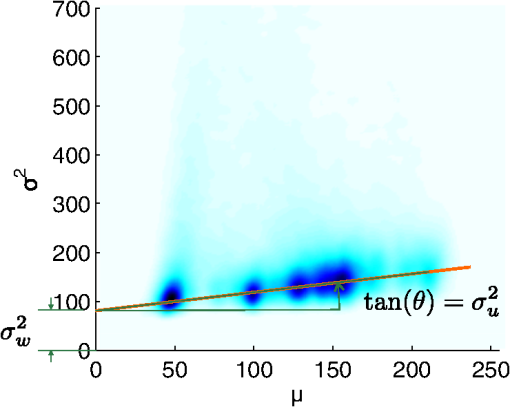

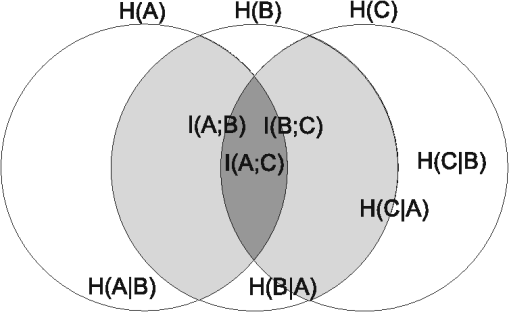

1. Introduction

Technological advances in imaging spectrometry have led to the acquisition of data that exhibit extremely high spatial, spectral, and radiometric resolution. A major challenge of satellite hyperspectral imaging is data compression, both for dissemination to users and, especially, for transmission from the orbiting platform to a ground station. Data compression generally decorrelates the correlated information source before entropy coding is carried out. To meet the quality requirements of hyperspectral image analysis, differential pulse code modulation (DPCM) is usually employed for lossless/near-lossless compression, i.e., the decompressed data have a user-defined maximum absolute error, which is zero in the lossless case and nonzero otherwise [1]. DPCM basically consists of a prediction followed by entropy coding of the quantized differences between original and predicted values. A unity quantization step size allows reversible compression as a limit case. Several variants of DPCM prediction schemes exist, the most sophisticated being adaptive [2–8].

When the hyperspectral imaging instrument is placed on a satellite, data compression is crucial [9–11]. Lossless compression fully preserves the information of the data but achieves only a moderate reduction in transmission rate [12,13]. The downlink bottleneck to ground stations may hamper the coverage capabilities of modern satellite instruments. If strictly lossless techniques are not used, a certain amount of the information in the data will be lost. However, such statistical information may be mostly due to random fluctuations of the instrumental noise.
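As a rough illustration of the near-lossless principle described above, the following Python sketch implements a toy DPCM loop. It is not any of the coders cited in this paper: the previous-reconstructed-sample predictor, the function names, and the parameter `max_abs_error` are assumptions made for the example, and entropy coding of the quantization indices is omitted. The step size `2 * max_abs_error + 1` is the usual choice for integer data, so that a zero error bound gives a unity step and hence reversible compression.

```python
def dpcm_encode(samples, max_abs_error):
    """Toy near-lossless DPCM encoder for integer samples.

    Predictor (an assumption for this sketch): the previously
    reconstructed sample, starting from 0.
    """
    step = 2 * max_abs_error + 1
    indices = []
    prediction = 0
    for x in samples:
        residual = x - prediction
        # Sign-symmetric rounding of the residual to the nearest multiple of step.
        if residual >= 0:
            q = (residual + max_abs_error) // step
        else:
            q = -((-residual + max_abs_error) // step)
        indices.append(q)
        # Track the decoder's reconstruction so that errors do not accumulate.
        prediction += q * step
    return indices


def dpcm_decode(indices, max_abs_error):
    """Rebuild the samples from the quantization indices."""
    step = 2 * max_abs_error + 1
    reconstructed = []
    prediction = 0
    for q in indices:
        prediction += q * step
        reconstructed.append(prediction)
    return reconstructed
```

Because the encoder predicts from reconstructed rather than original values, the reconstruction error of every sample stays within `max_abs_error`, and with `max_abs_error = 0` the decoder returns the input exactly.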
The rationale that compression-induced distortion may be less harmful in those bands in which the noise is higher constitutes the virtually lossless paradigm [14], which is accepted by several authors [15] but has never been demonstrated. This paper addresses the problem of quantifying the trade-off between compression ratio (CR) and the decrement in spectral information content. A rate-distortion model is used to quantify the compression of the noisy version of an ideal information source. The rationale of such a model is that lossy/near-lossless compression may be regarded as an additional source of noise. The problem of lossy compression thus becomes a transcoding problem (a cascade of two coding stages interleaved by a decoding stage), which can be formulated as follows: “Given a source that has been compressed with loss and decompressed, and hence has already lost part of its information, what is the relationship between the compression ratio, or bit rate (BR), of the subsequent compression stage and the amount of information of the original source that is available from the decompressed bit stream?”

Fig. 1 Flow chart of the satellite hyperspectral processing chain, comprising satellite segment, ground segment, and user segment. Data are compressed to pass from one segment to another and decompressed before the subsequent processing.

The onboard instrument introduces noise on the noise-free spectral radiance that is acquired, and irreversible compression introduces a further noise that determines the loss of information between uncompressed and compressed data. Starting from these observations, this paper gives evidence, both theoretically and through simulations and compression of raw hyperspectral data, that the variances of these two noises add to each other because the two noise patterns are independent. The loss of information thereby increases, but in a way that is predictable and hence controllable.
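The additivity of the two noise variances can be checked numerically with a small Monte Carlo sketch. Everything in it is an assumption made for illustration (a uniformly distributed 12-bit noise-free sample, Gaussian acquisition noise rounded to integers, and plain requantization with step `2 * max_abs_error + 1` standing in for a near-lossless coder); it is not the experiment reported in this paper. For integer data, the requantization error is roughly uniform on the integers from `-max_abs_error` to `max_abs_error`, whose variance is `(step**2 - 1) / 12`.

```python
import random
import statistics


def requantize(value, max_abs_error):
    """Round an integer to the nearest multiple of step = 2 * max_abs_error + 1."""
    step = 2 * max_abs_error + 1
    return step * ((value + max_abs_error) // step)


def simulate_error_variances(num_samples=50_000, noise_sigma=4.0,
                             max_abs_error=2, seed=0):
    """Return the variances of acquisition, compression, and total errors."""
    rng = random.Random(seed)
    acquisition, compression, total = [], [], []
    for _ in range(num_samples):
        clean = rng.randrange(0, 4096)                      # hypothetical noise-free sample
        noisy = clean + round(rng.gauss(0.0, noise_sigma))  # integer noisy acquisition
        decoded = requantize(noisy, max_abs_error)          # stand-in for near-lossless coding
        acquisition.append(noisy - clean)
        compression.append(decoded - noisy)
        total.append(decoded - clean)
    return (statistics.pvariance(acquisition),
            statistics.pvariance(compression),
            statistics.pvariance(total))


var_acquisition, var_compression, var_total = simulate_error_variances()
print(var_acquisition + var_compression, var_total)
```

Under these assumptions, the two printed values should agree closely, because the compression error is nearly uncorrelated with the acquisition noise.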
We believe that this topic is of interest to anyone who designs a lossy compressor and needs to quantify the compression for any type of data. The theoretical model is first validated on simulated noisy data. Experiments carried out on airborne visible infrared imaging spectrometer (AVIRIS) 2006 raw data show that the proposed approach is practically feasible and yields results that are in reasonable agreement with those obtained on simulated data.

In earlier papers by the authors [16,17], compression was not addressed, only noisy acquisition. The problem was approached as an information-theoretic assessment of the imaging system [18], which means estimating the mutual information between two dependent sources, one unobservable and the other observable, from which the noise is preliminarily estimated. The former is the input of the imaging system, and the latter is the output. In Refs. 16 and 17, the focus was on estimating the amount of information the source would exhibit if it were acquired without noise. While the earlier studies motivate progress in designing instruments with less and less noise, the present study aims at providing theoretically sound, objective criteria to set the quantization step sizes of near-lossless DPCM coders for satellite hyperspectral imagers.

2. Hyperspectral Remote Sensing from Satellite

Since the pioneering Hyperion mission [19] in 2001, which opened new possibilities of global Earth coverage, interest in hyperspectral imaging from satellites has grown, motivating the upcoming EnMAP [20,21] and PRISMA [22] missions. The hyperspectral processing chain represented in Fig. 1 consists of three segments: satellite segment, ground segment, and user segment. The on-board instrument produces data in raw format. Raw data are digital counts from the analog-to-digital converter (ADC), not yet diminished by the dark signal that has been averaged in time.
Raw data are compressed, with or without loss, and downloaded to the ground station(s), where the data are decompressed, converted to radiance values, and corrected for instrumental effects (e.g., striping of push-broom sensors). The calibrated data are geometrically corrected for orbital effects, georeferenced, and possibly orthorectified. All geometric operations subsequent to calibration have little impact on the quality of data products. Eventually, data products are stored in archives, generally in highly redundant formats, e.g., a double-precision floating-point spectral radiance value per pixel. When the data are distributed to users, they are usually converted to radiance density values, which are better accommodated in fixed-point formats (e.g., 16 bits per component, including a sign bit). This conversion may lead to a loss of information. The radiance density unit of the fixed-point format is chosen so that any physically attainable value can be mapped with a 16-bit word length (including the sign bit, because radiances may take small negative values after removal of the time-averaged dark current from raw data). A finer step would be 10 times smaller and would require 19 to 20 bits instead of 16. Fixed-point radiance data are compressed, possibly with loss, and delivered to users. After decompression, in a typical user application, solar irradiance and atmospheric transmittance are corrected by users to produce reflectance spectra that may be matched to library spectra in order to recognize and classify materials.
At this step, it may be useful to investigate the effects of a lossy compression of radiance data in terms of changes in spectral angle with respect to reflectance spectra obtained from uncompressed radiance data [23,24]. A more general approach, pursued in this work, is to investigate the loss in spectral information due to an irreversible compression [25]. Such a study is complicated by the fact that the available data are a noisy realization of an unavailable ideal spectral information source that is assumed to be noise-free [26].

3. Signal-Dependent Noise Modeling for Imaging Spectrometers

A generalized signal-dependent noise model has been proposed to deal with several different acquisition systems. Many types of noise can be described by the following parametric model [27]:

$$g(m,n) = f(m,n) + f(m,n)^{\gamma}\, u(m,n) + w(m,n), \tag{1}$$

where $(m,n)$ is the pixel location, $g(m,n)$ is the observed noisy image, $f(m,n)$ is the noise-free image, modeled as a nonstationary correlated random process, $u(m,n)$ is a stationary, zero-mean uncorrelated random process independent of $f(m,n)$ with variance $\sigma_u^2$, and $w(m,n)$ is electronics noise (zero-mean, white, and Gaussian, with variance $\sigma_w^2$). For a great variety of images, this model has been proven to hold for values of the parameter $\gamma$ such that $|\gamma| \le 1$. The additive term $f^{\gamma} u$ is the generalized signal-dependent noise. Since $f$ is generally nonstationary, the noise will be nonstationary as well. The term $w$ is the signal-independent noise component and is assumed to be Gaussian distributed.

Equation (1) applies also to images produced by optoelectronic devices, such as charge-coupled device cameras, multispectral scanners, and imaging spectrometers. In that case, the exponent $\gamma$ is equal to 0.5. The term $\sqrt{f}\,u$ stems from the Poisson-distributed number of photons captured by each pixel and is therefore denoted as photon noise [28]. Let us rewrite Eq. (1) with $\gamma = 0.5$:

$$g(m,n) = f(m,n) + \sqrt{f(m,n)}\, u(m,n) + w(m,n). \tag{2}$$

Equation (2) represents the electrical signal resulting from the photon conversion and from the dark current. The mean dark current has been preliminarily subtracted to yield $g(m,n)$. However, its statistical fluctuations around the mean constitute most of the zero-mean electronic noise $w(m,n)$. The term $\sqrt{f}\,u$ is the photon noise, whose mean is zero and whose variance is proportional to $E[f(m,n)]$. It represents a statistical fluctuation of the photon signal around its noise-free value, $f(m,n)$, due to the granularity of photons originating electric charge. If the variance of Eq. (2) is calculated on homogeneous pixels, in which $\sigma_f^2(m,n) = 0$ by definition, thanks to the independence of $f$, $u$, and $w$ and the fact that both $u$ and $w$ have null mean and are stationary, we can write it as

$$\sigma_g^2(m,n) = \sigma_u^2\, \mu_f(m,n) + \sigma_w^2, \tag{3}$$

in which $\mu_f(m,n) = E[f(m,n)]$ is the nonstationary mean of $f$. The term $\mu_f$ equals $\mu_g$, from Eq. (2). Equation (3) represents a straight line in the plane $(\mu_f, \sigma_g^2)$, whose slope and intercept are equal to $\sigma_u^2$ and $\sigma_w^2$, respectively. The interpretation of Eq. (3) is that on statistically homogeneous pixels, the theoretical nonstationary ensemble statistics (mean and variance) of the observed noisy image $g(m,n)$ lie upon a straight line. In practice, theoretical expectations are approximated with local averages. Hence, homogeneous pixels in the scene appear in the variance-versus-mean plane to be clustered along the line $y = m x + y_0$, in which $m = \sigma_u^2$ and $y_0 = \sigma_w^2$. Figure 2 shows an example of scatterplot.

Fig. 2 Calculation of slope and intercept of mixed photon/electronic noise model, with regression line superimposed.

The problem of measuring the two parameters of the optoelectronics noise model [Eq. (2)] has been stated to be equivalent to fitting a regression line to the scatterplot containing homogeneous pixels, or at least the most homogeneous pixels in the scene. The problem now is how to detect the (most) statistically homogeneous pixels in an imaged scene [29,30]. In practical applications, the average signal-to-noise ratio (SNR) is used:

$$\mathrm{SNR\,(dB)} = 10 \log_{10}\left[\frac{\overline{f^2}}{\sigma_u^2\, \bar{f} + \sigma_w^2}\right], \tag{4}$$

where $\bar{f}$ and $\overline{f^2}$ are obtained by averaging the observed noisy image and its square, respectively, the noise being zero-mean.
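The regression-line estimate of the two noise parameters can be sketched in a few lines of Python. The sketch is only an illustration under stated assumptions: the homogeneous patches are synthetic (constant signal levels chosen arbitrarily), both noise terms are drawn as Gaussian, and the function names and default values are hypothetical. It does not address the detection of homogeneous pixels in a real scene, which is the hard part of the problem.

```python
import math
import random


def simulate_patch(level, n, sigma_u2, sigma_w2, rng):
    """Draw n samples of g = f + sqrt(f) * u + w on a patch with constant f = level."""
    su, sw = math.sqrt(sigma_u2), math.sqrt(sigma_w2)
    return [level + math.sqrt(level) * rng.gauss(0.0, su) + rng.gauss(0.0, sw)
            for _ in range(n)]


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, var


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def estimate_noise_parameters(sigma_u2=0.5, sigma_w2=100.0, seed=0):
    """Fit the variance-versus-mean line; slope ~ sigma_u2, intercept ~ sigma_w2."""
    rng = random.Random(seed)
    points = [mean_and_variance(simulate_patch(level, 5000, sigma_u2, sigma_w2, rng))
              for level in range(20, 401, 20)]
    means, variances = zip(*points)
    return fit_line(means, variances)


slope, intercept = estimate_noise_parameters()
print(slope, intercept)
```

With these synthetic patches, the fitted slope and intercept should land near the values of the photon and electronic noise variances used to generate the data.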
Either the photon-noise parameter $\sigma_u^2$ or the electronic-noise variance $\sigma_w^2$ may be set equal to zero in Eq. (4), whenever the imagery is known to be dominated by electronic or photon noise, respectively.

4. Information-Theoretic Problem Statement

Let $A$ denote the unavailable source ideally obtained by means of an acquisition with a noiseless device. Quantization of the data produced by the on-board instrument is set on the basis of instrument noise and downlink constraints. For satellite imaging spectrometers, it is usually 12 to 14 bits per pixel per band (bpppb). Let $B$ denote the noisy acquired source, e.g., quantized with 12 bpppb. The unavailable noise-free source $A$ is assumed to be quantized with 12 bpppb as well. If the source $B$ is lossy compressed, a new source $C$ is obtained by decompressing the compressed bit stream at the ground station. If $C$ is converted to radiance values, band scaling gains and destriping coefficients are applied for each wavelength and the outcome is quantized to radiance density units. If the radiance source is denoted by $D$, then radiance values are the result of reversible deterministic operations (calibration and destriping), which produce real-valued data, followed by quantization to an integer number of radiance units. If such an operation yields a one-to-one mapping between raw data and radiance values, then the radiance source $D$ coincides with $C$. A simplified model of the rate-distortion chain, which does not include the loss possibly introduced by conversion of decompressed raw data to radiance units, is displayed in Fig. 3.

Fig. 3 Rate-distortion representation for information-theoretic assessment of lossy compression of the noisy version of an information source.

The problem can be stated in terms of rate-distortion theory [31] and constitutes what is denoted as information-theoretic assessment [16–18]. When the source $A$ is corrupted by the instrumental noise to yield the observed source, $B$, its entropy $H(A)$ is partly lost. $H(A|B)$ is the part of $H(A)$ that gets lost after noise addition, if $A$ is no longer available and only the noisy source $B$ is known. $H(A|B)$ is usually referred to as equivocation. The mutual information between $A$ and $B$, $I(A;B)$, which is the part of the entropy of $A$ that is contained in $B$ [graphically, the intersection of the spheres $H(A)$ and $H(B)$], is calculated as

$$I(A;B) = H(A) - H(A|B) = H(B) - H(B|A) = H(A) + H(B) - H(A,B),$$

in which the joint entropy $H(A,B)$ is graphically denoted by the union of the spheres $H(A)$ and $H(B)$. The addition of noise causes the overall entropy, $H(A,B)$, to increase because the entropy of the noise, $H(B|A)$, also denoted as irrelevance, is added to that of $A$, i.e., to $H(A)$. The term irrelevance indicates that $H(B|A)$ is not relevant to $A$, which is the source of spectral information, but is a measure of the information due to the uncertainty of the random noise introduced by the instrument.

The term $H(A)$ is generally unknown, but the entropy of $B$, $H(B)$, may be estimated by compressing the available observed source (raw data) without any loss, e.g., by means of an advanced DPCM compressor. Once the noise of $B$ has been modeled and measured, the irrelevance $H(B|A)$ can be estimated as the entropy of the noise realization, which is unrelated to the spectral information. $I(A;B)$ can then be calculated by simply decrementing $H(B)$ by the irrelevance $H(B|A)$. Finding $I(A;B)$ is the object of the information-theoretic assessment of an acquisition system [17], because $I(A;B)$ represents the mean amount of useful “spectral” information coming from $A$ that is contained in $B$ and, if compression is strictly lossless, also in $C$, because in that case $C = B$.

Now, let us consider the lossy case, i.e., $C \neq B$. The lossy compression BR achieved by an optimized coder will approximate the mutual information between $B$ and $C$, $I(B;C)$. It is noteworthy that $I(B;C) \le H(B)$, the equality holding for reversible compression only. The term $H(C|A)$ may be estimated from the noise model and measurements of $B$ and from the model of the noise introduced by the irreversible compression process, assumed to be Gaussian for lossy compression or uniformly distributed for near-lossless compression. Eventually, $H(A)$, whenever of interest, can be estimated by following the procedure described in Refs. 16 and 17.
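The entropy bookkeeping of this section can be verified on a toy discrete example. The Python sketch below is purely illustrative: the four-level source, the three-level noise distribution, and the requantization with maximum absolute error 1 that stands in for a near-lossless coder are all assumptions, and the symbols A, B, and C simply follow the notation used above. It computes equivocation, irrelevance, and mutual information from joint distributions.

```python
from collections import defaultdict
from math import log2


def entropy(dist):
    """Shannon entropy, in bits, of a dict mapping outcomes to probabilities."""
    return -sum(p * log2(p) for p in dist.values() if p > 0)


def marginal(joint, axis):
    """Marginal distribution of one component of a joint dict keyed by pairs."""
    out = defaultdict(float)
    for pair, p in joint.items():
        out[pair[axis]] += p
    return dict(out)


def mutual_information(joint):
    """I(X;Y) = H(X) + H(Y) - H(X,Y)."""
    return entropy(marginal(joint, 0)) + entropy(marginal(joint, 1)) - entropy(joint)


def requantize(value, max_abs_error):
    """Round an integer to the nearest multiple of 2 * max_abs_error + 1."""
    step = 2 * max_abs_error + 1
    return step * ((value + max_abs_error) // step)


# Hypothetical toy sources: A uniform on {0, 1, 2, 3}, noise independent of A.
p_a = {a: 0.25 for a in range(4)}
p_noise = {-1: 0.25, 0: 0.5, 1: 0.25}

joint_ab = {}
joint_ac = defaultdict(float)
for a, pa in p_a.items():
    for n, pn in p_noise.items():
        b = a + n               # noisy acquisition
        c = requantize(b, 1)    # decompressed value after near-lossless coding
        joint_ab[(a, b)] = pa * pn
        joint_ac[(a, c)] += pa * pn
joint_ac = dict(joint_ac)

h_a = entropy(p_a)
h_b = entropy(marginal(joint_ab, 1))
h_c = entropy(marginal(joint_ac, 1))
irrelevance = entropy(joint_ab) - h_a     # H(B|A), the entropy of the noise
equivocation = entropy(joint_ab) - h_b    # H(A|B)
i_ab = mutual_information(joint_ab)       # useful information in B
i_ac = mutual_information(joint_ac)       # useful information left in C
print(h_a, h_b, h_c, irrelevance, equivocation, i_ab, i_ac)
```

In this toy setting C is a deterministic function of B, so I(B;C) equals H(C), which cannot exceed H(B), and I(A;C) cannot exceed I(A;B) by the data-processing inequality.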
5. Simulation Results

5.1. Synthetic Data

The proposed model has first been assessed on simulated noisy data. A test remote sensing image has been derived from a very high-resolution aerial photograph [pixel spacing 62.5 cm, 8 bits per pixel (bpp)] by averaging $16 \times 16$ blocks of pixels. The final pixel size is approximately 10 m. The averaging process abates the acquisition noise by over 20 dB, in such a way that the resulting image (8 bpp) may be assumed to be noise-free. Spatially uncorrelated additive white Gaussian noise with two different standard deviations has been superimposed on the test image to yield two noisy versions, having SNR [Eq. (4)] equal to 29.41 and 21.45 dB, respectively. Noisy pixel values are rounded to integers and clipped between 0 and 255. The original test image and one of its noisy versions are portrayed in Fig. 4.

Fig. 4 Test images for information-theoretic assessment: (a) Noise-free original. (b) Noisy version with additive white Gaussian noise.

The optimized two-dimensional compression method is the fuzzy matching pursuits (FMP) encoder [32], which employs a parametrically tunable entropy coding [33] and approaches the ultimate compression more and more closely as the computational cost increases. The BR of the noise-free image, by definition equal to the entropy of the noise-free source, is almost 5 bpp. As appears from Fig. 4, the test image is highly textured and thus hard to compress. For each of the two noisy versions, the following amounts have been calculated, varying with the allowed maximum absolute distortion (MAD), $\varepsilon$:

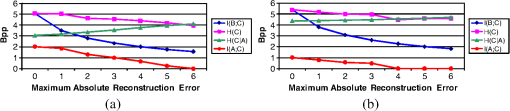

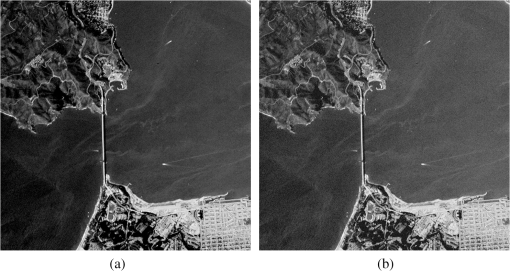

All the information parameters defining the model are plotted in Fig. 5 for the two noisy image versions. In the lossless case ($\varepsilon = 0$), the presence of the noise makes the original information (almost 5 bpp) produce a mutual information slightly higher than 2 bpp for the less noisy version and of 1 bpp for the noisier one. In substance, the blue plot, i.e., the mutual information $I(B;C)$ between the noisy source and its decompressed version, represents what we pay in terms of compression BR, and the red plot, i.e., the mutual information $I(A;C)$ between the noise-free source and the decompressed one, represents what we get in terms of useful (spectral) information. It is noteworthy that $I(A;C)$ vanishes beyond a certain MAD, which differs between the less noisy version of Fig. 5(a) and the noisier version of Fig. 5(b). It seems that the compression BR is first allocated to compress the noise with MAD equal to $\varepsilon$. Only if such a BR is high enough is the leftover part devoted to the spectral information.

5.2. AVIRIS 2006 Data

A set of calibrated and raw images acquired in 2006 by AVIRIS has been provided by NASA/Jet Propulsion Laboratory to the Consultative Committee for Space Data Systems and is available for compression experiments. This dataset consists of five 16-bit calibrated and geometrically corrected images and the corresponding 16-bit raw images, not yet diminished by the dark signal, acquired over Yellowstone, Wyoming. Each image is composed of 224 bands, and each scene (the scene numbers are 0, 3, 10, 11, 18) has 512 lines [7]. All data have been clipped to 14 bits (a negligible percentage of outliers has been affected by clipping) and remapped to 12 bits to simulate a space-borne instrument, e.g., Hyperion. Figure 6 portrays a sample band (#20, wavelength between green and red). The operational steps for implementing the proposed rate-distortion model for quality assessment of on-board near-lossless compression are the following:

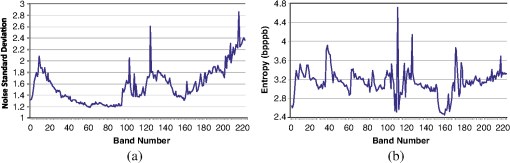

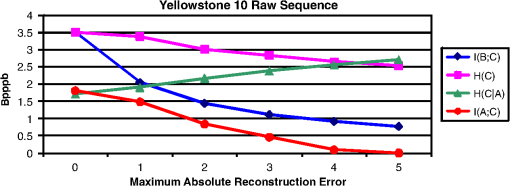

The noise standard deviation has been measured for Yellowstone 10 (clipped above 14 bpppb and remapped to 12 bpppb) by using the algorithm described in Ref. 35 and is reported in Fig. 7(a). Apart from marked increments at the edges of the spectral interval, due to the loss of sensitivity of the instrument, the noise standard deviation is approximately constant. However, only the electronic, or dark, component of the noise is reliably estimated because of the presence of the dark signal, which is usually removed on ground. The photon component is undetermined because bright areas do not produce appreciable clusters in the variance-to-mean scatterplot. The photon noise variance is expected to be lower than the electronic one for whisk-broom instruments, such as AVIRIS, but not for push-broom ones, at least in visible-near infrared (V-NIR) wavelengths [29,30].

Fig. 7 AVIRIS 2006 Yellowstone scene 10: (a) Measured noise standard deviation (thermal/electronic component only). (b) Bit rate, in bits per pixel per band (bpppb), produced by the S-RLP codec.

Figure 7(b) reports lossless compression BRs of AVIRIS 2006 Yellowstone raw scene 10 (clipped and remapped, as above). The compression algorithm is the spectral-relaxation labeled predictor (S-RLP) [6], which is an MAD-bounded, i.e., near-lossless, algorithm providing the ultimate compression attainable for hyperspectral data. In a possible on-board implementation, MMSE Adaptive-DPCM (MA-DPCM) [36], a simplified version of S-RLP, would be preferable. However, S-RLP is used only to measure the entropy of the various sources and is not required for a practical implementation. Let $\varepsilon$ denote the maximum absolute reconstruction error, also known as MAD or peak error.
The maximum quantization step size yielding a MAD equal to $\varepsilon$ is

$$\Delta = 2\varepsilon + 1,$$

and the corresponding quadratic distortion is

$$D = \frac{\Delta^2 - 1}{12},$$

in which the term $\Delta^2 - 1$ replaces $\Delta^2$ whenever real values that have been previously quantized to integers are requantized with an integer step size $\Delta$.

Figure 8 reports information parameters for the whole sequence of Yellowstone 10. Absorption bands, in which the model of noisy information source does not hold because the spectral signal is practically zero and only noise and dark signals are present, are removed before averaging the information measures over the spectral bands (all bands are compressed).

Fig. 8 Estimated entropy and mutual information parameters for AVIRIS 2006 Yellowstone 10, whole sequence.

When $\Delta = 1$, $C = B$; hence $I(B;C) = H(B)$ and $I(A;C) = I(A;B)$. The bit rate $I(B;C)$ is monotonically decreasing with the mean quadratic distortion, or better with the quantization step size $\Delta$, approximately as $-\log_2 \Delta$. The entropy of the decompressed source, $H(C)$, depends on how much the data have been smoothed by the lossy compression of $B$, or in other words on how the compression algorithm works. The term $H(C|A)$ represents the entropy of the sum of the two events, both independent of $A$, that are acquisition noise and compression errors, which have been verified to be independent of one another for DPCM compression in the tests on simulated data. Given the BRs produced by the optimized encoder S-RLP, CRs can be calculated relative to the uncompressed word length of 12 bits. The CR-versus-MAD characteristic of the actual on-board DPCM coder is likely to yield a slightly lower CR.

The analysis reported suffers from one main shortcoming. Photon noise has been disregarded since its estimation is difficult, also because of the presence of the dark signal, which is not considered in the model [Eq. (2)]. Actually, the SNR of AVIRIS 2006 data is extremely high (over 50 dB, on average), which means that the noise is so weak that it can be reliably estimated only on the lake.
Since the average level of the lake is significantly lower than the average level of the whole band, a non-negligible fraction of the photon noise term is neglected. The overall noise variance is therefore expected to be underestimated. In this case, the mutual information between the noise-free source and the decompressed data would be overestimated.

In a practical application scenario, suppose that onboard lossless compression cannot be afforded and lossy compression has to be used. For a sample hyperspectral raw image, the method provides the amount of useful spectral information, $I(A;C)$, that is, information pertaining to the noise-free source and not the acquired noisy source, varying with the amount of distortion introduced by compression on the acquired noisy source. On the other hand, distortion can be related to CR, or coding BR, through the rate-distortion (RD) characteristic of the coder used in the actual implementation, i.e., on-board. So, the coder can be designed to preserve a specified amount of information rather than to yield a specified amount of distortion, as is usually the case. The proposed method can be applied to existing systems, provided that they use DPCM with quantization step sizes that can be uploaded from ground and allow lossless compression to be performed as a limit case. The procedure is summarized by the following steps:

6. Concluding Remarks

This study has proposed a rate-distortion model to quantify the loss of useful spectral information after near-lossless compression, together with its experimental setup, such that the information-theoretic model may become a computational model. The key ingredient of this recipe is an advanced hyperspectral image coder providing the ultimate compression regardless of time and computation constraints (such a coder is not necessary to perform on-board compression, but only for simulations). Noise estimation is crucial because of the extremely low noisiness of hyperspectral data, which are also richly textured. Noise filtering is also required to extract noise patterns. The drawback is that denoising filters generally work properly only if they are given reasonably exact values of the parameters of the noise model they have been designed for. Preliminary results on the AVIRIS 2006 Yellowstone 10 sequence are encouraging, although absorption/anomalous bands are likely to contradict the main assumptions underlying the proposed model and should not be considered. The main conclusion that can be drawn is that the noisier the data, the lower the CR that can be achieved in order to retain a prefixed amount of spectral information. This happens because the noise-free data can tolerate up to a certain amount of cumulative noise, i.e., instrument noise plus compression-induced noise. Thus, if instrument noise is higher, compression noise shall be lower, and vice versa.

Acknowledgments

The authors are grateful to the Consultative Committee for Space Data Systems for providing the AVIRIS raw data (http://compression.jpl.nasa.gov/hyperspectral).

References

1. B. Aiazzi, L. Alparone, and S. Baronti, “Near-lossless compression of 3-D optical data,” IEEE Trans. Geosci. Remote Sens. 39(11), 2547–2557 (2001). http://dx.doi.org/10.1109/36.964993

2. B. Aiazzi et al., “Lossless compression of multi/hyper-spectral imagery based on a 3-D fuzzy prediction,” IEEE Trans. Geosci. Remote Sens. 37(5), 2287–2294 (1999). http://dx.doi.org/10.1109/36.789625

3. J. Mielikainen and P. Toivanen, “Clustered DPCM for the lossless compression of hyperspectral images,” IEEE Trans. Geosci. Remote Sens. 41(12), 2943–2946 (2003). http://dx.doi.org/10.1109/TGRS.2003.820885

4. E. Magli, G. Olmo, and E. Quacchio, “Optimized onboard lossless and near-lossless compression of hyperspectral data using CALIC,” IEEE Geosci. Remote Sens. Lett. 1(1), 21–25 (2004). http://dx.doi.org/10.1109/LGRS.2003.822312

5. F. Rizzo et al., “Low-complexity lossless compression of hyperspectral imagery via linear prediction,” IEEE Signal Process. Lett. 12(2), 138–141 (2005). http://dx.doi.org/10.1109/LSP.2004.840907

6. B. Aiazzi et al., “Crisp and fuzzy adaptive spectral predictions for lossless and near-lossless compression of hyperspectral imagery,” IEEE Geosci. Remote Sens. Lett. 4(6), 532–536 (2007). http://dx.doi.org/10.1109/LGRS.2007.900695

7. A. B. Kiely and M. A. Klimesh, “Exploiting calibration-induced artifacts in lossless compression of hyperspectral imagery,” IEEE Trans. Geosci. Remote Sens. 47(8), 2672–2678 (2009). http://dx.doi.org/10.1109/TGRS.2009.2015291

8. B. Aiazzi, L. Alparone, and S. Baronti, “Lossless compression of hyperspectral images using multiband lookup tables,” IEEE Signal Process. Lett. 16(6), 481–484 (2009). http://dx.doi.org/10.1109/LSP.2009.2016834

9. B. Aiazzi et al., “Advanced methods for onboard lossless compression of hyperspectral data,” Proc. SPIE 5208, 117–128 (2003). http://dx.doi.org/10.1117/12.507328

10. A. Abrardo et al., “Low-complexity and error-resilient hyperspectral image compression based on distributed source coding,” Proc. SPIE 7109, 71090V (2008). http://dx.doi.org/10.1117/12.799990

11. A. Abrardo et al., “Low-complexity approaches for lossless and near-lossless hyperspectral image compression,” in Satellite Data Compression, pp. 47–65, Springer, New York (2011).

12. B. Aiazzi et al., “Near-lossless compression of hyperspectral imagery through crisp/fuzzy adaptive DPCM,” in Hyperspectral Data Compression, pp. 147–177, Springer, Berlin, Heidelberg, New York (2006).

13. B. Aiazzi, L. Alparone, and S. Baronti, “On-board lossless hyperspectral data compression: LUT-based or classified spectral prediction?,” in ESA Workshop Proceedings Publication WPP-285, On-Board Payload Data Compression Workshop (2008).

14. C. Lastri et al., “Virtually lossless compression of astrophysical images,” EURASIP J. Appl. Signal Process. 2005(15), 2521–2535 (2005). http://dx.doi.org/10.1155/ASP.2005.2521

15. S.-E. Qian et al., “Near lossless data compression onboard a hyperspectral satellite,” IEEE Trans. Aerosp. Electron. Syst. 42(3), 851–865 (2006). http://dx.doi.org/10.1109/TAES.2006.248183

16. B. Aiazzi et al., “Information-theoretic assessment of sampled hyperspectral imagers,” IEEE Trans. Geosci. Remote Sens. 39(7), 1447–1458 (2001). http://dx.doi.org/10.1109/36.934076

17. B. Aiazzi et al., “Information-theoretic assessment of multidimensional signals,” Signal Process. 85(5), 903–916 (2005). http://dx.doi.org/10.1016/j.sigpro.2004.11.025

18. F. O. Huck et al., “Information-theoretic assessment of sampled imaging systems,” Opt. Eng. 38(5), 742–762 (1999). http://dx.doi.org/10.1117/1.602264

19. J. S. Pearlman et al., “Hyperion, a space-based imaging spectrometer,” IEEE Trans. Geosci. Remote Sens. 41(6), 1160–1173 (2003). http://dx.doi.org/10.1109/TGRS.2003.815018

20. L. Guanter, K. Segl, and H. Kaufmann, “Simulation of optical remote-sensing scenes with application to the EnMAP hyperspectral mission,” IEEE Trans. Geosci. Remote Sens. 47(7), 2340–2351 (2009). http://dx.doi.org/10.1109/TGRS.2008.2011616

21. K. Segl et al., “Simulation of spatial sensor characteristics in the context of the EnMAP hyperspectral mission,” IEEE Trans. Geosci. Remote Sens. 48(7), 3046–3054 (2010). http://dx.doi.org/10.1109/TGRS.2010.2042455

22. D. Labate et al., “The PRISMA payload optomechanical design, a high performance instrument for a new hyperspectral mission,” Acta Astronautica 65(9–10), 1429–1436 (2009). http://dx.doi.org/10.1016/j.actaastro.2009.03.077

23. L. Santurri et al., “Interband distortion allocation in lossy hyperspectral data compression,” in ESA Workshop Proceedings Publication WPP-285, On-Board Payload Data Compression Workshop (2008).

24. B. Aiazzi et al., “Spectral distortion in lossy compression of hyperspectral data,” J. Electr. Comput. Eng. 2012, 1–8 (2012). http://dx.doi.org/10.1155/2012/850637

25. B. Aiazzi et al., “Information-theoretic assessment of lossy and near-lossless on-board hyperspectral data compression,” in Proc. ESA OBPDC 2012, 3rd Int. Workshop on On-Board Payload Data Compression (2012).

26. B. Aiazzi et al., “Estimating noise and information of multispectral imagery,” Opt. Eng. 41(3), 656–668 (2002). http://dx.doi.org/10.1117/1.1447547

27. A. K. Jain, Fundamentals of Digital Image Processing, Prentice Hall, Englewood Cliffs, NJ (1989).

28. J. L. Starck, F. Murtagh, and A. Bijaoui, Image Processing and Data Analysis: The Multiscale Approach, Cambridge University Press, New York (1998).

29. L. Alparone et al., “Quality assessment of data products from a new generation airborne imaging spectrometer,” in Proc. IEEE Int. Geoscience and Remote Sensing Symp., pp. 422–425 (2009).

30. L. Alparone et al., “Signal-dependent noise modelling and estimation of new-generation imaging spectrometers,” in First Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing, pp. 1–4 (2009).

31. T. M. Cover and J. A. Thomas, Elements of Information Theory, 2nd ed., Wiley, New York (2006).

32. B. Aiazzi, L. Alparone, and S. Baronti, “Fuzzy logic-based matching pursuits for lossless predictive coding of still images,” IEEE Trans. Fuzzy Systems 10(4), 473–483 (2002). http://dx.doi.org/10.1109/TFUZZ.2002.800691

33. B. Aiazzi, L. Alparone, and S. Baronti, “Context modeling for near-lossless image coding,” IEEE Signal Process. Lett. 9(3), 77–80 (2002). http://dx.doi.org/10.1109/97.995822

34. F. Argenti, G. Torricelli, and L. Alparone, “MMSE filtering of generalised signal-dependent noise in spatial and shift-invariant wavelet domains,” Signal Process. 86(8), 2056–2066 (2006). http://dx.doi.org/10.1016/j.sigpro.2005.10.014

35. B. Aiazzi et al., “Noise modelling and estimation of hyperspectral data from airborne imaging spectrometers,” Ann. Geophys. 41(1), 1–9 (2006).

36. B. Aiazzi, L. Alparone, and S. Baronti, “On-board DPCM compression of hyperspectral data,” in Proc. ESA OBPDC 2010, Second Int. Workshop on On-Board Payload Data Compression (2010).
