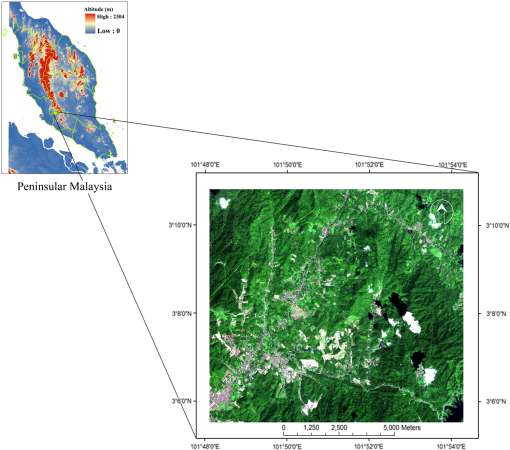

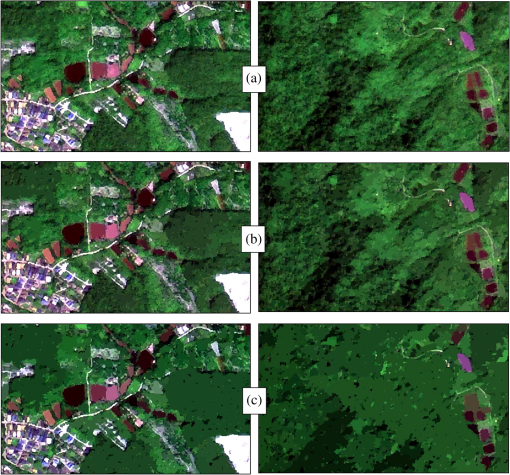

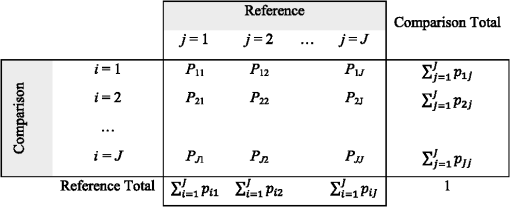

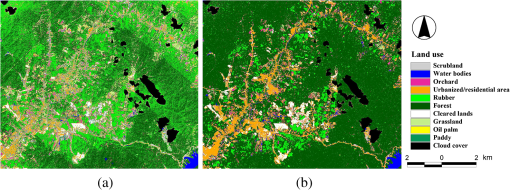

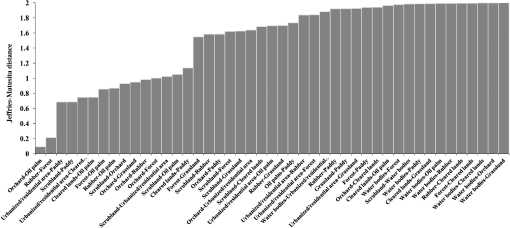

1. Introduction

Decision making in each country or region needs adequate information on many complex interrelated aspects of its activities. Land use is one such aspect, and knowledge about land use and land cover has become increasingly important.1 Classification of land use and land cover based on remotely sensed imagery can be partitioned into two general image analysis methods. The first approach is based on pixels and has long been employed for classifying remotely sensed imagery. The second approach is based on objects and has become increasingly common over the last decade.2,3

The conventional pixel-based classification techniques, such as the maximum likelihood classifier (MLC), have been extensively used for the extraction of thematic information since the 1980s.4,5 MLC, the most established approach of image classification,6,7 assumes a Gaussian (normal) distribution of multivariate data. In this method, pixels are allocated to the most likely output class, or allocated to a class based on a posterior probability of membership, in a feature space whose dimensionality equals the number of bands in the original image.8 This requires users to carefully determine the classification scheme, so that each class follows a Gaussian distribution, and MLC ideally has to be performed at the spectral class level.7 Some examples of MLC application for land use and land cover classification include a comparison of MLC and an artificial neural network in the USA using Landsat Thematic Mapper (TM) data,9 the same comparison in Turkey using Landsat TM data,10 an evaluation of a fuzzy classifier and MLC using Landsat Enhanced Thematic Mapper Plus (ETM+) data in Iran,11 and a comparison between object-oriented classification and MLC using Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) data in China.12 In terms of classification results, MLC is more suitable for remote-sensing imagery with medium and low spatial resolution, but it cannot exploit the full advantages of ground geometric structure
and texture information contained in high-spatial-resolution imagery.12

The object-oriented classification method relies on the spectral characteristics of the ground and makes further use of geometric and structural information.12,13 In this method, an object is a region of interest with spatial, spectral (brightness and color), and/or textural characteristics that define the region.14 Several studies have compared the object-based classification method with pixel-based techniques. For example, Yan et al.13 compared MLC with a k-nearest neighbor (k-NN) object-based analysis using ASTER imagery. They indicated that the overall accuracy of the object-based k-NN classification considerably outperformed the pixel-based MLC classification in Wuda, China (83.25% and 46.48%, respectively). A comparison between MLC and the k-NN object-based classification method was performed using a decision tree approach based on high-spatial-resolution digital airborne imagery.15 This study in northern California showed that k-NN object-based classification with one nearest neighbor (NN) gave a higher performance than MLC by 17%. Another comparison in Gettysburg, Pennsylvania, between MLC and a k-NN object-based classifier was carried out by Platt and Rapoza16 using multispectral IKONOS imagery. They revealed that, using expert knowledge, the object-based k-NN classifier had the best overall accuracy of 78%. The application of an object-based classifier using pan-sharpened Quickbird imagery in agricultural environments led to a higher accuracy (93.69%) than MLC application (89.6%) in southern Spain.17 Myint et al.18 used Quickbird imagery to classify urban land cover in Phoenix, Arizona. They compared MLC with a k-NN object-based classifier and concluded that the object-based classifier, with an overall accuracy of 90.4%, outperformed MLC, with an overall accuracy of 67.6%.
In another study, application of an object-based classifier on Système Pour l’Observation de la Terre (SPOT)-5 Panchromatic (PAN) imagery demonstrated more feasibility in the study site (Beijing Olympic Games Cottage) than conventional pixel-based approaches.5

The use of the kappa index of agreement19 along with “proportion correct” has become customary in remote-sensing literature for the purpose of accuracy assessment. Pontius20 and Pontius and Millones21 exposed some of the conceptual problems with the standard kappa and proposed a suite of variations on kappa to remedy its flaws. Typically, the kappa statistic compares accuracy with a random baseline. According to Pontius and Millones,21 however, randomness is not a logical option for mapping. In addition, several kappa indices suffer from basic theoretical errors. Therefore, the standard kappa and its variants are more often than not complicated to compute, difficult to understand, and unhelpful to interpret.22,21 As such, in this study, two components of disagreement between classified and ground-truthed maps, in terms of the quantity and spatial allocation of the categories as suggested by Pontius and Millones,21 were employed. This work was aimed at evaluating the capability of MLC and the k-NN object-based classifier for land-use classification of the Langat basin as captured in SPOT-5 imagery.

2. Materials and Methods

2.1. Study Area

The Langat basin is located at the southern part of Klang Valley, which is the most urbanized river basin in Malaysia. In recent decades, the Langat basin has undergone rapid urbanization, industrialization, and agricultural development.23 The Langat basin is also a main source of drinking water for the surrounding areas, is a source of hydropower, and plays an important role in flood mitigation. Over the past four decades, the Langat basin has served 50% of the Selangor State population. Its average annual rainfall is 2400 mm.
The basin has a rich diversity of landforms, surface features, and land cover.24,25 Due to the national importance of the Langat basin, a pilot region (upstream of the Langat river) with a total area of was selected for land-use classification using SPOT-5 imagery (Fig. 1).

2.2. Data Set

A system/map-corrected and pan-sharpened SPOT-5 image of the upstream area of the Langat basin was acquired on September 20, 2006. SPOT-5 offers a resolution of 2.5 m in PAN mode and 10 to 20 m in multispectral mode. The multispectral mode comprises four bands. Corresponding wavelengths of each band are B1 (0.50 to 0.59 μm), B2 (0.61 to 0.68 μm), B3 (0.78 to 0.89 μm), and B4 (1.58 to 1.75 μm).26,27 The pan-sharpening procedure28 combines the system/map-corrected multispectral image with the PAN image to produce a high-resolution color image. The dark subtraction technique29 was applied for atmospheric scattering correction on the entire scene. Cloud cover was masked out during the classification process. Due to the higher spectral separability of signatures in the 1-3-4 band combination, these layers were selected for further processing. The 2006 land-use map, obtained from the Department of Agriculture, Malaysia, was used as a reference for the definition of land-use classes and preparation of ground-truth maps. The study site is divided into 10 types of land use/cover, which are (1) scrubland, (2) water bodies, (3) orchard, (4) urbanized/residential area, (5) rubber, (6) forest, (7) cleared lands, (8) grassland, (9) oil palm, and (10) paddy.
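
The dark subtraction step mentioned above can be illustrated with a minimal sketch. The source does not state how the dark value was chosen, so the sketch assumes the simplest variant, in which each band's minimum value is treated as the dark object and subtracted from every pixel of that band. The function name `dark_subtract` and the toy pixel values are hypothetical and are not taken from the study.

```python
from typing import List

Band = List[List[float]]  # one band stored as rows of pixel values


def dark_subtract(bands: List[Band]) -> List[Band]:
    """Subtract each band's minimum (the assumed dark-object value) from that band."""
    corrected = []
    for band in bands:
        # Assumption: the darkest pixel in the band represents pure scattering.
        dark_value = min(min(row) for row in band)
        corrected.append([[pixel - dark_value for pixel in row] for row in band])
    return corrected


# Tiny synthetic 2x2 image with two bands (made-up values for illustration).
toy_image = [
    [[12.0, 15.0], [20.0, 12.0]],
    [[30.0, 31.0], [45.0, 38.0]],
]
corrected = dark_subtract(toy_image)
print(corrected)
```

After correction, the darkest pixel of every band is zero, and the relative differences between pixels within a band are unchanged. Cloud masking, which the study performed separately, is not handled here.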
2.3. Maximum Likelihood Classification

MLC uses the following discriminant function, which is maximized for the most likely class14,30,8:

$$g_i(x) = \ln p(\omega_i) - \tfrac{1}{2}\ln\lvert\Sigma_i\rvert - \tfrac{1}{2}(x - m_i)^{T}\,\Sigma_i^{-1}\,(x - m_i), \tag{1}$$

where $i$ is the class, $x$ is the $n$-dimensional data (where $n$ is the number of bands), $p(\omega_i)$ is the probability with which class $\omega_i$ occurs in the image and is assumed the same for all classes, $\lvert\Sigma_i\rvert$ is the determinant of the covariance matrix of the data in class $\omega_i$, $\Sigma_i^{-1}$ is the inverse of that matrix, $T$ denotes a vector transpose, and $m_i$ is the mean vector of class $\omega_i$.

Jeffries–Matusita distance8 was applied to compute spectral separability between training site pairs with different land uses. This measure ranges from 0 to 2.0, and the pairs with the distance infer low separability.14

2.4. Image Segmentation

Segmentation, a fundamental first step in object-based image analysis,3 is the process of partitioning an image into segments by grouping neighboring pixels with similar feature values such as brightness, texture, and color. These segments ideally correspond to real-world objects.14 Environment for Visualizing Images (ENVI) EX employs an edge-based segmentation algorithm that is very fast and requires only one input parameter [scale level (SL)].
By suppressing weak edges to different levels, the algorithm can yield multiscale segmentation results from finer to coarser segmentation.14 The selection of an appropriate value for the SL is considered the most important stage in object-based image analysis.3 SL is a measure of the greatest heterogeneity change when two objects are merged, and it is used as a threshold to terminate the segmentation procedure.31,5 This value controls the relative size of the image objects, which has a direct impact on the classification accuracy of the final map.3,18 Generally, choosing a high SL causes fewer segments to be defined and choosing a low SL causes more segments to be defined.14 In this study, based on previous experiences and literature recommendations,32,5,15 three levels of scale factor, i.e., 10, 30, and 50, were used in image segmentation (Fig. 2).

2.5. k-Nearest Neighbor Classification

The k-NN classifier considers the Euclidean distance in $n$-dimensional space of the target to the elements in the training data objects, where $n$ is defined by the number of object attributes (i.e., spatial, spectral, or textural properties of a vector object) used during classification.14,33 The k-NN is generally more robust than a traditional nearest-neighbor classifier, since the $k$ nearest distances are used as a majority vote to determine which class the target belongs to.34,14,33 The k-NN is also much less sensitive to outliers and noise in the dataset, and generally produces a more accurate classification outcome when compared with traditional nearest-neighbor methods.14 The parameter $k$ is the number of neighbors considered during classification. The ideal choice for $k$ depends on the selected dataset and the training data. Larger $k$ values tend to reduce the effect of noise and outliers, but they may cause inaccurate classification.14,33 In this article, $k$ values of 3, 5, and 7 were examined in each SL.
As such, the following nine combinations of SL and NN were investigated: SL10-NN3, SL10-NN5, SL10-NN7, SL30-NN3, SL30-NN5, SL30-NN7, SL50-NN3, SL50-NN5, and SL50-NN7.

2.6. Accuracy Assessment

A ground-truth map was prepared based on the observed data (2006 land-use map) and a field survey in about 10% of the total area. Disagreement parameters determine the disagreement between simulated and observed maps.22,21,35,36 Quantification error [quantity disagreement (QD)] happens when the quantity of cells of a category in the simulated map is different from the quantity of cells of the same category in the reference map. Location error [allocation disagreement (AD)] occurs when the location of a class in the simulated map is different from the location of that class in the reference map.21

2.6.1. Disagreement Components

Table 1 Format of estimated population matrix (adapted from Ref. 21).

In reference to Table 1, $J$ refers to the number of categories and the number of strata in a typical stratified sampling design. Each category in the comparison map is indexed by $i$, which ranges from 1 to $J$. The number of pixels in each stratum is denoted by $N_i$. Each observation is recorded based on its category in the comparison map ($i$) and the reference map ($j$). The number of these observations is summed as the entry $n_{ij}$ in row $i$ and column $j$ of the contingency matrix. The proportion of the study area ($p_{ij}$), i.e., category $i$ in the simulated map and category $j$ in the observed map, is estimated by the following equation22,21:

$$p_{ij} = \left(\frac{n_{ij}}{\sum_{j=1}^{J} n_{ij}}\right)\left(\frac{N_i}{\sum_{i=1}^{J} N_i}\right). \tag{2}$$

QD ($q_g$) for an arbitrary category $g$ is calculated as follows:

$$q_g = \left\lvert \sum_{i=1}^{J} p_{ig} - \sum_{j=1}^{J} p_{gj} \right\rvert. \tag{3}$$

Overall QD ($Q$), which incorporates all $J$ categories, is calculated as follows:

$$Q = \frac{\sum_{g=1}^{J} q_g}{2}. \tag{4}$$

Calculation of AD ($a_g$) for an arbitrary category $g$ is shown in Eq. (5):

$$a_g = 2\min\left[\left(\sum_{i=1}^{J} p_{ig}\right) - p_{gg},\ \left(\sum_{j=1}^{J} p_{gj}\right) - p_{gg}\right]. \tag{5}$$

The first argument within the minimum function is the omission of category $g$, while the second argument is the commission of category $g$.
Overall AD ($A$) is calculated as follows:

$$A = \frac{\sum_{g=1}^{J} a_g}{2}. \tag{6}$$

Proportion of agreement ($C$) is calculated as follows:

$$C = \sum_{j=1}^{J} p_{jj}. \tag{7}$$

Total disagreement ($D$), the sum of overall quantity disagreement and overall allocation disagreement, is computed as follows:

$$D = 1 - C = Q + A. \tag{8}$$

3. Results and Discussion

Figure 3 illustrates the maps classified using MLC and SL30-NN5 (object-based classifier). Table 2 gives the disagreement components, calculated for each land-use category and the total landscape, based on MLC and object-based classification. QD and AD in the total landscape using MLC were 11.66% and 22.38%, respectively. In comparison with the object-based image classifiers, MLC resulted in the lowest QD. Nevertheless, due to its highest AD, MLC resulted in a higher total disagreement in the total landscape. The ratio of QD to areal proportion (AP) and of AD to AP for each land-use category gives a better inference about the contribution of each land acreage unit toward error production. From Table 2, paddy, oil palm, and grassland yielded the highest QD/AP using MLC, which indicates the lowest accuracy in terms of quantity of classified pixels. Scrubland, orchard, and oil palm yielded the highest AD/AP, which indicates the lowest accuracy in terms of location of the classified pixels. Among paired land-use categories, orchard/oil palm showed the lowest spectral separability, with a Jeffries–Matusita distance of 0.1. As such, it would be challenging to discriminate between orchard and oil palm stands using MLC. The spectral separability between scrubland and paddy was also comparatively low, i.e., 0.7. Paired land-use categories with low spectral separability can be expected to demonstrate higher QDs and ADs.

Table 2 Accuracy assessments of different land-use categories derived using maximum likelihood classifier (MLC) and object-based classification.
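
As a rough illustration of how quantity and allocation disagreement values such as those reported in Table 2 can be derived, the sketch below computes the overall components of Sec. 2.6.1 from a matrix of area proportions, following the definitions of Pontius and Millones.21 It assumes the proportions have already been estimated, with rows indexing the classified (comparison) map and columns indexing the reference map. The function name `disagreement_components` and the three-category matrix `p_example` are hypothetical and do not come from the study data.

```python
from typing import List, Tuple


def disagreement_components(p: List[List[float]]) -> Tuple[float, float, float]:
    """Return overall (QD, AD, total disagreement) from a proportion matrix.

    p[i][j] is the proportion of the study area that is category i in the
    comparison map and category j in the reference map; entries sum to 1.
    """
    n_cat = len(p)
    # Row totals: proportion of each category in the comparison map.
    row_sums = [sum(p[g]) for g in range(n_cat)]
    # Column totals: proportion of each category in the reference map.
    col_sums = [sum(p[i][g] for i in range(n_cat)) for g in range(n_cat)]

    # Category-level quantity disagreement: mismatch between the two totals.
    q = [abs(col_sums[g] - row_sums[g]) for g in range(n_cat)]
    # Category-level allocation disagreement: twice the smaller of
    # omission (column total minus diagonal) and commission (row total minus diagonal).
    a = [
        2 * min(col_sums[g] - p[g][g], row_sums[g] - p[g][g])
        for g in range(n_cat)
    ]

    overall_q = sum(q) / 2
    overall_a = sum(a) / 2
    return overall_q, overall_a, overall_q + overall_a


# Made-up three-category example (proportions sum to 1).
p_example = [
    [0.30, 0.05, 0.05],
    [0.10, 0.20, 0.00],
    [0.05, 0.05, 0.20],
]
qd, ad, total = disagreement_components(p_example)
agreement = sum(p_example[g][g] for g in range(len(p_example)))
print(f"QD={qd:.2f}, AD={ad:.2f}, total={total:.2f}, agreement={agreement:.2f}")
```

In this toy case the total disagreement equals one minus the proportion of agreement, which is a useful sanity check when applying the same calculation to a real cross-tabulation.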

Among the object-based image classifiers, SL30-NN5 showed the highest accuracy, with a QD of 15% and an AD of 6.33% in the total landscape (Table 2). Using SL30-NN5, oil palm, paddy, and scrubland yielded the highest QD/AP, i.e., 0.8%, 0.77%, and 0.72%, respectively. Orchard and grassland, with an AD/AP of 0.76% and 0.44%, respectively, yielded the highest allocation error. With SL30-NN5, spatial and/or spectral similarity between objects on the image made it difficult to discriminate accurately between rubber and forest, orchard and oil palm, and paddy and scrubland (Table 3). These results are supported by the Jeffries–Matusita distance values (Fig. 4). As indicated in Table 2, the AD of MLC (22.38%) was about 250% higher than that of SL30-NN5 (6.33%); that is, SL30-NN5 cut allocation error to less than one-third of the MLC value. However, the QD of MLC (11.66%) was about 22% lower than that of SL30-NN5 (15%).

Table 3 Spatial, spectral, and textural properties used in object-based image classification (extracted from the SL30-NN5-classified image).

Notes: Rect_Fit: a shape measure that indicates how well the shape is described by a rectangle; Avgband_x: average value of pixels comprising the region in band x; Stdband_x: standard deviation value of pixels comprising the region in band x; Tx_Range: average data range of pixels comprising the region inside the kernel; BandRatio: (B4−B3)/(B4+B3+eps).

Results suggest that object-based classification, in comparison with pixel-based classification, offered a more realistic and accurate land-use map. This finding is consistent with previous reports by Wang et al.,5 Yan et al.,13 Chen et al.,37 Gao et al.,38 and Myint et al.18 Despite the higher capability of the object-oriented approach in image classification, differences in execution time between pixel- and object-based image analysis still remain an issue, especially for large areas.3 Future development of more quantitative methods for selecting optimal image segmentation parameters, especially the SL, as demonstrated by Costa et al.39 and Drăgut et al.,40 will hopefully reduce the time required for object-oriented classification.3 This work demonstrated the utility of disagreement components in validating land-use classification approaches, which has been confirmed by Memarian et al.22 and Pontius and Millones.21 Based on the results obtained in this study and previous investigations on object-based image classification reported by Yu et al.,15 Platt and Rapoza,16 and Duro et al.,3 the following refinements are recommended for future work in obtaining a more precise land-use map:

4. Conclusion

In comparison with object-based image classification, the MLC resulted in a higher total disagreement in the total landscape. Image classification employing the MLC yielded a high ratio of QD to AP in land-use categories such as paddy, oil palm, and grassland and consequently low accuracy in terms of quantity of classified pixels. Meanwhile, categories such as scrubland, orchard, and oil palm, which showed a high ratio of AD to AP, registered low accuracy in terms of location of classified pixels. These results were supported by the low separation distance between paired classes. The object-based image classifier with an SL of 30 and a k value of 5 (SL30-NN5) showed the highest classification accuracy. Using SL30-NN5, oil palm, paddy, and scrubland yielded high QD/AP values, while orchard and grassland showed the highest allocation error. Nevertheless, with SL30-NN5, spatial and/or spectral similarity caused difficulty in discriminating between rubber and forest, orchard and oil palm, and paddy and scrubland. The AD of MLC was about 250% higher than that of SL30-NN5, whereas the QD of MLC was about 22% lower than that of SL30-NN5. This work has demonstrated higher performance and utility of object-based classification over the traditional pixel-based classification in a tropical landscape, i.e., Malaysia’s Langat basin.

Acknowledgments

The authors gratefully acknowledge Universiti Putra Malaysia for procuring land-use maps and satellite imagery and Mr. Hamdan Md Ali (ICT Unit, Faculty of Agriculture, Universiti Putra Malaysia) for hardware and software assistance.

References

J. R. Anderson et al., “A land use and land cover classification system for use with remote sensor data,” (2012) http://landcover.usgs.gov/pdf/anderson.pdf (October 2012).

T. Blaschke, “Object based image analysis for remote sensing,” ISPRS J. Photogramm. Rem. Sens., 65 (1), 2–16 (2010). http://dx.doi.org/10.1016/j.isprsjprs.2009.06.004

D. C. Duro, S. E. Franklin, and M. G. Dube, “A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery,” Rem. Sens. Environ., 118, 259–272 (2012). http://dx.doi.org/10.1016/j.rse.2011.11.020

A. Singh, “Change detection in the tropical forest environment of northeastern India using Landsat,” in Remote Sensing and Land Management, M. J. Eden and J. T. Parry, Eds., 237–253, John Wiley & Sons, London (1986).

Z. Wang et al., “Object-oriented classification and application in land use classification using SPOT-5 PAN imagery,” in Geosci. Rem. Sens. Symp., 3158–3160 (2004).

J. Jensen, Introductory Digital Image Processing: A Remote Sensing Perspective, 3rd ed., Prentice Hall, Upper Saddle River, NJ (2005).

B. W. Szuster, Q. Chen, and M. Borger, “A comparison of classification techniques to support land cover and land use analysis in tropical coastal zones,” Appl. Geogr., 31 (2), 525–532 (2011).

J. A. Richards and X. Jia, Remote Sensing Digital Image Analysis, 4th ed., Springer-Verlag, Berlin, Heidelberg (2006).

J. D. Paola and R. A. Schowengerdt, “A detailed comparison of backpropagation neural network and maximum-likelihood classifiers for urban land use classification,” IEEE Trans. Geosci. Rem. Sens., 33 (4), 981–996 (1995). http://dx.doi.org/10.1109/36.406684

F. S. Erbek, C. Özkan, and M. Taberner, “Comparison of maximum likelihood classification method with supervised artificial neural network algorithms for land use activities,” Int. J. Rem. Sens., 25 (9), 1733–1748 (2004). http://dx.doi.org/10.1080/0143116031000150077

A. Akbarpour, M. B. Sharifi, and H. Memarian, “The comparison of fuzzy and maximum likelihood methods in preparing land use layer using ETM+ data (Case study: Kameh watershed),” Iran. J. Range Desert Res., 15 (3), 304–319 (2006).

J. Li, X. Li, and J. Chen, “The study of object-oriented classification method of remote sensing image,” in Proc. 1st Int. Conf. Information Science and Engineering (ICISE2009), 1495–1498 (2009).

G. Yan et al., “Comparison of pixel-based and object-oriented image classification approaches—a case study in a coal fire area, Wuda, Inner Mongolia, China,” Int. J. Rem. Sens., 27 (18), 4039–4055 (2006). http://dx.doi.org/10.1080/01431160600702632

ITT Visual Information Solutions, ENVI Help System, USA (2010).

Q. Yu et al., “Object-based detailed vegetation classification with airborne high spatial resolution remote sensing imagery,” Photogramm. Eng. Rem. Sens., 72 (7), 799–811 (2006).

R. V. Platt and L. Rapoza, “An evaluation of an object-oriented paradigm for land use/land cover classification,” Prof. Geogr., 60 (1), 87–100 (2008). http://dx.doi.org/10.1080/00330120701724152

I. J. Castillejo-González et al., “Object- and pixel-based analysis for mapping crops and their agro-environmental associated measures using QuickBird imagery,” Comput. Electron. Agric., 68 (2), 207–215 (2009). http://dx.doi.org/10.1016/j.compag.2009.06.004

S. W. Myint et al., “Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery,” Rem. Sens. Environ., 115 (5), 1145–1161 (2011). http://dx.doi.org/10.1016/j.rse.2010.12.017

J. Cohen, “A coefficient of agreement for nominal scales,” Educ. Psychol. Meas., 20 (1), 37–46 (1960). http://dx.doi.org/10.1177/001316446002000104

R. G. Pontius Jr., “Quantification error versus location error in comparison of categorical maps,” Photogramm. Eng. Rem. Sens., 66 (8), 1011–1016 (2000).

R. G. Pontius Jr. and M. Millones, “Death to kappa: birth of quantity disagreement and allocation disagreement for accuracy assessment,” Int. J. Rem. Sens., 32 (15), 4407–4429 (2011). http://dx.doi.org/10.1080/01431161.2011.552923

H. Memarian et al., “Validation of CA-Markov for simulation of land use and cover change in the Langat Basin, Malaysia,” J. Geogr. Inf. Syst., 4 (6), 542–554 (2012). http://dx.doi.org/10.4236/jgis.2012.46059

H. Memarian et al., “Hydrologic analysis of a tropical watershed using KINEROS2,” Environ. Asia, 5 (1), 84–93 (2012).

H. Memarian et al., “Trend analysis of water discharge and sediment load during the past three decades of development in the Langat Basin, Malaysia,” Hydrol. Sci. J., 57 (6), 1207–1222 (2012). http://dx.doi.org/10.1080/02626667.2012.695073

H. Memarian et al., “KINEROS2 application for LUCC impact analysis at the Hulu Langat Basin, Malaysia,” Water Environ. J. (2012). http://dx.doi.org/10.1111/wej.12002

R. A. Schowengerdt, Remote Sensing Models and Methods for Image Processing, 3rd ed., Elsevier, Oxford, UK (2007).

J. Yang and Y. Wang, “Classification of 10 m-resolution SPOT data using a combined Bayesian network classifier-shape adaptive neighborhood method,” ISPRS J. Photogramm. Rem. Sens., 72, 36–45 (2012). http://dx.doi.org/10.1016/j.isprsjprs.2012.05.011

P. S. Chavez, “An improved dark-object subtraction technique for atmospheric scattering correction of multi-spectral data,” Rem. Sens. Environ., 24 (3), 459–479 (1988). http://dx.doi.org/10.1016/0034-4257(88)90019-3

G. Rees, The Remote Sensing Data Book, Cambridge University Press, Cambridge (1999).

L. Durieux, E. Lagabrielle, and A. Nelson, “A method for monitoring building construction in urban sprawl areas using object-based analysis of Spot 5 images and existing GIS data,” ISPRS J. Photogramm. Rem. Sens., 63 (4), 399–408 (2008). http://dx.doi.org/10.1016/j.isprsjprs.2008.01.005

R. Mathieu, J. Aryal, and A. K. Chong, “Object-based classification of Ikonos imagery for mapping large-scale vegetation communities in urban areas,” Sensors, 7 (11), 2860–2880 (2007). http://dx.doi.org/10.3390/s7112860

J. Kim, B. Kim, and S. Savarese, “Comparison of image classification methods: k-nearest neighbor and support vector machines,” in Proc. 6th WSEAS Int. Conf. Circuits, Systems, Signal and Telecommunications, 133–138 (2012).

T. Cover and P. Hart, “Nearest-neighbor pattern classification,” 21–27 (1967).

R. G. Pontius Jr. et al., “Comparing the input, output, and validation maps for several models of land change,” Ann. Reg. Sci., 42 (1), 11–37 (2008). http://dx.doi.org/10.1007/s00168-007-0138-2

R. G. Pontius Jr., S. Peethambaram, and J. C. Castella, “Comparison of three maps at multiple resolutions: a case study of land change simulation in Cho Don District, Vietnam,” Ann. Assoc. Am. Geogr., 101 (1), 45–62 (2011). http://dx.doi.org/10.1080/00045608.2010.517742

M. Chen et al., “Comparison of pixel-based and object-oriented knowledge-based classification methods using SPOT5 imagery,” in Proc. WSEAS Transactions on Information Science and Applications, 477–489 (2009).

Y. Gao, J. F. Mas, and A. Navarrete, “The improvement of an object-oriented classification using multi-temporal MODIS EVI satellite data,” Int. J. Digit. Earth, 2 (3), 219–236 (2009). http://dx.doi.org/10.1080/17538940902818311

P. G. A. O. Costa et al., “Genetic adaptation of segmentation parameters,” in Object-Based Image Analysis, 679–695, Springer, Berlin, Heidelberg (2008).

L. Drăgut, D. Tiede, and S. R. Levick, “ESP: a tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data,” Int. J. Geogr. Inf. Sci., 24 (6), 859–871 (2010). http://dx.doi.org/10.1080/13658810903174803
