
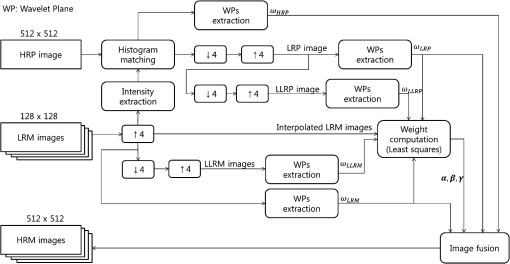

1. Introduction

For decades, various image fusion techniques have been developed to obtain a high-resolution multispectral (HRM) satellite image from a set of sensor data: a high-resolution panchromatic (HRP) image containing only intensity information and several low-resolution multispectral (LRM) images containing color information.1 Among them, the intensity-hue-saturation (IHS) method and the principal component analysis (PCA) method replace the intensity component of the LRM images with that of the HRP image and of a PCA-applied HRP image, respectively.1,2 These methods are relatively simple and easy to implement but are known to distort color information. More recently, wavelet decomposition has been adopted for satellite image fusion and is reported to cause less color distortion.3–5 Among the conventional wavelet-based methods, the “à trous” algorithm is known to be suitable for obtaining the wavelet planes of satellite images;3,6 each wavelet plane is computed as the difference between an image and its version filtered with a cubic spline function. The wavelet-based methods are generally classified into the substitution method [substitute wavelet (SW)] and the additive method [additive wavelet (AW)] according to how the wavelet planes are merged.3 In the substitution method, the wavelet planes of the LRM images are replaced by those of the panchromatic image, whereas in the additive method the wavelet planes of the panchromatic image are added to the LRM images. Since SW discards the high-frequency components of the LRM images, it usually fails to attain sufficient high-frequency detail and sometimes loses information contained in the LRM images. In contrast, AW retains the high-frequency components of the LRM images and adds those of the HRP image, and thus may introduce excessive high-frequency detail into the synthesized image.
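The “à trous” decomposition and the two merging rules described above can be sketched in Python with NumPy. This is a minimal sketch, not the authors' code: it assumes the B3 cubic-spline kernel [1, 4, 6, 4, 1]/16 commonly used with the “à trous” algorithm, mirrored image borders, and two decomposition levels. The names `smooth`, `atrous`, `aw_fuse`, and `sw_fuse` are hypothetical, and the histogram matching normally applied to the HRP image is omitted.

```python
import numpy as np

# B3 cubic-spline taps commonly paired with the "a trous" algorithm (assumption).
B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def smooth(img, level):
    """Separable B3 filtering with 2**level - 1 zeros ("holes") between taps."""
    step = 2 ** level
    pad = 2 * step
    out = np.asarray(img, dtype=float)
    for axis in (0, 1):
        widths = [(pad, pad) if a == axis else (0, 0) for a in range(2)]
        padded = np.pad(out, widths, mode="reflect")  # mirrored borders
        n = out.shape[axis]
        acc = np.zeros_like(out)
        for tap, k in zip(B3, range(-2, 3)):
            idx = np.arange(n) + pad + k * step       # dilated tap positions
            acc += tap * np.take(padded, idx, axis=axis)
        out = acc
    return out


def atrous(img, levels=2):
    """Undecimated decomposition; img == residual + sum(planes)."""
    planes, current = [], np.asarray(img, dtype=float)
    for j in range(levels):
        smoothed = smooth(current, j)
        planes.append(current - smoothed)  # wavelet plane at level j + 1
        current = smoothed
    return planes, current


def aw_fuse(lrm_band, hrp, levels=2):
    """Additive wavelet (AW): keep the LRM band and add the HRP wavelet planes."""
    pan_planes, _ = atrous(hrp, levels)
    return lrm_band + sum(pan_planes)


def sw_fuse(lrm_band, hrp, levels=2):
    """Substitute wavelet (SW): LRM residual plus HRP planes; LRM planes are discarded."""
    _, ms_residual = atrous(lrm_band, levels)
    pan_planes, _ = atrous(hrp, levels)
    return ms_residual + sum(pan_planes)


if __name__ == "__main__":
    pan = np.random.default_rng(0).random((64, 64))
    planes, residual = atrous(pan, levels=2)
    print(np.allclose(pan, residual + sum(planes)))  # True: the decomposition is exact
```

Because successive planes telescope, an image is recovered exactly as its residual plus the sum of its planes; this is what makes both the substitutive rule (swap the planes) and the additive rule (add more planes) well defined.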
Several improved wavelet-domain methods based on weighted merging have been proposed to overcome these drawbacks.4,5 Otazu et al.4 proposed a method in which the wavelet plane of the HRP image is added to each LRM image in proportion to its color intensity value [AW-luminance proportional (AWLP)], whereas Kim et al.5 proposed adding the difference between the wavelet planes of the HRP image and each LRM image, with or without considering the relative radiometric signature of the LRM images [improved AW (IAW) and IAW proportional (IAWP)]. To find a better-optimized way of adding the wavelet planes, we propose a generalized fusion equation in the form of a weighted composition of wavelet planes, where the weights are determined by the least-squares method. The proposed fusion equation includes the wavelet planes of the HRP, LRM, and degraded HRP images to control the injection of high-frequency components. In experiments with IKONOS and QuickBird satellite images, the proposed method is compared with conventional methods in terms of various objective quality metrics. The results show that the proposed method introduces no noticeable color distortion and enhances the details better than the conventional methods.

2. Proposed Wavelet-Based Fusion Method

We propose a wavelet-based image fusion method that extracts wavelet planes with the “à trous” algorithm3 and combines them using a generalized fusion equation. The weights in the fusion equation are determined by the least-squares method.

2.1. Generalized Fusion Equation

The generalized fusion equation is a weighted combination of wavelet planes, defined as

$$
\mathrm{HRM}_i = \mathrm{LRM}_i + \alpha_i W_{\mathrm{HRP}} + \beta_i W_{\mathrm{LRM}_i} + \gamma_i W_{\mathrm{LRP}}, \tag{1}
$$

where $\mathrm{HRM}_i$ is the $i$'th HRM image to be obtained, $\mathrm{LRM}_i$ is the $i$'th LRM image, and $\alpha_i$, $\beta_i$, $\gamma_i$ are the weights for the $i$'th multispectral image fusion.
In this fusion equation, the low-resolution panchromatic (LRP) image is a spatially degraded version of the HRP image obtained by decimation (by four) followed by interpolation (by four), and $W_X$ denotes the wavelet plane of image $X$; e.g., $W_{\mathrm{HRP}}$ is the wavelet plane of the HRP image. Each LRM image is interpolated (by four) and then added to the weighted sum of the HRP wavelet planes. The weighted LRM and LRP wavelet planes are added or subtracted so that high-frequency components are injected without becoming excessive. The equation is “generalized” in the sense that Eq. (1) reproduces the conventional wavelet-based fusion methods when the weights are set as shown in Table 1.

Table 1 Various wavelet-based fusion algorithms derived from Eq. (1).

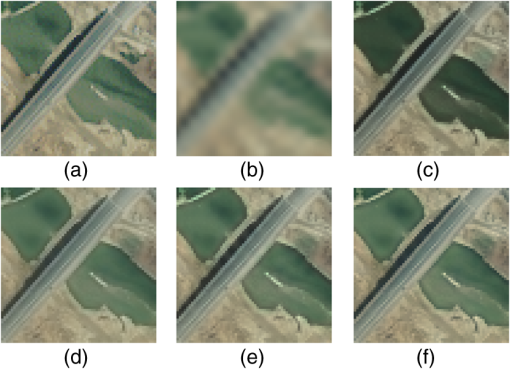

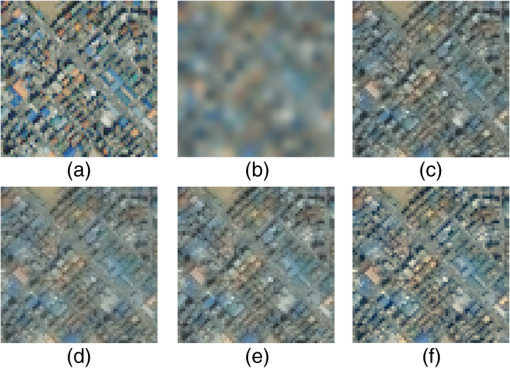
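Assuming the generalized fusion equation has the form $\mathrm{HRM}_i = \mathrm{LRM}_i + \alpha_i W_{\mathrm{HRP}} + \beta_i W_{\mathrm{LRM}_i} + \gamma_i W_{\mathrm{LRP}}$, it and the construction of the LRP image can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: $W_X$ is taken as the sum of two “à trous” planes, decimation is 4×4 block averaging, and interpolation is bilinear; these choices and the names `detail`, `decimate4`, `interpolate4`, `fuse_eq1`, and `PRESETS` are hypothetical. The two presets follow directly from the AW and SW definitions in the Introduction (for SW, $\mathrm{LRM}_i - W_{\mathrm{LRM}_i}$ is the LRM residual); the other rows of Table 1 are not reproduced here.

```python
import numpy as np

B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # B3 cubic-spline taps (assumed)


def smooth(img, level):
    """Separable B3 filtering with 2**level - 1 holes between taps, mirrored borders."""
    step, out = 2 ** level, np.asarray(img, dtype=float)
    for axis in (0, 1):
        widths = [(2 * step, 2 * step) if a == axis else (0, 0) for a in range(2)]
        padded, n = np.pad(out, widths, mode="reflect"), out.shape[axis]
        acc = np.zeros_like(out)
        for tap, k in zip(B3, range(-2, 3)):
            acc += tap * np.take(padded, np.arange(n) + (2 + k) * step, axis=axis)
        out = acc
    return out


def detail(img, levels=2):
    """W_X: sum of the "a trous" planes, i.e. the image minus its coarsest approximation."""
    approx = np.asarray(img, dtype=float)
    for j in range(levels):
        approx = smooth(approx, j)
    return np.asarray(img, dtype=float) - approx


def decimate4(img):
    """Decimation by four, assumed here to be 4x4 block averaging."""
    h, w = img.shape
    img = img[: h - h % 4, : w - w % 4]
    return img.reshape(h // 4, 4, w // 4, 4).mean(axis=(1, 3))


def interpolate4(img):
    """Interpolation by four, assumed here to be separable bilinear."""
    h, w = img.shape
    ys = (np.arange(4 * h) + 0.5) / 4 - 0.5  # output pixel centres in input coordinates
    xs = (np.arange(4 * w) + 0.5) / 4 - 0.5
    rows = np.array([np.interp(xs, np.arange(w), r) for r in img])
    return np.array([np.interp(ys, np.arange(h), c) for c in rows.T]).T


def fuse_eq1(lrm_band, hrp, lrp, alpha, beta, gamma, levels=2):
    """Assumed form of Eq. (1): LRM_i + alpha*W_HRP + beta*W_LRM_i + gamma*W_LRP."""
    return (lrm_band
            + alpha * detail(hrp, levels)
            + beta * detail(lrm_band, levels)
            + gamma * detail(lrp, levels))


# Weight settings that reproduce AW and SW under the assumed form of Eq. (1).
PRESETS = {"AW": (1.0, 0.0, 0.0), "SW": (1.0, -1.0, 0.0)}

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    hrp = rng.random((64, 64))
    lrp = interpolate4(decimate4(hrp))  # LRP: HRP decimated and re-interpolated
    lrm_band = interpolate4(rng.random((16, 16)))  # one LRM band on the HRP grid
    for name, weights in PRESETS.items():
        print(name, fuse_eq1(lrm_band, hrp, lrp, *weights).shape)
```

Because LRP keeps only the low-frequency content of HRP, a nonzero $\gamma_i$ acts on whatever detail survives the 4:1 degradation, which is one way the equation can temper the HRP detail that AW would otherwise inject in full.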

As shown in Fig. 1, the LRM images are enlarged to the size of the HRP image, and histogram matching between the HRP image and the intensity of the LRM images is then performed. For the wavelet decomposition of these images, we adopt the “à trous” algorithm as presented in Refs. 3–6. The “à trous” algorithm is known to be more suitable for image fusion than the Mallat algorithm in terms of artifacts and structural distortion: it is an undecimated, dyadic algorithm that preserves structural continuity, whereas the Mallat algorithm is a decimated algorithm that loses linear continuity.3,6

2.2. Computation of Weights by Least-Squares Method

The fusion equation (1) can be rewritten with matrices as

$$
\underbrace{\begin{bmatrix} \mathbf{w}_{\mathrm{HRP}} & \mathbf{w}_{\mathrm{LRM}_i} & \mathbf{w}_{\mathrm{LRP}} \end{bmatrix}}_{\mathbf{A}}
\underbrace{\begin{bmatrix} \alpha_i \\ \beta_i \\ \gamma_i \end{bmatrix}}_{\mathbf{x}}
=
\underbrace{\mathbf{h}_i - \mathbf{l}_i}_{\mathbf{b}},
$$

where $\mathbf{w}_X$, $\mathbf{h}_i$, and $\mathbf{l}_i$ are $W_X$, $\mathrm{HRM}_i$, and $\mathrm{LRM}_i$ stacked into column vectors of $N$ pixels. This is in the form of $\mathbf{A}\mathbf{x} = \mathbf{b}$. Since $N \gg 3$, finding the weights $(\alpha_i, \beta_i, \gamma_i)$ is an over-determined problem, and the weight vector can be computed using the pseudoinverse as

$$
\mathbf{x} = \mathbf{A}^{+}\mathbf{b} = \left(\mathbf{A}^{\mathsf{T}}\mathbf{A}\right)^{-1}\mathbf{A}^{\mathsf{T}}\mathbf{b}.
$$

In the actual implementation, since the HRM image is not available, we perform the estimation at a lower resolution. This approach is motivated by example-based super-resolution, in which the relation between an original image patch and its high-resolution counterpart is inferred from the relation between the original patch and its degraded (i.e., blurred and downsampled) version.7 This process is illustrated in Fig. 1: the LRP and LRM images are degraded by decimation and interpolation to generate the LLRP and LLRM images (lower-resolution versions of LRP and LRM), respectively, and $\mathbf{A}\mathbf{x} = \mathbf{b}$ is built at this lower resolution. However, the relation between the HRM and LRM images may differ from that between the LRM and LLRM images, which might degrade the quality of the fused multispectral images. To compensate for this discrepancy, we multiply the weights by a scaling factor.
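The lower-resolution estimation of the weights can be sketched as below. This is one reading of Fig. 1, not the authors' MATLAB implementation: on the LRM grid, the decimated HRP image plays the role of HRP, its degraded version (LLRP) the role of LRP, the degraded LRM band (LLRM) the role of $\mathrm{LRM}_i$, and the original LRM band the role of $\mathrm{HRM}_i$. The pseudoinverse solve follows the text; histogram matching is approximated by mean/standard-deviation matching, decimation is block averaging, interpolation is bilinear, and $W_X$ is the sum of two “à trous” planes. All function names are hypothetical; the default `scale=0.65` is the scaling factor reported in the experiments.

```python
import numpy as np

B3 = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0  # B3 cubic-spline taps (assumed)


def smooth(img, level):
    step, out = 2 ** level, np.asarray(img, dtype=float)
    for axis in (0, 1):
        widths = [(2 * step, 2 * step) if a == axis else (0, 0) for a in range(2)]
        padded, n = np.pad(out, widths, mode="reflect"), out.shape[axis]
        acc = np.zeros_like(out)
        for tap, k in zip(B3, range(-2, 3)):
            acc += tap * np.take(padded, np.arange(n) + (2 + k) * step, axis=axis)
        out = acc
    return out


def detail(img, levels=2):
    """W_X as the sum of the "a trous" planes (image minus coarsest approximation)."""
    approx = np.asarray(img, dtype=float)
    for j in range(levels):
        approx = smooth(approx, j)
    return np.asarray(img, dtype=float) - approx


def decimate4(img):
    h, w = img.shape  # both sides assumed to be multiples of 4
    return img.reshape(h // 4, 4, w // 4, 4).mean(axis=(1, 3))


def interpolate4(img):
    h, w = img.shape
    ys, xs = (np.arange(4 * h) + 0.5) / 4 - 0.5, (np.arange(4 * w) + 0.5) / 4 - 0.5
    rows = np.array([np.interp(xs, np.arange(w), r) for r in img])
    return np.array([np.interp(ys, np.arange(h), c) for c in rows.T]).T


def degrade(img):
    return interpolate4(decimate4(img))


def match_pan(hrp, lrm_up):
    """Mean/std matching to the LRM intensity (stand-in for histogram matching)."""
    intensity = lrm_up.mean(axis=2)
    return (hrp - hrp.mean()) / hrp.std() * intensity.std() + intensity.mean()


def lstsq_weights(features, target):
    """x = pinv(A) b, with one vectorized feature image per column of A."""
    A = np.column_stack([f.ravel() for f in features])
    return np.linalg.pinv(A) @ target.ravel()


def fuse(hrp, lrm, scale=0.65, levels=2):
    """hrp: (4H, 4W) panchromatic; lrm: (H, W, B) multispectral on its own grid."""
    bands = lrm.shape[2]
    lrm_up = np.stack([interpolate4(lrm[..., b]) for b in range(bands)], axis=2)
    hrp = match_pan(hrp, lrm_up)
    lrp = degrade(hrp)        # full-resolution LRP on the HRP grid
    p4 = decimate4(hrp)       # lower resolution: plays the role of HRP
    llrp = degrade(p4)        # lower resolution: plays the role of LRP
    fused, weights = np.empty_like(lrm_up), []
    for b in range(bands):
        llrm = degrade(lrm[..., b])  # lower resolution: plays the role of LRM_i
        feats = [detail(p4, levels), detail(llrm, levels), detail(llrp, levels)]
        x = scale * lstsq_weights(feats, lrm[..., b] - llrm)  # LRM_i stands in for HRM_i
        weights.append(x)
        m = lrm_up[..., b]
        fused[..., b] = (m + x[0] * detail(hrp, levels) + x[1] * detail(m, levels)
                         + x[2] * detail(lrp, levels))
    return fused, np.array(weights)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fused, w = fuse(rng.random((64, 64)), rng.random((16, 16, 4)))
    print(fused.shape, w.round(3))
```

The same three weights estimated on the LRM grid are reused at full resolution with HRP, the interpolated LRM band, and LRP; the scaling factor shrinks all three together, which matches the observation in the experiments that a larger factor injects more high-frequency content.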
In summary, the weights of Eq. (1) are computed at the lower resolution and then multiplied by the scaling factor.

3. Experimental Results

Twenty-eight IKONOS and 10 QuickBird images were used to evaluate the performance of the proposed fusion method. The spatial resolutions of the HRP and LRM images are 1 and 4 m, respectively, so each HRP image is four times larger than the corresponding LRM images in each dimension. Measuring the quality of the synthesized HRM images objectively is difficult because no original HRM images exist for reference. For quantitative comparison, we therefore used the original 4-m LRM images as reference HRM images and compared them with the HRM images obtained by fusing the HRP and LRM images degraded to 4- and 16-m resolution, respectively.

Figures 2 and 3 show the HRM images synthesized by the proposed method and by existing algorithms such as IHS,2 substitute wavelet intensity (SWI),8 and IAWP,5 together with the reference HRM and input LRM images. In these figures, the input LRM images are upsampled by four with interpolation for comparison. In Fig. 2, color distortion is noticeable for the IHS method but not for the wavelet-based methods (SWI, IAWP) or the proposed method. Figure 3 also shows that the proposed algorithm restores details in the synthesized HRM image better than the other methods.

For objective evaluation, we compute various quality metrics,1 namely the correlation coefficient (CC), root mean squared error (RMSE), mean structural similarity (MSSIM), universal image quality index (UIQI), quality with no reference (QNR), spectral angle mapper (SAM), ERGAS, and peak signal-to-noise ratio (PSNR). Larger values indicate better performance for CC, UIQI, MSSIM, QNR, and PSNR, whereas smaller values indicate better performance for RMSE, ERGAS, and SAM.
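Five of the listed metrics have short closed forms and can be sketched as below; MSSIM, UIQI, and QNR are omitted. These follow common definitions that may differ in detail from the implementations used for Tables 2 and 3: CC is averaged over bands, SAM is the mean per-pixel spectral angle in degrees, and ERGAS uses the HRP/LRM pixel-size ratio of 1/4 (1 m / 4 m). The function names and the `peak` argument of `psnr` are assumptions of this sketch; arrays are shaped (rows, columns, bands).

```python
import numpy as np


def rmse(ref, fused):
    """Root mean squared error over all pixels and bands."""
    return float(np.sqrt(np.mean((ref - fused) ** 2)))


def cc(ref, fused):
    """Correlation coefficient, averaged over bands."""
    return float(np.mean([np.corrcoef(ref[..., b].ravel(), fused[..., b].ravel())[0, 1]
                          for b in range(ref.shape[2])]))


def psnr(ref, fused, peak):
    """peak is the maximum possible pixel value, e.g. 2**11 - 1 for 11-bit data."""
    return float(20 * np.log10(peak / rmse(ref, fused)))


def sam(ref, fused):
    """Mean spectral angle (degrees) between reference and fused pixel vectors."""
    dot = np.sum(ref * fused, axis=2)
    norms = np.linalg.norm(ref, axis=2) * np.linalg.norm(fused, axis=2)
    cos = np.divide(dot, norms, out=np.ones_like(dot), where=norms > 0)  # zero vectors -> 0 deg
    return float(np.degrees(np.mean(np.arccos(np.clip(cos, -1.0, 1.0)))))


def ergas(ref, fused, ratio=1 / 4):
    """100 * (h/l) * sqrt(mean_k (RMSE_k / mean_k)^2), with h/l the pixel-size ratio."""
    band_rmse = np.sqrt(np.mean((ref - fused) ** 2, axis=(0, 1)))
    band_mean = np.mean(ref, axis=(0, 1))
    return float(100 * ratio * np.sqrt(np.mean((band_rmse / band_mean) ** 2)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((32, 32, 4)) + 0.1
    noisy = ref + 0.05 * rng.standard_normal(ref.shape)
    print(rmse(ref, noisy), cc(ref, noisy), sam(ref, noisy), ergas(ref, noisy))
```

SAM is invariant to a per-pixel scaling of the spectrum, so it isolates spectral (color) distortion, whereas RMSE, PSNR, and ERGAS also penalize radiometric differences.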
The objective quality assessments are summarized in Tables 2 and 3. The CPU times of the MATLAB® implementations on a personal computer (Intel Core i5 CPU 750 @ 2.67 GHz) were also measured to assess computational complexity. Approximately 80% of the running time of the proposed algorithm is spent extracting the wavelet planes of the HRP, LRM, and LRP images. IHS, Gram-Schmidt adaptive (GSA), GIHSA, AdapIHS, AdapCS, and MMSE are not based on wavelet decomposition, whereas SWI, AWLP, IAWP, NAW, and the proposed algorithm are. In Tables 2 and 3, the best two results for each assessment are highlighted in bold. The proposed algorithm is among the best two for all the objective evaluation metrics except for the UIQI and QNR tests on the QuickBird images, where it ranks third in both cases. Its performance is comparable to that of the MMSE method9 on the IKONOS data, and it is slightly better than MMSE on the QuickBird data except for UIQI. The full-size synthesized images for all the algorithms used in the comparison and MATLAB p-codes for the proposed algorithm are available at http://ispl.snu.ac.kr/~idealgod/image_fusion.

Fig. 2 Results of fusing IKONOS1 images (crops of the fused images). (a) Reference HRM, (b) input LRM, (c) IHS, (d) SWI, (e) IAWP, (f) proposed method.

Fig. 3 Results of fusing IKONOS2 images (crops of the fused images). (a) Reference HRM, (b) input LRM, (c) IHS, (d) SWI, (e) IAWP, (f) proposed method.

Table 2 The spectral quality assessment of image fusion methods (averaged values for 28 IKONOS images). The best two results for each assessment are highlighted in bold.

Table 3 The spectral quality assessment of image fusion methods (averaged values for 10 QuickBird images). The best two results for each assessment are highlighted in bold.

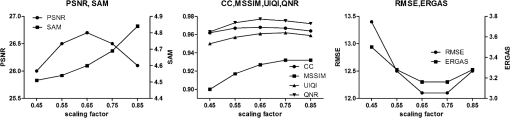

The scaling factor multiplied by the weights is set to 0.65; this value was chosen experimentally and applied to all the tested images. Figure 4 shows how performance varies with the scaling factor for the IKONOS images. As the scaling factor increases, more high-frequency components are injected, whereas a small scaling factor yields less sharpened results. We chose 0.65 because it yields the lowest accumulated ranking value over all the evaluation metrics (compared with 15 for 0.75 and 22 for 0.55).

4. Conclusion

In this article, we have proposed a wavelet-domain image fusion method that can be considered a generalization of existing methods. The fusion equation is a weighted composition of wavelet planes that includes terms both for enhancing details and for avoiding excessive high-frequency components. The weights are computed by the least-squares method at a lower resolution, and a scaling factor compensates for the discrepancy incurred by computing the weights at that lower resolution. Experimental results show that the proposed method reduces color distortion and provides high-resolution details.

Acknowledgments

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT and Future Planning (2009-0083495).

References

1. I. Amro et al., “A survey of classical methods and new trends in pansharpening of multispectral images,” EURASIP J. Adv. Signal Process., 79 (2011). http://dx.doi.org/10.1186/1687-6180-2011-79

2. T.-M. Tu et al., “A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery,” IEEE Geosci. Remote Sens. Lett. 1(4), 309–312 (2004). http://dx.doi.org/10.1109/LGRS.2004.834804

3. J. Nunez et al., “Multiresolution-based image fusion with additive wavelet decomposition,” IEEE Trans. Geosci. Remote Sens. 37(3), 1204–1211 (1999). http://dx.doi.org/10.1109/36.763274

4. X. Otazu et al., “Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods,” IEEE Trans. Geosci. Remote Sens. 43(10), 2376–2385 (2005). http://dx.doi.org/10.1109/TGRS.2005.856106

5. Y. Kim et al., “Improved additive-wavelet image fusion,” IEEE Geosci. Remote Sens. Lett. 8(2), 263–267 (2011). http://dx.doi.org/10.1109/LGRS.2010.2067192

6. M. González-Audícana et al., “Comparison between Mallat’s and the ‘à trous’ discrete wavelet transform based algorithms for the fusion of multispectral and panchromatic images,” Int. J. Remote Sens. 26(3), 595–614 (2005). http://dx.doi.org/10.1080/01431160512331314056

7. D. Glasner, S. Bagon, and M. Irani, “Super-resolution from a single image,” in 2009 IEEE 12th Int. Conf. on Computer Vision, 349–356 (2009).

8. M. Gonzalez-Audicana et al., “Fusion of multispectral and panchromatic images using improved IHS and PCA mergers based on wavelet decomposition,” IEEE Trans. Geosci. Remote Sens. 42(6), 1291–1299 (2004). http://dx.doi.org/10.1109/TGRS.2004.825593

9. A. Garzelli, F. Nencini, and L. Capobianco, “Optimal MMSE pan sharpening of very high resolution multispectral images,” IEEE Trans. Geosci. Remote Sens. 46(1), 228–236 (2008). http://dx.doi.org/10.1109/TGRS.2007.907604

10. B. Aiazzi, S. Baronti, and M. Selva, “Improving component substitution pan-sharpening through multivariate regression of MS+pan data,” IEEE Trans. Geosci. Remote Sens. 45(10), 3230–3239 (2007). http://dx.doi.org/10.1109/TGRS.2007.901007

11. S. Rahmani et al., “An adaptive IHS pan-sharpening method,” IEEE Geosci. Remote Sens. Lett. 7(4), 746–750 (2010). http://dx.doi.org/10.1109/LGRS.2010.2046715

12. J. Choi, K. Yu, and Y. Kim, “A new adaptive component-substitution-based satellite image fusion by using partial replacement,” IEEE Trans. Geosci. Remote Sens. 49(1), 295–309 (2011). http://dx.doi.org/10.1109/TGRS.2010.2051674

13. B. Sathya Bama et al., “New additive wavelet image fusion algorithm for satellite images,” in Pattern Recognit. Mach. Intell., Lect. Notes Comput. Sci. 8251, 313–318 (2013). http://dx.doi.org/10.1007/978-3-642-45062-4_42
