
1. Introduction

Creators of LULC classifications have long used remote sensing data and methods because aerial photographs and satellite imagery offer much broader spatial coverage than ground-based inventories.1–3 However, the methods used to create these classifications have changed dramatically over time as advances in sensor technology have increased the spectral, spatial, and temporal resolutions of the base imagery.4–7 A variety of pixel-based analysis methods have historically been the industry standard for creating LULC classifications, and they may still be adequate for moderate- to low-resolution imagery, for homogeneous landscapes, or for areas where high resolution imagery is costly or nonexistent.8–10 Object-based image analysis (OBIA) methods have recently proven more appropriate for LULC mapping, especially with high resolution imagery and in heterogeneous landscapes such as urban areas, where a greater level of classification detail is essential.5,11–18 This form of feature extraction allows additional variables such as shape, texture, and contextual relationships to be used to classify fine image features, and it has been shown to improve classification accuracy over pixel-based methods.12,19,20 OBIA is no longer merely an exploratory research direction; it has been implemented operationally14,17,21–27 and continues to grow in popularity among urban forestry practitioners and land use planners. OBIA-driven classifications allow users to extract more information from these “maps” than just the distribution and percentage of various classes. These highly detailed maps could prove useful for monitoring urban forest stand health28 and extracting landscape-scale indicators of ecosystem health.29,30 An evaluation of the opportunities this approach provides is therefore crucial.
Land use/land cover (LULC) classifications created using OBIA methods on high resolution imagery have not been without complications, especially where either the spectral or the spatial resolution of the imagery is still a major limiting factor. For example, imagery with high spatial resolution but low spectral resolution (RGB only, with no IR bands) can make it difficult to distinguish among types of vegetation, such as trees, shrubs, and grasses, or among impervious surfaces, such as roads and buildings.14,22,27 Even with imagery that includes one or more IR bands, it can be challenging to distinguish between vegetation classes if the imagery was collected at a time of year when all vegetation is photosynthetically active.14 In high-density urban areas, or in landscapes with dramatic topography, shadowing can make separating these classes quite problematic, even with high spectral/high spatial resolution imagery.31–34 Including remotely sensed data that capture topography and canopy and building heights in an LULC classification is therefore a logical way to improve the accuracy of imagery-driven analysis. Light detection and ranging (LiDAR) datasets, which actively sense three-dimensional (3-D) objects on the ground surface, have repeatedly been used to produce accurate terrain models and height measurements of forest canopy, buildings, and other landscape structures.
Early applications of LiDAR data included high resolution bare-ground digital elevation models, vegetation height and cover estimates, and forest structural characteristics such as leaf area index and aboveground biomass.35–37 Although OBIA methods were originally developed for use with imagery, recent research has combined LiDAR data with high resolution imagery to map urban street trees obscured by building shadows,31,33 ground impervious surfaces,16 floodplains,38 canopy cover,33 forest stand structure,39–41 and transportation and utility corridors.42 Further, adding LiDAR data to an OBIA classification algorithm has been shown to improve classification results, especially for distinguishing between trees, shrubs, and grasses, and between buildings and ground impervious surfaces.4,13,17,39,43,44 As LiDAR technologies continue to improve and data acquisition becomes faster and more cost-efficient, the availability of public-domain LiDAR data is expected to increase, so understanding how these data can enhance OBIA-driven mapping efforts is essential.

Some manual postclassification usually takes place during the classification process, no matter which method is used. In OBIA, postclassification typically involves reassigning misclassified segments to the correct class based on the analyst’s knowledge of the region and image interpretation skills. However, we do not currently know how much manual postclassification contributes to classification accuracy. Because image interpretation skills are an integral part of the OBIA method, it is reasonable to expect that spending some time applying these expert skills to the most obvious errors should increase the producer’s accuracy of the classes as well as the overall classification accuracy.
This operator-machine connection is the key technological concept behind OBIA, and it is this ability to emulate the human concept of context that drives the object segmentation process. By combining the strengths of the OBIA method with human expertise, the classification should yield a detailed, accurate, high quality dataset useful for a variety of applications. Based on what we have learned from this and other OBIA-based projects in other study areas,14,45–48 we suggest a methodology framework that others, especially new users, might consider. We believe a comprehensive classification can be created by a trained individual using freely available high spatial resolution imagery, LiDAR data, and remote sensing software packages. While others have shown the applicability of nonpublic data, or of data flown for a specific mission, we demonstrate that such data can also be used for other (postmission) purposes. None of the data used in this study were flown specifically for this project, or even for LULC mapping. The overall goal of this project was to test a commonly used OBIA method for creating an LULC classification from imagery and LiDAR (I+L) data, examine overall and class accuracies, and identify sources of error in the resulting product. Based on this goal, the specific objectives of this study were to

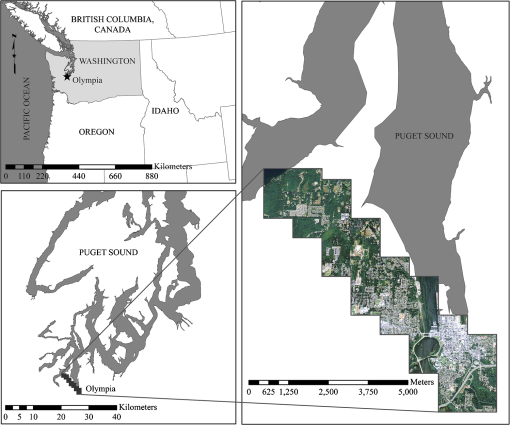

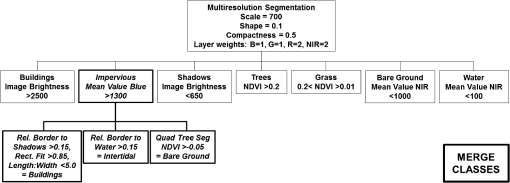

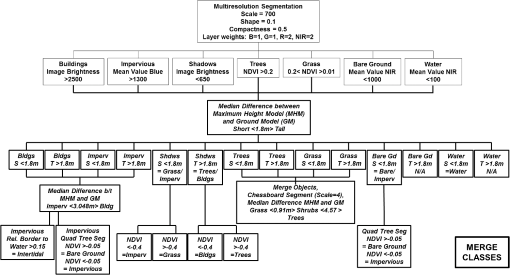

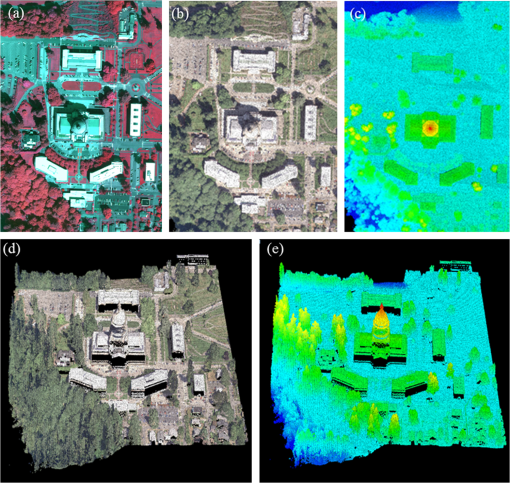

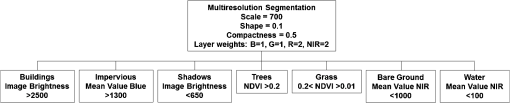

2. Methods

2.1. Study Area

The study area is a transect along a rural-to-urban gradient within the city of Olympia, Washington, USA (Fig. 1). Olympia is located at the southern end of Puget Sound and is experiencing moderate population growth; its population is expected to double in 30 to 40 years.49 Puget Sound provides numerous ecosystem services to the region’s residents, and Olympia’s urban tree canopy, which is detectable through LULC mapping, plays a vital role in providing them.

2.2. Remote Sensing Datasets

In this work, we used 16-bit, four-band, color infrared imagery acquired in March 2008 with a pixel resolution of 0.12 m (0.4 feet). Discrete-point LiDAR data were also acquired over the study area on March 2 to 3, 2008, with the Optech 3100 sensor at a survey altitude of 899.16 m (2950 feet). The LiDAR data conform to Puget Sound LiDAR Consortium accuracy standards,50 with an absolute vertical accuracy of 0.03 m RMSE (0.11 feet RMSE). The point cloud was collected with up to 4 returns per pulse with a density of . Figure 2 shows the remote sensing datasets used in this study.

Fig. 2 Remotely sensed data used to develop the object-based image analysis land use/land cover (LULC) algorithm: (a) false-color near-infrared aerial imagery, (b) LiDAR point cloud colored by true color RGB values, (c) LiDAR data colored by height, (d) LiDAR extruded by height and colored by true color RGB values from the coregistered imagery, and (e) LiDAR point cloud colored by LiDAR height; all scenes show the Washington State Capitol Building.
We generated a 1-m resolution digital terrain model (DTM) from the LiDAR data using the freely available FUSION software developed by the USDA Forest Service, Pacific Northwest Research Station.51,52 To do so, we used the GroundFilter algorithm in FUSION to identify likely ground points in the LiDAR point cloud and then created a smoothed 1-m raster from those points. We then used this ground model to produce height-normalized canopy height metrics at the same resolution; together with the ground model, these were the key LiDAR-based variables in our OBIA-driven classification.

2.3. Land Use/Land Cover Classification

2.3.1. Developing a basic classification

We originally developed an OBIA algorithm for a separate LULC classification project in Seattle, Washington,14 and wanted to see whether it would work, with limited modification, in other locations within the same ecoregion (i.e., urbanized Pacific Northwest coastal areas). The LULC classes we selected are prominent throughout the greater Pacific Northwest coastal region; although certain classes are under-represented in this study area (e.g., intertidal and bare ground), we kept them in the algorithm for future OBIA analyses in other study areas along the Pacific Northwest coast.47 Two LULC classification approaches were used in this study: one used imagery alone (IA), and the second used imagery and LiDAR (I+L) data. We used Trimble’s® eCognition® software to create a segmentation and basic classification for each of the two datasets (IA and I+L). In both cases, the classification process began with a multiresolution segmentation of the four-band aerial imagery, using a scale parameter of 700, a shape parameter of 0.1, a compactness parameter of 0.5, and layer weights of , , , and (Fig. 3).
We selected these parameters through skilled visual interpretation of the segmentations produced with different scale parameters. We recognize the inherent subjectivity in selecting a segmentation scale and the resulting image object size, but an in-depth discussion of that topic is beyond the scope of our study. Several other published studies have focused on optimizing segmentation scale selection and have documented how widely these parameters can vary depending on the object(s) of interest.53–55

Fig. 3 Decision tree for the initial basic classifications for both imagery alone (IA) and imagery and LiDAR.

We used a combination of logical values, the feature space optimization tool, and two-dimensional (2-D) feature space plots in eCognition®, as well as expert interpretation, to select threshold values. LiDAR data thresholds were assigned at logical height cutoffs for distinguishing between tall and short objects. The mean brightness of each object, computed as the mean of the four bands, was used to classify buildings and shadows at the two brightness extremes (i.e., light and dark, respectively). Normalized difference vegetation index (NDVI) thresholds were based on logical breakpoints between photosynthetically active tree vegetation, senescent grass, and nonphotosynthetic impervious surfaces. Feature space optimization and 2-D feature space plots allowed us to find appropriate thresholds using brightness for rooftops, darkness for shadows, darkness in the near-infrared for water and for bare ground, and brightness in the blue band for impervious surfaces. Finally, we refined the classification with several hierarchical rules that remove small misclassified objects enclosed by other objects (e.g., cars on freeways).
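The brightness and NDVI rules above can be sketched in plain NumPy to show how object-level features are derived from pixel values. This is a minimal, hypothetical illustration, not the eCognition rule set: it assumes a segment-label raster whose ids run 0..n-1, band values rescaled to 0-1 reflectance, made-up class codes, and placeholder thresholds. Rules are checked in order, so the first matching class wins.

```python
import numpy as np

# Hypothetical class codes for this sketch.
IMPERVIOUS, VEGETATION, BUILDING, SHADOW = 0, 1, 2, 3


def object_means(band, labels):
    """Mean value of `band` inside each segment (segment ids 0..n-1)."""
    ids = labels.ravel()
    sums = np.bincount(ids, weights=band.ravel().astype(float))
    counts = np.bincount(ids)
    return sums / counts


def basic_rules(blue, green, red, nir, labels,
                dark_t=0.10, bright_t=0.60, ndvi_t=0.20):
    """Assign one class per segment from mean brightness and NDVI.

    Thresholds are illustrative placeholders, not the study's values.
    """
    blue_m, green_m, red_m, nir_m = (object_means(x, labels)
                                     for x in (blue, green, red, nir))
    brightness = (blue_m + green_m + red_m + nir_m) / 4.0  # mean of four bands
    ndvi = (nir_m - red_m) / np.maximum(nir_m + red_m, 1e-12)  # avoid /0
    # np.select takes, per segment, the first condition that is True.
    return np.select(
        [brightness < dark_t, brightness > bright_t, ndvi > ndvi_t],
        [SHADOW, BUILDING, VEGETATION],
        default=IMPERVIOUS,
    )


# Four one-pixel segments: dark, green, bright, and mid-gray.
labels = np.array([[0, 1, 2, 3]])
blue = np.array([[0.05, 0.05, 0.8, 0.3]])
green = np.array([[0.05, 0.10, 0.8, 0.3]])
red = np.array([[0.05, 0.05, 0.8, 0.3]])
nir = np.array([[0.05, 0.50, 0.8, 0.3]])
result = basic_rules(blue, green, red, nir, labels)
print(result)  # [3 1 2 0]
```

Putting the brightness tests first mirrors the idea that the brightness extremes identify buildings and shadows before vegetation is considered; the real ordering and values are those of the decision tree in Fig. 3.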
2.3.2. Classification using imagery alone

At the end of the processes described above, we had a basic classification based solely on imagery; however, it contained multiple objects misclassified as shadows, did not discriminate well between buildings and ground impervious surfaces, and misclassified intertidal areas as ground impervious. We added several rules to the algorithm to address these errors (Fig. 4). The relative border to shadows feature was used to identify buildings incorrectly assigned to the ground impervious class and reclassify them. This worked well because buildings cast shadows, but ground impervious surfaces do not. Next, each shadow object was reclassified by finding the neighboring object with which it shared the largest border and assigning the shadow that object’s class. A second building rule then selected ground impervious objects with a large rectangular fit and a small length:width ratio. This located objects with a generally square shape and helped find buildings that did not have large relative borders to shadows (such as those surrounded by trees). Intertidal areas misclassified as ground impervious surfaces were reclassified based on their relative border to water. Finally, bare ground was located by performing a quad tree segmentation on the ground impervious surfaces layer and classifying objects with an NDVI value above a threshold as bare ground. A final set of filtering algorithms removed any remaining small misclassified objects.

2.3.3. Classification using imagery and LiDAR data

We also needed to apply additional rules to the I+L classification to correct misclassified objects (Fig. 5). The median difference between the maximum height model and the ground model was computed for all objects.
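Two object features carry much of the weight in these rules: relative border (to shadows for buildings, to water for intertidal areas) and the per-object median of the maximum height model minus the ground model. The NumPy sketch below approximates, rather than reproduces, eCognition’s features. It assumes a label raster with ids 0..n-1, counts border length in 4-connected pixel edges (ignoring edges on the image boundary), and assumes both height rasters are already on the label grid. The class codes, the 0.3 relative-border cutoff, and the NDVI threshold are hypothetical; only the 1.8-m height cutoff comes from the text.

```python
import numpy as np

# Hypothetical class codes for this sketch.
IMPERVIOUS, TREE, BUILDING, GRASS, SHADOW = 0, 1, 2, 3, 4


def relative_border(labels, obj_class, target):
    """Share of each object's border (in pixel edges) touching objects of
    class `target`. Edges on the image boundary are ignored."""
    n = obj_class.size
    total = np.zeros(n)
    shared = np.zeros(n)
    # Compare every pixel with its right-hand and its lower neighbour.
    for a, b in ((labels[:, :-1], labels[:, 1:]), (labels[:-1, :], labels[1:, :])):
        edge = a != b
        a, b = a[edge], b[edge]
        np.add.at(total, a, 1)
        np.add.at(total, b, 1)
        np.add.at(shared, a, (obj_class[b] == target).astype(float))
        np.add.at(shared, b, (obj_class[a] == target).astype(float))
    with np.errstate(invalid="ignore", divide="ignore"):
        return shared / total  # NaN for an object with no internal border


def median_height(max_model, ground_model, labels):
    """Median of (maximum height model - ground model) within each object."""
    diff = max_model - ground_model
    return np.array([np.median(diff[labels == i]) for i in range(labels.max() + 1)])


def tall_short_split(med_h, obj_ndvi, height_t=1.8, ndvi_t=0.2):
    """Tall/short by median height, then green/non-green by NDVI."""
    tall = med_h > height_t
    green = obj_ndvi > ndvi_t
    return np.where(tall, np.where(green, TREE, BUILDING),
                    np.where(green, GRASS, IMPERVIOUS))


labels = np.array([[0, 0, 1],
                   [0, 0, 1],
                   [2, 2, 2]])
obj_class = np.array([IMPERVIOUS, SHADOW, SHADOW])

# IA-style building rule: impervious objects bordered mostly by shadow.
rb = relative_border(labels, obj_class, SHADOW)
candidates = (obj_class == IMPERVIOUS) & (rb > 0.3)

# I+L-style rule: median height above ground first, then NDVI.
ground = np.zeros((3, 3))
max_model = np.array([[9.0, 10.0, 0.5],
                      [11.0, 12.0, 0.2],
                      [0.1, 0.3, 0.2]])
med = median_height(max_model, ground, labels)
classes = tall_short_split(med, np.array([0.1, 0.0, 0.6]))
print(rb, candidates, med, classes)
```

Using the median rather than the mean keeps a few tall returns (e.g., an overhanging branch) from lifting an otherwise short object above the cutoff.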
This value was a good approximation of each object’s height above ground, and a threshold of 1.8 m (6 feet) was used to separate tall objects, such as buildings and trees, from short objects, such as grass and ground impervious surfaces. Shadows were first assigned to temporary (tall and short) classes based on median difference. Using an NDVI threshold, tall temporary objects were then classified as trees or buildings, and short temporary objects as grass or ground impervious. A chessboard segmentation was then run on the grass and tree classes, followed by a reclassification based on median height. Median height separated these two classes better than NDVI alone, because irrigated lawns have high NDVI values and were easily confused with trees. Median height was likewise used to reclassify ground impervious surfaces and buildings. Intertidal areas misclassified as ground impervious were reclassified based on their relative border to water. As in the IA classification, bare ground was located within the ground impervious class using a quad tree segmentation and an NDVI threshold. Lastly, a set of filtering algorithms removed small misclassified objects.

2.3.4. Manual postclassification of land use/land cover classifications

To improve the classifications, the analyst systematically reclassified misclassified objects by hand, working through the study area in a grid pattern from west to east and north to south. Postclassification took longer for the IA classification than for the I+L classification because the IA classification contained more errors: the IA postclassification took approximately 12 h, while the I+L postclassification took only a third of that time (approximately 4 h). In both datasets, the greatest time sink was correcting confusion between driveways and houses. The segmentation merged small sections of driveway with houses, so we had to manually reclassify these driveway sections as ground impervious rather than as buildings.
There was no good way to differentiate ground impervious surfaces from buildings in the IA classification, and postclassifying residential developments was time consuming. It was also difficult to separate grass and trees in the IA classification, so we spent considerable time manually reclassifying irrigated lawns as grass rather than trees. Although it might be relatively easy to adjust the algorithm to better distinguish between driveways and houses using LiDAR data (i.e., making the segmentation size smaller and relying on height differences), doing so would be challenging for the IA classification: this imagery does not contain enough spectral information to differentiate trees from grass or buildings from ground impervious surfaces. Texture might have helped discriminate trees, but texture computation is so memory intensive (it would have taken weeks to run the algorithm) that it was not used in this project. Further, the texture algorithm’s run time could well equal or exceed the time it took to manually reclassify objects, and the resulting improvement in classification accuracy is less certain. Our approach to testing the effect of postclassification on the OBIA-driven LULC classification results was fairly simple; however, it provides a general overview of how postclassification affects accuracy.

2.4. Accuracy Assessment

An accuracy assessment was conducted for four classifications: IA and I+L, each with and without postclassification. Accuracy assessment methods followed the guidelines of Foody2,56 and Congalton and Green.57 First, the area of each class was quantified for all tiles in the study area using polygons from the segmentation algorithm (without manual postclassification). These areas were used to determine the number of points that should fall in each class.
Buffering was not used because we did not want to bias our analysis toward large objects, which tend to be classified correctly by OBIA methods. We then generated a total of 300 stratified random points, distributed among the classes in proportion to class area. Each point was visually classified by a trained image analyst using the 12-cm color infrared imagery and a canopy/building surface model created from the LiDAR data. The classes used are described in Table 1.

Table 1 Land use/land cover class descriptions.

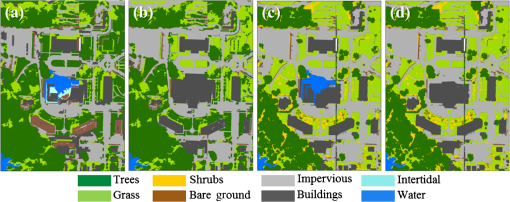
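The proportional stratified sampling described in Section 2.4 can be sketched as follows. This is a minimal sketch under stated assumptions: class areas are given in any consistent unit (e.g., pixel counts from the segmentation map), leftover points from rounding go to the classes with the largest fractional quotas (largest-remainder rounding is our choice; the text does not say how rounding was handled), and points are drawn as pixel locations without replacement. The class areas in the example are made up.

```python
import numpy as np


def allocate_points(class_areas, total=300):
    """Split `total` sample points among classes in proportion to area,
    using largest-remainder rounding so the counts sum exactly to `total`."""
    names = list(class_areas)
    areas = np.array([class_areas[k] for k in names], dtype=float)
    quotas = total * areas / areas.sum()
    counts = np.floor(quotas).astype(int)
    leftover = total - counts.sum()
    # Give the leftover points to the classes with the largest fractional parts.
    order = np.argsort(-(quotas - counts), kind="stable")
    counts[order[:leftover]] += 1
    return dict(zip(names, counts.tolist()))


def sample_points(class_map, counts, seed=0):
    """Draw the allocated number of random pixel locations from each class.

    Raises ValueError if a class has fewer pixels than requested points.
    """
    rng = np.random.default_rng(seed)
    points = {}
    for code, n in counts.items():
        rows, cols = np.nonzero(class_map == code)
        pick = rng.choice(rows.size, size=n, replace=False)
        points[code] = list(zip(rows[pick].tolist(), cols[pick].tolist()))
    return points


# Hypothetical class areas keyed by class code.
counts = allocate_points({0: 2.0, 1: 1.0}, total=10)
print(counts)  # {0: 7, 1: 3}
```

Rounding each quota independently can leave the total one or two points off 300; largest-remainder rounding guarantees the requested total while staying as close as possible to strict proportionality.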

3. Results and Discussion

3.1. Accuracy for Imagery Alone Classification

The OBIA method produced adequate results using the aerial imagery alone; overall accuracy was 71.3%. The method was effective at extracting trees/shrubs and, to a lesser degree, ground impervious surfaces (e.g., roads, driveways, paved parking lots). With the exception of water, tree canopy was classified most effectively, with a producer’s accuracy of 92.7% and a user’s accuracy of 77.4%. Because the producer’s accuracy was greater than the user’s accuracy, these results suggest that our classification captured about as much detail as these data allow. They also indicate that the algorithm overclassified tree canopy: the points misclassified as tree canopy were generally grass. The ground impervious class had a producer’s accuracy of 66% and a user’s accuracy of 75.6%, indicating that we may have been able to optimize the algorithm further to achieve a higher producer’s accuracy. Ground impervious surface points were misclassified into every class except water. Excluding water, producer’s accuracy for the remaining classes was near or below 50%.

3.2. Accuracy for Imagery Alone Classification with Postclassification

The overall classification accuracy improved slightly, to 74.7%. Again, the method appeared to be effective at extracting tree canopy and ground impervious surfaces. Producer’s accuracy was greater than user’s accuracy for the tree canopy and building classes. Although producer’s accuracy decreased for tree/shrubs, producer’s accuracy for the grass class increased from 36% to 42%. Manual postclassification also increased producer’s accuracy for ground impervious (from 66% to 72.3%), buildings (from 52% to 68%), and water (from 96% to 100%).
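The producer’s, user’s, and overall accuracies reported in Sections 3.1 and 3.2 are all derived from an error matrix. A minimal sketch follows, assuming the common convention (used, e.g., by Congalton and Green) that rows are mapped classes and columns are reference classes; the two-class matrix is invented for illustration and is not data from this study.

```python
import numpy as np


def accuracy_report(matrix, names):
    """Overall, producer's, and user's accuracy from an error matrix.

    matrix[i][j] = number of points mapped as class i whose reference
    (ground truth) class is j. A class with no mapped or no reference
    points yields NaN for the corresponding accuracy.
    """
    m = np.asarray(matrix, dtype=float)
    correct = np.diag(m)
    overall = correct.sum() / m.sum()
    with np.errstate(invalid="ignore", divide="ignore"):
        producers = correct / m.sum(axis=0)  # correct / reference (column) total
        users = correct / m.sum(axis=1)      # correct / mapped (row) total
    return overall, dict(zip(names, producers)), dict(zip(names, users))


# Invented example: 10 grass points were mapped as tree.
overall, pa, ua = accuracy_report([[40, 10],
                                   [5, 45]], ["tree", "grass"])
print(f"overall={overall:.3f}")  # overall=0.850
```

In this toy matrix the tree class has a producer’s accuracy of about 0.89 but a user’s accuracy of 0.80. Whenever producer’s accuracy exceeds user’s accuracy, more points were mapped into the class than belong to it, which is the overclassification pattern described above for tree canopy.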
3.3. Accuracy for Imagery and LiDAR Classification

Adding LiDAR data to the LULC classification process improved overall classification accuracy to 79.7% and enabled us to split the tree/shrub class into distinct tree and shrub classes. Interestingly, this method appeared to be more effective at extracting grass and buildings than trees and ground impervious surfaces, the reverse of the IA classification algorithm. The grass class had a producer’s accuracy of 90% but a user’s accuracy of 64.3%, as some points classified as grass were actually trees, shrubs, or even bare ground. Producer’s and user’s accuracies for the building class were both 88%, demonstrating the improvement the LiDAR data provided for this class. For both the tree and ground impervious classes, user’s accuracy was greater than producer’s accuracy (92.7% versus 84.17% for trees, and 88.10% versus 78.7% for ground impervious). Although it was separated from the tree class, the shrub class had a low producer’s accuracy of 52.9% (user’s accuracy 50%).

3.4. Accuracy for Imagery and LiDAR Classification with Postclassification

The small amount of time spent postclassifying the I+L classification (approximately 4 h) improved overall classification accuracy by 1 percentage point, from 79.7% to 80.7%. Because the LiDAR data appeared to have resolved most of the errors in the IA classification, there were fewer errors left to correct in the I+L classification. Manual postclassification slightly improved the producer’s accuracy of the ground impervious and water classes, from 78.7% and 96% to 83% and 100%, respectively. It also resolved large, obvious errors that covered a small percentage of the landscape but were especially glaring when viewing the final map, thus improving the overall quality of the product.

3.5. Comparisons Between the Four Classifications

As is evident in Table 2, overall accuracy increased with each classification iteration.
Two of our main objectives were to determine the contribution of LiDAR data to classification accuracy and to assess the impact of manual postclassification on classification accuracy. Manual postclassification was more time intensive for the IA classification; approximately 12 h of work resulted in a 3.3 percentage point increase in overall classification accuracy, reflected mostly in the building and ground impervious class accuracies. When LiDAR data were added to the classification, overall accuracy increased by an additional 5 percentage points, with substantial increases in producer’s accuracy for the grass, building, and ground impervious classes. The addition of LiDAR data enabled us to divide the tree/shrub class into separate tree and shrub classes, which resulted in a higher level of classification detail but lower class accuracies. We suspect this is partly due to confusion between low tree crown edges and actual shrubs, and partly due to the image interpreter’s inability to determine object height (i.e., trees versus shrubs) on the 2-D imagery used as ground truth. Although producer’s accuracy for the tree class decreased in the I+L classifications, user’s accuracy increased substantially.

Table 2 Overall and producer’s accuracies for all four classifications, and percent improvement in accuracies over imagery alone (IA) for each subsequent classification iteration [i.e., IA with postclassification (PC), imagery and LiDAR (I+L), and I+L with PC].

Manual postclassification of the I+L classification took approximately 4 h and improved overall accuracy by 1 percentage point. The overall difference between the IA classification (without manual postclassification) and the I+L classification (with manual postclassification) was 9.4 percentage points, from 71.3% to 80.7% (Table 2). Manual postclassification did not substantially increase the accuracy of our classifications; however, we believe any increase in accuracy is desirable, and given the short time it takes, it is worth the investment. Not only is the accuracy of the map improved, but a more visually pleasing product is created as well. For example, without manual postclassification, our map would have shown the State Capitol Building as covered and surrounded by water, which in reality is merely a shadow cast by the Capitol rotunda (see Fig. 6).

Fig. 6 LULC classifications: (a) IA, (b) IA with postclassification, (c) imagery and LiDAR (I+L), (d) imagery and LiDAR with postclassification. Notes: (a) and (b) do not contain the shrub class. Misclassification errors in (a) and (b) visually illustrate how the addition of LiDAR can increase class accuracies.

3.6. Error Attribution

3.6.1. Error in the imagery alone classification

Examining each erroneous point individually helped us determine specific sources of error in the classifications. In the IA classification, there were discrepancies for 85 of the 300 accuracy assessment points, most of which fell into four categories. The main source of misclassification, accounting for half of our error (43 points), was our inability to discriminate buildings from ground impervious surfaces and trees from grass using spectral values alone. Irrigated lawns, whose high NDVI values make them difficult to separate from trees without height information, accounted for 27 of these erroneous points.
Several of these points (as well as a few others) were also affected by errors related to spectral thresholds, where objects were brighter or darker in specific bands than expected. For example, buildings with asphalt roofs had brightness values similar to those of ground impervious surfaces and were therefore included in the ground impervious class. An additional 13 points were located on objects that fell in shadows, resulting in inaccurate classification of those objects. Eleven points were located along the edge, or borderline, between two classes; in these cases, each point had about an equal chance of being assigned to either class by both the image analyst and the image interpreter (i.e., the point could belong to either class, depending on individual opinion). Several class-specific sources of error are also worth noting. The bare ground class had the highest error (0% accuracy), but that appears to be a consistent outcome in LULC mapping.14 A secondary source of class error was confusion between the water and intertidal classes. It would be relatively easy to merge the intertidal areas into the water class, but because these areas are unique ecosystems high in biodiversity, we wanted to keep them in a separate class. Notably, one point classified as grass was actually a building with a “green roof.” This could be an important feature to keep in mind as green roofs and living buildings gain popularity, especially in urban areas. Finally, 7 of the 85 error points were due to differences in interpretation between the image analyst who created the classification and the image interpreter who conducted the accuracy assessment; 5 of these were water versus intertidal.

3.6.2. Error in the imagery and LiDAR classification

There were discrepancies for 61 of the 300 points in the I+L classification.
Most of these points also fit into four categories of error, but these differ from the errors observed in the IA classification. As expected, the addition of LiDAR data substantially helped differentiate spectrally similar objects of varying heights. However, the main source of error was still confusion among trees, shrubs, and grass, compounded by errors specific to the shrub class. These errors resulted from a combination of two very different issues: (1) the difficulty of assessing an object’s height from a 2-D image and (2) the position of the shrub class at the edge of two height classes, with a relatively narrow range of height values. The first issue is an unavoidable incongruity of using 2-D imagery to ground truth a 3-D dataset. The second issue involves edge effects, which give rise to several different types of errors. We anticipated that adding LiDAR data to the image classification would help distinguish among trees, shrubs, and grasses, and between buildings and ground impervious surfaces. The inclusion of LiDAR data did indeed help the classification process, added a greater level of detail, and improved classification accuracies, but it was not error-free. Several of these errors (6 of the 61 points) were directly due to edge effects associated with LiDAR data and the OBIA technique, and an additional 28 “borderline” points could also be attributed to edge effects. Edges affect how objects are classified (or misclassified) and contribute to error in the LULC classification in several ways. One involves the combination of the spatial resolution of the imagery and the object’s size. When spatial resolution is low, pixels along an object’s edge can straddle the boundary with a neighboring object; in other words, an object will contain mixed pixels along its edges, no matter its size.
For example, a single pixel (or object) may contain both the edge of a building and the edge of a sidewalk, leaving the algorithm to decide whether that pixel (or object) is a building or a ground impervious surface. Spectral complexity, a second source of edge errors, is a further complication of using high spatial resolution imagery. Consider the roof of a downtown building: it is usually composed of different materials, such as metal flashing and asphalt; it may carry HVAC vents, communications equipment, planters, outdoor furniture, or parked cars; and each of these objects, as well as the building itself, casts shadows. Although we can recognize the single, large object as a building based on its shape and context, the computer has a more difficult time “seeing” all of these smaller objects as components of a single building object. A third edge-related issue arises where the outer edge of a tree canopy reaches near or touches the ground: it is classified as shrub or grass because of the low heights of the LiDAR returns from that location. We suggest that including some form of crown segmentation could help with this problem.58 We also observed mismatches between datasets caused by the radial distortion inherent in orthophotos (but not in LiDAR data), which left the imaged canopy slightly offset from the LiDAR canopy. This problem is well documented in the literature, and LiDAR-based orthorectification has been suggested as a way to minimize these misalignment issues.59–64

3.7. Other Issues

Apart from the issues described above, the only other apparent error in our classification is due to artifacts at the LiDAR data seams, or tile boundaries (see the vertical artifact in Fig. 6). This can be resolved by a few different tiling and stitching processes, depending on the size of the full dataset.
One option is to stitch all of the tiles together into a single, large seamless tile. For large study areas, however, the stitched file can exceed a terabyte, which strains the analytical capabilities of most desktop workstations. A more practical approach is to make the LiDAR data tiles larger than the imagery tiles and merge the overlapping areas, which reduces edge effects and makes the tiles appear seamless. We did not perform any tiling or stitching on these datasets because the vendor had already tiled both the LiDAR data and the imagery, and the error did not become apparent until the classification processes were complete. Because this was a methods-based study, we did not attempt to correct these errors in the present classifications; instead, we note this as an issue to resolve in future studies.
3.8. Toward an Object-Based Methodology Framework
Based on the strengths and limitations we have recognized through this and other OBIA-based projects in other study areas,14,45–48 we suggest a methodology framework for developing OBIA-based LULC classifications in urban areas. Following this framework should help avoid some of the errors present in this case study. Our aim is to share what we have learned from these experiments and to suggest ways to improve on the methods we employed here. We hope this information can serve as a starting point for anyone attempting an OBIA-based LULC classification for the first time, regardless of budget or experience level.
When planning an OBIA-based LULC classification, first check which remote sensing datasets already exist for your study area. OBIA methods can be used on free, publicly available, high spatial resolution imagery such as U.S. National Agriculture Imagery Program (NAIP) imagery, which has national extent and has been classified successfully to produce detailed LULC maps for urban planning, management, and scientific research.14,15,17 NAIP imagery can be accessed through the United States Department of Agriculture (USDA) Geospatial Data Gateway.65 OBIA methods can also be applied to imagery from high spatial resolution satellites with global coverage, such as QuickBird or GeoEye, although these data are typically not free of cost. LiDAR data consortia devoted to developing public-domain, high resolution LiDAR datasets can facilitate these efforts as well, and such datasets can be accessed through the United States Geological Survey (USGS), the lead federal agency coordinating efforts toward a national LiDAR dataset.66 LiDAR data have been shown to be especially useful for classifying areas that fall within shadows and for distinguishing between objects of similar spectral reflectance but different heights.31,33
However, LiDAR data may not always be available. In that case, some of the issues inherent to using IA can be addressed with ancillary datasets. Many municipal agencies have already invested considerable time and money in creating parcel datasets, building footprints, transportation routes, utility corridors, flood zones, and other geospatial data layers. In many locales these datasets are freely available to the public, and when added to an LULC classification they not only help create visually pleasing products but can also be used for class discrimination, reducing the overall time spent on classification development.14 This can be as simple as masking out a water class with a coastline or open-water body boundary file, or using a building footprint layer to help discriminate buildings from ground impervious surfaces such as roads, driveways, or patios.
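To illustrate how normalized height and ancillary layers can separate classes that look alike spectrally, the following is a minimal Python sketch of a rule-based, per-object classifier. It is not the rule set used in this study: the thresholds, the rule order, and the attribute names (`mean_ndvi`, `mean_height`, `in_water_mask`, `footprint_overlap`) are hypothetical. `mean_height` is assumed to be an object's mean normalized height (a LiDAR surface model minus a LiDAR terrain model), and in practice all of these attributes would be computed per segment inside the OBIA software.

```python
import numpy as np

# Hypothetical thresholds for illustration only; they are NOT values from this study.
NDVI_VEG = 0.3               # objects with mean NDVI at or above this count as vegetation
TREE_MIN_HEIGHT = 5.0        # metres above ground
SHRUB_MIN_HEIGHT = 0.5       # metres above ground
BUILDING_MIN_HEIGHT = 2.5    # metres above ground
FOOTPRINT_MIN_OVERLAP = 0.5  # share of object area inside an ancillary building footprint

CLASSES = np.array(["water", "tree", "shrub", "grass", "building", "ground impervious"])


def classify_objects(mean_ndvi, mean_height, in_water_mask, footprint_overlap):
    """Assign one LULC class per image object from per-object attributes.

    Rules are tested in order and the first match wins, so the ancillary
    water mask overrides everything else.
    """
    ndvi = np.asarray(mean_ndvi, dtype=float)
    height = np.asarray(mean_height, dtype=float)
    water = np.asarray(in_water_mask, dtype=bool)
    overlap = np.asarray(footprint_overlap, dtype=float)

    veg = ndvi >= NDVI_VEG
    # A non-vegetated object is a building if it lies mostly inside a footprint
    # polygon or if LiDAR says it is tall; otherwise it falls to the default.
    building = ~veg & ((overlap >= FOOTPRINT_MIN_OVERLAP) | (height >= BUILDING_MIN_HEIGHT))

    conditions = [
        water,
        veg & (height >= TREE_MIN_HEIGHT),
        veg & (height >= SHRUB_MIN_HEIGHT),
        veg,
        building,
    ]
    # Indices into CLASSES; default=5 is "ground impervious".
    idx = np.select(conditions, [0, 1, 2, 3, 4], default=5)
    return CLASSES[idx]


labels = classify_objects(
    mean_ndvi=[0.10, 0.60, 0.60, 0.60, 0.05, 0.08, 0.10],
    mean_height=[0.0, 12.0, 1.2, 0.1, 8.0, 0.0, 6.0],
    in_water_mask=[True, False, False, False, False, False, False],
    footprint_overlap=[0.0, 0.0, 0.0, 0.0, 0.9, 0.0, 0.0],
)
print(labels.tolist())
```

Under rules like these, any vegetated object whose mean height falls below the tree threshold is labeled shrub or grass, even when it is actually the low-hanging edge of a tree crown, which mirrors the canopy-edge error discussed earlier. The last object in the example shows LiDAR height alone flagging a building that has no footprint polygon.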
A substantial amount of pre-processing will likely need to be completed before the segmentation and classification processes begin. Industry-standard pre-processing techniques include atmospheric and radiometric correction, orthorectification, georeferencing, and mosaicking of images, as well as creating LiDAR-based DTMs, canopy height models, and other raster files for height categories of interest. Based on our experience, other pre-processing steps might include tiling and stitching the imagery and LiDAR datasets to reduce edge effects67 and creating a tree crown segmentation layer to minimize misclassification of tree canopy edges as shrubs or grass.58 The next decision is which software to use to create the classification. Several software packages and add-on modules are currently available for performing OBIA. Examples include the freely available SPRING software developed by Brazil’s National Institute for Space Research (INPE) and the industry-leading proprietary eCognition® software, a product of Trimble®. Although anyone can learn to use these or other OBIA software programs, proprietary programs, which typically offer many more algorithm development options, can have a steeper learning curve. For those interested in starting an OBIA project, we have created a guided analytical training workshop that is freely available online.68
Postclassification is generally part of the LULC classification process, no matter which method is used, especially when obvious errors might render the final product unacceptable to the end user. For OBIA-based projects, postclassification usually involves reassigning misclassified segments to the correct class based on the analyst’s expertise and knowledge of the region. Manually postclassifying an OBIA-based classification should take only a short time, making the resulting increase in accuracy worth the effort.
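Because an OBIA classification assigns one label per image object, a manual correction made to one object updates every pixel in that object at once, which is part of why manual postclassification can be quick. A minimal Python sketch of that idea follows, assuming a raster of object IDs (`segment_ids`), a per-object class table, and a dictionary of analyst corrections; the function name and the integer class codes are hypothetical and do not correspond to any particular software package.

```python
import numpy as np

# Hypothetical class codes for illustration.
TREE, SHRUB, GRASS, BUILDING, GROUND_IMPERVIOUS = 1, 2, 3, 4, 5


def apply_manual_edits(segment_ids, segment_class, edits):
    """Apply analyst corrections at the object level and rebuild the class raster.

    segment_ids   : 2-D integer array; each cell holds the ID of its image object.
    segment_class : 1-D integer array; segment_class[i] is the class of object i.
    edits         : dict mapping object ID -> corrected class code.
    Returns (class_raster, corrected_segment_class); the inputs are not modified.
    """
    corrected = np.array(segment_class, copy=True)
    for seg_id, new_class in edits.items():
        corrected[seg_id] = new_class
    # Integer-array indexing looks up every cell's object class in one step.
    return corrected[segment_ids], corrected


segment_ids = np.array([[0, 0, 1],
                        [2, 2, 1]])
segment_class = np.array([GRASS, SHRUB, BUILDING])
# The analyst recognizes object 1 as the low edge of a tree crown, not a shrub.
raster, classes = apply_manual_edits(segment_ids, segment_class, {1: TREE})
print(raster)
```

One dictionary entry relabels both cells of object 1; in a pixel-based map the analyst would have to edit each affected pixel individually.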
It is entirely appropriate to capitalize on the intimate connection between operator and computer that is fundamental to the OBIA method, especially considering that this procedure has no major implications for accuracy but does improve the quality of the classification.
Finally, an accuracy assessment is a requirement of any LULC classification project. We have found the methods presented in the book by Congalton and Green,57 which draw on highly regarded sources in the remote sensing community, to be of the highest standard. There are many ways to conduct an accuracy assessment, and the appropriate approach will vary with the location and scope of the project and the type and resolution of the datasets used. Although no industry standard has been set, 85% seems to be the most widely accepted accuracy level,1,57 even though there is nothing inherently significant about this number and the threshold continues to be debated.69,70 Furthermore, methods for conducting a statistically sound accuracy assessment of OBIA-based classifications are still being developed and refined, because differing accuracy levels can result from using points versus polygons (i.e., objects),71 from uncertainty about object boundary locations on the ground,72 and from the goodness of fit of object segment area and location.73 Last, ground truthing an accuracy assessment in urban areas can be quite problematic because a large proportion of urban land is private property, which makes access to randomly generated ground reference points a major obstacle. In these cases, it is often necessary to substitute very high spatial resolution imagery for a ground truth survey.15,17,23
4. Conclusions
This study quantifies the additional benefit of LiDAR data and manual postclassification, information that is important for the initial planning of LULC mapping in urban areas.
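The overall and producer’s accuracies referred to in the accuracy assessment discussion and in these conclusions are both computed from an error (confusion) matrix. As a minimal Python sketch, the function below follows the common convention of rows as map classes and columns as reference classes; the three-class matrix is invented purely for illustration and is not data from this study, and the 0.85 comparison simply mirrors the commonly cited, and debated, 85% target.

```python
import numpy as np


def accuracy_summary(error_matrix):
    """Overall, producer's, and user's accuracy from a square error matrix.

    Assumed layout: rows = classified (map) labels, columns = reference labels.
    """
    m = np.asarray(error_matrix, dtype=float)
    correct = np.diag(m)
    overall = correct.sum() / m.sum()
    producers = correct / m.sum(axis=0)  # per reference class; 1 - value = omission error
    users = correct / m.sum(axis=1)      # per map class; 1 - value = commission error
    return overall, producers, users


# Invented counts for three classes (tree, grass, building); not results from this study.
matrix = [[45, 5, 2],
          [4, 38, 1],
          [1, 2, 52]]
overall, producers, users = accuracy_summary(matrix)
print(f"overall accuracy: {overall:.3f}")
print("producer's:", np.round(producers, 3))
print("user's:", np.round(users, 3))
print("meets 85% target:", bool(overall >= 0.85))
```

Reporting producer’s and user’s accuracy alongside overall accuracy shows which classes drive the total; a single overall figure can hide a poorly mapped minority class.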
Data acquisition costs are an important factor when planning an LULC classification, and jurisdictions may have trouble justifying the high cost of acquiring LiDAR data. Combining discrete-point, high density aerial LiDAR data with OBIA-based classification methods has been shown to improve LULC classification accuracy and is especially suitable for distinguishing between trees, shrubs, and grasses, and between buildings and ground impervious surfaces.31,33 Results from this study corroborate those findings and indicate that LiDAR data do contribute to improved overall and class accuracies. When LiDAR data were added to our LULC classification, overall accuracy increased by 5%. Substantial increases in producer’s accuracy were observed in the grass, building, and ground impervious classes. The addition of LiDAR data also enabled us to divide trees and shrubs into separate classes, which resulted in a higher level of detail. Manual postclassification also improved both overall accuracy and the visual appeal of the final product. Image interpretation is integral to the OBIA method, promoting an intimate connection between operator and machine. It is thus appropriate to capitalize on these strengths to correct obvious classification errors during postclassification, since this procedure does not have major implications for accuracy but does improve the quality of the classification. It would take much more time and effort to train the algorithm to perform as well.
Acknowledgments
Funding for this research was provided through a Joint Venture Agreement between the University of Washington (UW) Remote Sensing and Geospatial Analysis Laboratory and the USDA Forest Service Pacific Northwest Research Station, as well as the UW Precision Forestry Cooperative through Corkery Family Chair Fellowships. We also thank the City of Olympia for providing the 2008 Olympia imagery and LiDAR datasets, flown by Watershed Sciences. Finally, we thank the anonymous reviewers, who helped us improve this paper.
References
1. J. R. Anderson et al., “A land use and land cover classification system for use with remote sensor data,” Washington, D.C. (1976).
2. G. M. Foody, “Status of land cover classification accuracy assessment,” Remote Sens. Environ. 80(1), 185–201 (2002). http://dx.doi.org/10.1016/S0034-4257(01)00295-4
3. J. T. Kerr and M. Ostrovsky, “From space to species: ecological applications for remote sensing,” Trends Ecol. Evol. 18(6), 299–305 (2003). http://dx.doi.org/10.1016/S0169-5347(03)00071-5
4. A. S. Antonarakis, K. S. Richards, and J. Brasington, “Object-based land cover classification using airborne LiDAR,” Remote Sens. Environ. 112(6), 2988–2998 (2008). http://dx.doi.org/10.1016/j.rse.2008.02.004
5. Y. Chen et al., “Hierarchical object oriented classification using very high resolution imagery and LIDAR data over urban areas,” Adv. Space Res. 43(7), 1101–1110 (2009). http://dx.doi.org/10.1016/j.asr.2008.11.008
6. C. Cleve et al., “Classification of the wildland-urban interface: a comparison of pixel- and object-based classifications using high-resolution aerial photography,” Comput. Environ. Urban Syst. 32(4), 317–326 (2008). http://dx.doi.org/10.1016/j.compenvurbsys.2007.10.001
7. L. Guo et al., “Relevance of airborne lidar and multispectral image data for urban scene classification using Random Forests,” ISPRS J. Photogramm. 66(1), 56–66 (2011). http://dx.doi.org/10.1016/j.isprsjprs.2010.08.007
8. G. Duveiller et al., “Deforestation in Central Africa: estimates at regional, national and landscape levels by advanced processing of systematically-distributed Landsat extracts,” Remote Sens. Environ. 112(5), 1969–1981 (2008). http://dx.doi.org/10.1016/j.rse.2007.07.026
9. C. Song, “Spectral mixture analysis for subpixel vegetation fractions in the urban environment: how to incorporate endmember variability?,” Remote Sens. Environ. 95(2), 248–263 (2005). http://dx.doi.org/10.1016/j.rse.2005.01.002
10. T. R. Tooke et al., “Extracting urban vegetation characteristics using spectral mixture analysis and decision tree classifications,” Remote Sens. Environ. 113(2), 398–407 (2009). http://dx.doi.org/10.1016/j.rse.2008.10.005
11. A. S. Antonarakis, “Evaluating forest biometrics obtained from ground lidar in complex riparian forests,” Remote Sens. Lett. 2(1), 61–70 (2011). http://dx.doi.org/10.1080/01431161.2010.493899
12. T. Blaschke, “Object based image analysis for remote sensing,” ISPRS J. Photogramm. 65(1), 2–16 (2010). http://dx.doi.org/10.1016/j.isprsjprs.2009.06.004
13. Y. Ke, L. J. Quackenbush, and J. Im, “Synergistic use of QuickBird multispectral imagery and LIDAR data for object-based forest species classification,” Remote Sens. Environ. 114(6), 1141–1154 (2010). http://dx.doi.org/10.1016/j.rse.2010.01.002
14. L. M. Moskal, D. M. Styers, and M. A. Halabisky, “Monitoring urban tree cover using object-based image analysis and public domain remotely sensed data,” Remote Sens. 3(10), 2243–2262 (2011). http://dx.doi.org/10.3390/rs3102243
15. R. V. Platt and L. Rapoza, “An evaluation of an object-oriented paradigm for land use/land cover classification,” Prof. Geogr. 60(1), 87–100 (2008). http://dx.doi.org/10.1080/00330120701724152
16. W. Zhou and A. Troy, “An object-oriented approach for analysing and characterizing urban landscape at the parcel level,” Int. J. Remote Sens. 29(11), 3119–3135 (2008). http://dx.doi.org/10.1080/01431160701469065
17. W. Zhou and A. Troy, “Development of an object-based framework for classifying and inventorying human-dominated forest ecosystems,” Int. J. Remote Sens. 30(23), 6343–6360 (2009). http://dx.doi.org/10.1080/01431160902849503
18. W. Zhou, A. Troy, and J. M. Grove, “Object-based land cover classification and change analysis in the Baltimore metropolitan area using multitemporal high resolution remote sensing data,” Sensors 8(3), 1613–1636 (2008). http://dx.doi.org/10.3390/s8031613
19. M. E. Newman, K. P. Mclaren, and B. S. Wilson, “Comparing the effects of classification techniques on landscape-level assessments: pixel-based versus object-based classification,” Int. J. Remote Sens. 32(14), 4055–4073 (2011). http://dx.doi.org/10.1080/01431161.2010.484432
20. L. D. Robertson and D. J. King, “Comparison of pixel- and object-based classification in land cover change mapping,” Int. J. Remote Sens. 32(6), 1505–1529 (2011). http://dx.doi.org/10.1080/01431160903571791
21. Y. H. Araya and P. Cabral, “Analysis and modeling of urban land cover change in Setúbal and Sesimbra, Portugal,” Remote Sens. 2(6), 1549–1563 (2010). http://dx.doi.org/10.3390/rs2061549
22. P. Hofmann, J. Strobl, and A. Nazarkulova, “Mapping green spaces in Bishkek—how reliable can spatial analysis be?,” Remote Sens. 3(6), 1088–1103 (2011). http://dx.doi.org/10.3390/rs3061088
23. R. Pu, S. Landry, and Q. Yu, “Object-based urban detailed land cover classification with high spatial resolution IKONOS imagery,” Int. J. Remote Sens. 32(12), 3285–3308 (2011). http://dx.doi.org/10.1080/01431161003745657
24. D. Stow et al., “Object-based classification of residential land use within Accra, Ghana based on QuickBird satellite data,” Int. J. Remote Sens. 28(22), 5167–5173 (2007). http://dx.doi.org/10.1080/01431160701604703
25. M. G. Tewolde and P. Cabral, “Urban sprawl analysis and modeling in Asmara, Eritrea,” Remote Sens. 3(10), 2148–2165 (2011). http://dx.doi.org/10.3390/rs3102148
26. J. S. Walker, J. M. Briggs, and T. Blaschke, “Object-based land-cover classification for the Phoenix metropolitan area: optimization vs. transportability,” Int. J. Remote Sens. 29(7), 2021–2040 (2008). http://dx.doi.org/10.1080/01431160701408337
27. Q. Weng, “Remote sensing of impervious surfaces in the urban areas: requirements, methods, and trends,” Remote Sens. Environ. 117, 34–49 (2012). http://dx.doi.org/10.1016/j.rse.2011.02.030
28. D. M. Styers et al., “Developing a land-cover classification to select indicators of forest ecosystem health in a rapidly urbanizing landscape,” Landscape Urban Plann. 94(3–4), 158–165 (2010). http://dx.doi.org/10.1016/j.landurbplan.2009.09.006
29. K. B. Jones et al., “An ecological assessment of the United States mid-Atlantic region: a landscape atlas,” 72 (1997).
30. D. M. Styers et al., “Scale matters: indicators of ecological health along the urban–rural interface near Columbus, Georgia,” Ecol. Indic. 10(2), 224–233 (2010). http://dx.doi.org/10.1016/j.ecolind.2009.04.018
31. P. M. Dare, “Shadow analysis in high-resolution satellite imagery of urban areas,” Photogramm. Eng. Remote Sens. 71(2), 169–177 (2005). http://dx.doi.org/10.14358/PERS.71.2.169
32. R. Mathieu, J. Aryal, and A. K. Chong, “Object-based classification of Ikonos imagery for mapping large-scale vegetation communities in urban areas,” Sensors 7(11), 2860–2880 (2007). http://dx.doi.org/10.3390/s7112860
33. J. O’Neil-Dunne et al., “Object-based high-resolution land-cover mapping operational considerations,” in 17th Int. Conf. on Geoinformatics, 1–6 (2009).
34. S. Tuominen and A. Pekkarinen, “Performance of different spectral and textural aerial photograph features in multi-source forest inventory,” Remote Sens. Environ. 94(2), 256–268 (2005). http://dx.doi.org/10.1016/j.rse.2004.10.001
35. K. A. Hartfield, K. I. Landau, and W. J. D. van Leeuwen, “Fusion of high resolution aerial multispectral and LiDAR data: land cover in the context of urban mosquito habitat,” Remote Sens. 3(11), 2364–2383 (2011). http://dx.doi.org/10.3390/rs3112364
36. M. A. Lefsky et al., “Lidar remote sensing for ecosystem studies,” BioScience 52(1), 19–30 (2002). http://dx.doi.org/10.1641/0006-3568(2002)052[0019:LRSFES]2.0.CO;2
37. S. E. Reutebuch, H.-E. Andersen, and R. J. McGaughey, “Light detection and ranging (LIDAR): an emerging tool for multiple resource inventory,” J. For. 103(6), 286–292 (2005).
38. M. E. Hodgson et al., “An evaluation of lidar-derived elevation and terrain slope in leaf-off conditions,” Photogramm. Eng. Remote Sens. 71(7), 817–823 (2005). http://dx.doi.org/10.14358/PERS.71.7.817
39. G. Chen et al., “A multiscale geographic object-based image analysis to estimate lidar-measured forest canopy height using Quickbird imagery,” Int. J. Geogr. Inf. Sci. 25(6), 877–893 (2011). http://dx.doi.org/10.1080/13658816.2010.496729
40. J. J. Richardson and L. M. Moskal, “Strengths and limitations of assessing forest density and spatial configuration with aerial LiDAR,” Remote Sens. Environ. 115(10), 2640–2651 (2011). http://dx.doi.org/10.1016/j.rse.2011.05.020
41. A. A. Sullivan et al., “Object-oriented classification of forest structure from light detection and ranging data for stand mapping,” Western J. Appl. For. 24(4), 198–204 (2009).
42. T. C. Weber and W. L. Allen, “Beyond on-site mitigation: an integrated, multi-scale approach to environmental mitigation and stewardship for transportation projects,” Landscape Urban Plann. 96(4), 240–256 (2010). http://dx.doi.org/10.1016/j.landurbplan.2010.04.003
43. T. Hermosilla et al., “Evaluation of automatic building detection approaches combining high resolution images and LiDAR data,” Remote Sens. 3(6), 1188–1210 (2011). http://dx.doi.org/10.3390/rs3061188
44. M. Voss and R. Sugumaran, “Seasonal effect on tree species classification in an urban environment using hyperspectral data, LiDAR, and an object-oriented approach,” Sensors 8(5), 3020–3036 (2008). http://dx.doi.org/10.3390/s8053020
45. M. Halabisky, L. M. H. Moskal, and A. Sonia, “Object-based classification of semi-arid wetlands,” J. Appl. Remote Sens. 5(1), 053511 (2011). http://dx.doi.org/10.1117/1.3563569
46. L. M. Moskal and M. E. Jakubauskas, “Monitoring post disturbance forest regeneration with hierarchical object-based image analysis,” Forests 4(4), 808–829 (2013). http://dx.doi.org/10.3390/f4040808
47. L. M. Moskal, D. M. Styers, and J. Kirsch, “Project Report: Tacoma Canopy Cover Assessment,” University of Washington, Remote Sensing and Geospatial Analysis Laboratory, 7 (2011).
48. J. J. Richardson and L. M. Moskal, “Uncertainty in urban forest canopy assessment: lessons from Seattle, WA, USA,” Urban For. Urban Greening 13(1), 152–157 (2014). http://dx.doi.org/10.1016/j.ufug.2013.07.003
49. Washington State Department of Ecology, “Saving Puget Sound,” http://www.ecy.wa.gov/puget_sound/overview.html (February 2014).
50. R. Haugerud et al., “A proposed specification for lidar surveys in the Pacific Northwest,” Puget Sound Lidar Consortium, 12 (2008).
51. R. J. McGaughey, “FUSION: Software for LIDAR Data Analysis and Visualization,” USDA Forest Service, Pacific Northwest Research Station, 31 (2008).
52. S. E. Reutebuch et al., “Accuracy of a high-resolution LIDAR terrain model under a conifer forest canopy,” Can. J. Remote Sens. 29(5), 527–535 (2003). http://dx.doi.org/10.5589/m03-022
53. L. Draguţ, D. Tiede, and S. R. Levick, “ESP: a tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data,” Int. J. Geogr. Inf. Sci. 24(6), 859–871 (2010). http://dx.doi.org/10.1080/13658810903174803
54. J. Im, J. R. Jensen, and J. A. Tullis, “Object-based change detection using correlation image analysis and image segmentation,” Int. J. Remote Sens. 29(2), 399–423 (2008). http://dx.doi.org/10.1080/01431160601075582
55. A. Smith, “Image segmentation scale parameter optimization and land cover classification using the Random Forest algorithm,” J. Spatial Sci. 55(1), 69–79 (2010). http://dx.doi.org/10.1080/14498596.2010.487851
56. G. M. Foody, “Assessing the accuracy of remotely sensed data: principles and practices,” Photogramm. Rec. 25(130), 204–205 (2010). http://dx.doi.org/10.1111/phor.2010.25.issue-130
57. R. G. Congalton and K. Green, Assessing the Accuracy of Remotely Sensed Data: Principles and Practices, CRC Press, Taylor & Francis Group, Boca Raton, Florida (2009).
58. D. Leckie et al., “Combined high-density lidar and multispectral imagery for individual tree crown analysis,” Can. J. Remote Sens. 29(5), 633–649 (2003). http://dx.doi.org/10.5589/m03-024
59. A. Kato et al., “Capturing tree crown formation through implicit surface reconstruction using airborne lidar data,” Remote Sens. Environ. 113(6), 1148–1162 (2009). http://dx.doi.org/10.1016/j.rse.2009.02.010
60. P. Rönnholm et al., “Calibration of laser-derived tree height estimates by means of photogrammetric techniques,” Scand. J. For. Res. 19(6), 524–528 (2004). http://dx.doi.org/10.1080/02827580410019436
61. B. St-Onge et al., “Mapping canopy height using a combination of digital stereo-photogrammetry and lidar,” Int. J. Remote Sens. 29(11), 3343–3364 (2008). http://dx.doi.org/10.1080/01431160701469040
62. A. F. Habib, E.-M. Kim, and C.-J. Kim, “New methodologies for true orthophoto generation,” Photogramm. Eng. Remote Sens. 73(1), 25–36 (2007). http://dx.doi.org/10.14358/PERS.73.1.25
63. J. Rau, N. Chen, and L. Chen, “True orthophoto generation of built-up areas using multi-view image,” Photogramm. Eng. Remote Sens. 68(6), 581–588 (2002).

64. G. Zhou et al., “A comprehensive study on urban true orthorectification,” IEEE Trans. Geosci. Remote Sens. 43(9), 2138–2147 (2005). http://dx.doi.org/10.1109/TGRS.2005.848417

65. “Geospatial Data Gateway,” http://datagateway.nrcs.usda.gov/ (January 2014).
66. “Center for LIDAR Information Coordination and Knowledge,” http://lidar.cr.usgs.gov/ (January 2014).
67. C. Wu, “Normalized spectral mixture analysis for monitoring urban composition using ETM+ imagery,” Remote Sens. Environ. 93(4), 480–492 (2004). http://dx.doi.org/10.1016/j.rse.2004.08.003
68. “Geospatial Canopy Cover Assessment Workbook,” http://depts.washington.edu/rsgalwrk/canopy/ (January 2014).
69. K. S. Fassnacht, W. B. Cohen, and T. A. Spies, “Key issues in making and using satellite-based maps in ecology: a primer,” Forest Ecol. Manage. 222(1–3), 167–181 (2006). http://dx.doi.org/10.1016/j.foreco.2005.09.026
70. G. M. Foody, “Harshness in image classification accuracy assessment,” Int. J. Remote Sens. 29(11), 3137–3158 (2008). http://dx.doi.org/10.1080/01431160701442120
71. D. Liu and F. Xia, “Assessing object-based classification: advantages and limitations,” Remote Sens. Lett. 1(4), 187–194 (2010). http://dx.doi.org/10.1080/01431161003743173
72. F. Albrecht, S. Lang, and D. Hölbling, “Spatial accuracy assessment of object boundaries for object-based image analysis,” in GEOBIA 2010: Geographic Object-Based Image Analysis, Int. Society for Photogrammetry and Remote Sensing, Ghent, Belgium, 6 (2010).
73. N. Clinton et al., “Accuracy assessment measures for object-based image segmentation goodness,” Photogramm. Eng. Remote Sens. 76(3), 289–299 (2010). http://dx.doi.org/10.14358/PERS.76.3.289

Biography
Diane M. Styers is an assistant professor in the Department of Geosciences and Natural Resources at Western Carolina University. She received her MA in geography from Georgia State University in 2005 and her PhD in forestry from Auburn University in 2008, and she completed a postdoc in the University of Washington Remote Sensing and Geospatial Analysis Laboratory in 2011. Her research involves using remote sensing to analyze changes in ecosystem structure and function, particularly in response to disturbances.
L. Monika Moskal is an associate professor in the School of Environmental and Forest Sciences at the University of Washington and principal investigator in the Remote Sensing and Geospatial Analysis Laboratory. She received her MS and PhD in geography from the University of Calgary (2000) and the University of Kansas (2005), respectively. Her research involves developing tools to analyze remotely sensed data by exploiting the spatial, temporal, and spectral capabilities of the data and using them to investigate landscape structure.
Jeffrey J. Richardson is a postdoc in the Remote Sensing and Geospatial Analysis Laboratory at the University of Washington. He received his BS in creative writing and biology from Kalamazoo College in 2003, his MS in remote sensing from the University of Washington in 2008, and his PhD in interdisciplinary science from the University of Washington in 2011. His research involves solving problems related to forests, energy, and food with remote sensing and geospatial analysis.
Meghan A. Halabisky is a PhD student in the Remote Sensing and Geospatial Analysis Laboratory at the University of Washington, where she is advised by Dr. L. Monika Moskal. She completed concurrent degrees, a master of science and a master of public affairs, in 2010 at the University of Washington. Her research interests include the development of high resolution remote sensing techniques for spatiotemporal analysis of wetland dynamics, landscape change, and climate change impacts.