|

|

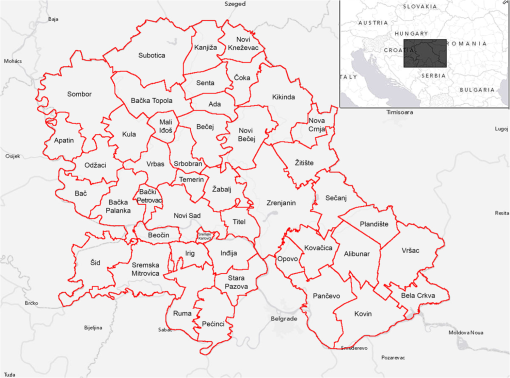

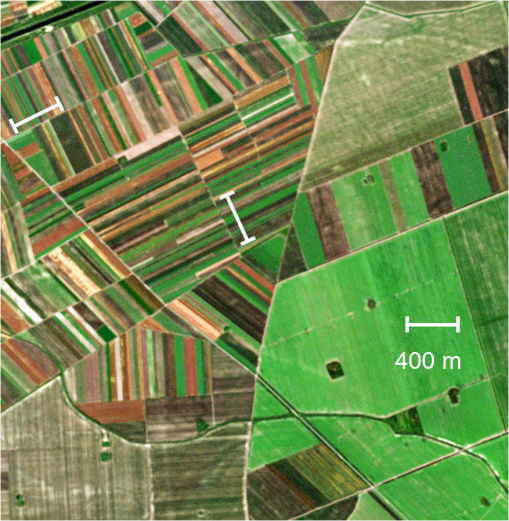

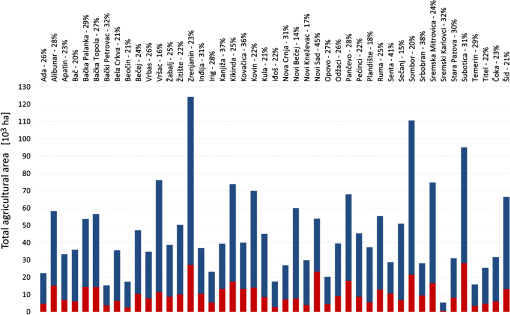

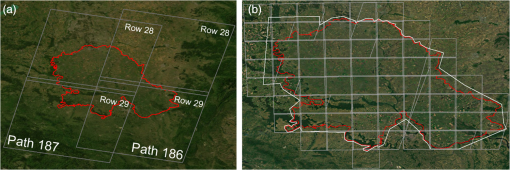

1.IntroductionThe automatic cropland classification based on the data from spaceborne imagery is one of the most important sources of valuable information about the composition and the development of a variety of crops grown in different agricultural regions around the world. A general goal is to be able to estimate the area of specific crops,1,2 monitor their health, and predict their yield.3,4 A fundamental task in such applications is to determine the type of crop that is grown on a specific stretch of land.5–7 The result is that the cropland classification has a significant role in the proper monitoring and management of land use on a local as well as a global level and represents an important factor in the overall agricultural policy making.8 Therefore, it is essential to make such tools more accessible to different parties involved in the agricultural market and offer them the expertise acquired from the case studies of their deployment and development. The recent advances in the satellite imaging technology provide researchers and practitioners with ever more data in both quantitative and qualitative ways. This opens new opportunities for the extraction of meaningful and useful information, but it also creates new challenges regarding the choice and the development of appropriate methods for their processing. While satellite imagery for crop identification and crop-covered area estimation is a practice with more than 30 years of tradition,9,10 the sensors have only recently gained resolution that allows for precision monitoring in the case of agriculture practices based on small land parcels.6,11,12 The fact that most of these satellite imagery systems are commercial represents one of the obstacles for their widespread use and produces the need for the effective and efficient use of a vast amount of freely available data, like the high-quality data already available through the Landsat program13 or the data that are planned to be published under similar terms by the upcoming Sentinel missions.14 In line with these efforts, this paper presents a pixel-based cropland classification study that utilizes a time series of multispectral images with different properties which were acquired at different resolutions by different imaging instruments—Landsat-815 and RapidEye.16 It also explores the capabilities of the proposed data fusion method for their combination with the aim of overcoming the shortcomings of different instruments in the particular cropland classification scenario characterized by the specific size of crop fields over the chosen agricultural region situated in the plains of Vojvodina in northern Serbia (southeastern Europe). It can be said that this scenario, where agriculture is based on very small areas dedicated to specific crops, is quite common in different parts of the world. One of the problems that arises is the presence of crop fields with very small areas and small dimensions compared to the spatial resolution of the freely available multispectral imagery. Additionally, if there is no available information about the crop field boundaries or cadastral data (such as in this study) which would enable the application of an object-based classification approach, it is of great significance to develop methods that effectively exploit available data and provide an improvement over the existing pixel-based classification approaches through the combination of different data sources. Therefore, this paper proposes a data fusion method that is successfully utilized in combination with a robust random forest classifier17 in improving overall classification performance, as well as in enabling application of satellite imagery with a coarser spatial resolution in the given specific cropland classification task. A random forest classifier was chosen due to its recent use in remote sensing and because it has been accepted as an efficient tool in crop classification.18–20 The proposed method exploits different spectral and spatial resolutions of two different data sources in order to mitigate the described problem. Through feature-level fusion where composite features are extracted from all available multisensor data,21 a data integration, which is considered as a low-level data fusion, is employed. It addresses the problem by using one mosaic multispectral image of the observed area, which is formed by mosaicking images acquired in the short time interval by the constellation of commercial satellites with a higher spatial resolution as an addition to the freely available Landsat-8 multispectral time series with a coarser spatial resolution which is acquired over a longer time interval that covers the phenological development of all observed crop types. This approach is related to a recent study,22 which also tried to exploit the advantages of different data sources, but with application in the detection of vegetation changes. Through data fusion, it extends the applicability of the pixel-based classification using freely available satellite imagery with a coarser spatial resolution to the classification scenarios that demand finer spatial resolution due to the agricultural practices that are characterized by the growth of different crop types on the very small parcels of farmland. 2.Study AreaThe analyses presented in this study were conducted over the region of Vojvodina, with an area of , which is situated in northern Serbia and represents the southern part of the Pannonian Plain spreading over East-Central Europe. It is by far the most important agricultural area in the region23 and presents a major contributor to the gross domestic product of the Republic of Serbia. According to the results of the conducted census of agriculture23 which included 45 municipalities in Vojvodina as shown in Fig. 1, there were 1,606,896 ha of utilized agricultural area, from which 1,466,176 ha are arable land, while the rest are pastures and meadows. It is a highly fertile soil (Chernozem) with a moderate continental climate that is suitable for the production of a variety of crops. However, 23 of the arable land in 2012 was utilized for the production of five selected crop types, which are the subject of the cropland classification study presented here. Fig. 1Map of the study area (Vojvodina) with borders of municipalities shown on the chart in Fig. 3.  In the beginning of 2013, the Provincial Secretariat for Agriculture, Water Economy and Forestry of Vojvodina decided to support the development of the study that would enable annual mapping of the most common crop types in Vojvodina using remote sensing and the production of an appropriate annual cropland data layer. The aim of the study was to investigate current techniques and their cost, as well as to propose a new low-cost solution that would match the needs of the local provincial government. The main problem that came up from the given constraints was that the agricultural practice that has been employed in this region over a very long period of time (many decades) imposed the requirement to use the high-resolution satellite imagery as part of a possible solution. Namely, almost all agricultural holdings in this region are family holdings,23 and the key characteristic of employed agricultural practice is that a significant number of farmlands and fields have a very small size. Furthermore, on adjacent fields, different crop types are usually grown, while crop rotation is also present. This agricultural practice is very common across different parts of Europe and an example from Vojvodina is given in Fig. 2. Fig. 2Illustration of crop field sizes in Vojvodina as a consequence of agricultural policy—standard length of fields is 400 m, while the width can be as small as 10 m (image source: RapidEye, near Kucura, June 2013). The same area is also used for visualization of classification results in Fig. 5.  The small sizes of the fields and their variability as such should not present a problem for pixel-based classification, but since high-resolution multispectral images of the described region were not freely available, the cost factor associated with them became the major issue. Additionally, the selected crop types have misaligned phenological development, which means that the multitemporal classification approach that utilizes an image time series is necessary, making the cost of the classification system even higher. One possible solution was to efficiently exploit the currently available free satellite imagery, which is provided by the United States Geological Survey and their recently launched Landsat-815 satellite. Since the standard length of crop fields in Vojvodina is 400 m, and the spatial resolution of the Landsat-8 images is 30 m, the use of Landsat-8 time series as the primary and only source of data would mean that all crop parcels smaller than (60 m width) would be wide, which would make the classification of such pixels very unreliable. If this situation would appear only sporadically, this kind of error would be perhaps acceptable. However, as shown in Fig. 3, which illustrates the distribution of crop fields over the municipalities in Vojvodina (according to the official data available in Ref. 24), there is a significant number of crop fields that are in this category—on average, . Therefore, the issue of an insufficient spatial resolution cannot be neglected. As a result, Landsat-8, such as it is, cannot be fully utilized in the particular case of the described agricultural practices and employment of imagery with higher spatial resolution is necessary. Fig. 3Distribution of crop fields smaller than 3 ha over the 45 municipalities in Vojvodina listed above. Each bar (red+blue) represents the total agricultural area in the particular municipality in thousands of hectares, while the red part represents the portion of fields , given also in % above the chart. The chart was produced using the official census data.24  Although sensors with high spatial resolution onboard some other commercial satellites, like RapidEye or SPOT, are more appropriate with respect to small field sizes, spectral characteristics of the Landsat-8 imaging instruments are better tuned for the applications like cropland classification. However, this only holds when the fields are large enough. In the given situation with a huge number of fields , it was decided that an additional complementary source of information in the form of commercial high-resolution multispectral satellite imagery should be used. However, there were some recent studies25,26 that were aimed at determining the adequate spatial resolution of multispectral images for supervised crop classification depending on the desired level of classification accuracy and other performance criteria. Their results showed that although a small pixel size is favorable in the case of small fields, it can increase within-class variability. As has been already said, due to the misaligned phenological phases, multispectral time series are needed to enable classification of selected crop types (when winter wheat was to be harvested, corn and soybean were at very early stage—third week of June). The price of the most affordable satellite products with adequate spatial and spectral resolution, defined time window of acquisition, and guaranteed percentage of cloud cover in the case of observed study area was euros per acquisition. This would mean that the total price of the time series would be several times higher, which is often not acceptable for small public services with constrained budgets. On the other hand, if there are enough cloud free days during the season, Landsat-8 products alone could be a perfect match for a time series. Therefore, in this paper, we present a study that utilizes a method for fusion of Landsat-8 time series with purchased images of higher spatial resolution from RapidEye, which are acquired in a single data acquisition campaign (one order), i.e., in a single pass of a commercial satellite or in a very short time interval by a constellation of commercial satellites. The study shows that in this way, even in the case of small fields, a very high classification accuracy with a satisfactory spatial resolution can be achieved and that the use of the proposed method is also an affordable way to extend the application of Landsat-8 imagery to the classification problems that usually require data with higher spatial resolution. 3.DataIn this study, multispectral satellite images from two different data sources are utilized. The first consists of a multispectral time series acquired between June and September 2013 over the study area described in Sec. 2 by the Landsat-815 medium-resolution imaging instruments, and the second source is a mosaic of several high-resolution multispectral images taken between the 9th and 22nd of June 2013 by the constellation of commercial RapidEye16 satellites. These two data sources were used separately and combined in the analyzed pixel-based cropland classification task in order to explore their applicability and enhancements arising from the proposed data fusion method. 3.1.Satellite ImagerySimilar to Landsats 4, 5, and 7, Landsat-8 has a 16-day repeat cycle, where data are acquired in 185 km swaths and segmented into scenes defined in the second World-wide Reference System of path (groundtrack parallel) and row (latitude parallel) coordinates. It was launched in February 2013 and it carries two imaging instruments onboard, Operational Land Imager (OLI) and Thermal Infrared Sensor (TIRS) pushbroom sensors, which acquire images in visible, near-infrared (NIR), short-wave, and thermal infrared spectrum with 12 bits of precision. The OLI sensor provides eight different spectral bands with a spatial resolution of 30 m and one panchromatic band with a resolution of 15 m. In addition to the blue, green, red, and NIR bands which are also present onboard the RapidEye satellites, it also has four additional spectral bands. These are deep (coastal) blue, two short-wave infrared (SWIR) bands, and an additional SWIR band for cirrus cloud detection. The coastal blue band (0.43 to ) is intended for improved sensitivity to chlorophyll.15 The TIRS sensor provides emitted radiance in two thermal infrared bands with a spatial resolution of 100 m, but the bands are resampled by cubic convolution to 30 m and coregistered with the 30 m OLI spectral bands. The Landsat-8 images are delivered as level 1 terrain-corrected products. In the case of the presented study area, roughly half of the area belongs to the Landsat path 186 (rows 28 and 29), while the other half belongs to the same rows in path 187, with a significant overlap between the paths, Fig. 4(a). Since the aim was to use the Landsat-8 multispectral time series in a supervised classification task, utilization of Landsat sidelaps, as proposed by Reynold,13 would provide additional time samples in a series. However, a significant part of labeled ground-truth data was in the nonoverlapping parts of paths 186 and 187, so images from these two paths were used separately and independently from each other. Accordingly, in the rest of the paper, datasets consisting of images from paths 186 and 187 (rows 28 and 29) are designated as path186 and path187, respectively. Fig. 4Illustrations of Landsat-8 World-wide Reference System of path and row coordinates over the study area—Vojvodina (a). RapidEye partition of the study area into 69 tiles (b).  The information obtained from both OLI and TIRS sensors, excluding the panchromatic band, i.e., 10 spectral measurements associated with each spatial pixel, were used in all analyses involving Landsat data. The number of images with low cloud coverage in the time series between May (the first month of Landsat-8 providing data for the study area) and September (the end of the last harvest) varied between the two paths—5 and 4 for paths 186 and 187, respectively. Acquisition dates of used Landsat-8 images with a low cloud coverage are given in Table 1. In the case of classifiers based on images from path 186, one extra image acquired in July leads to a better classification performance when compared to the classifiers based only on four Landsat-8 images from path 187, Sec. 5. The images were provided through the Landsat repository maintained by the United States Geological Survey,27 using a very convenient web service.28 Table 1Acquisition dates of Landsat-8 images used in time series.

When it comes to data provided by the constellation of five RapidEye satellites,16,29 multispectral images associated with 69 tiles of the same size into which the study area was partitioned were provided. All partition tiles are shown in Fig. 4(b). The selected imaging time window was during June, just before the harvest of winter wheat. However, corn and soybean were at the early stages of phenological development. Due to the bad weather conditions, the duration of the final image acquisition time interval was five days longer than was initially planned and a higher number of images was taken in order to achieve cloud cover per tile. Although the RapidEye constellation is capable of collecting 4 million of Earth every day, if the time interval between the first and the last image is too long, the spectral signature of the same crop can vary significantly over the interval. The data were delivered as level 3A16 orthorectified products in the form of tiles with a spatial resolution of 5 and 500 m wide sidelaps. Using all available images, after an additional preprocessing step involving atmospheric correction of tiles, a mosaic image of the study area with the guaranteed percent of cloud cover was formed. Parts of the tiles outside the study area were initially removed from the data. All RapidEye satellites have the same orbital plane and they are equipped with identical, equally calibrated imaging instruments. These sensors provide five spectral bands (blue, green, red, red-edge, and NIR) with the same nominal ground sampling resolution of 6.5 m,29 which is enhanced to 5 m after additional processing. The red-edge channel (0.69 to )30 is very sensitive to changes in chlorophyll content,31 which can improve classification performance directly31 or indirectly through the design of new features.32 3.2.Ground-Truth Data Collection CampaignIn order to perform a supervised cropland classification, an appropriately labeled dataset was made through the conducted data collection campaign. The campaign was mostly carried out during June 2013 and it consisted of the work on the ground, where different crop types were manually annotated by the experts from the Extension Service who gathered information in the fields. A certain part of the campaign was completed through an innovative crowdsourcing method based on a specially designed user application implemented on a smartphone platform. Users were sending geo-tagged images of crop in their field via Android application AgroSense.apk, where each image contains the label designating the crop type selected by the user. Images are automatically synchronized with the server where, after verification and parcel plotting, corresponding pixels are added to a reference dataset. In return, contributors would get free access to the products based on the processed satellite images of the same field through the geographic information system portal. The ground-truth data collected from both sources were then consolidated and used for the extraction and labeling of multispectral measurements corresponding to individual pixels, which after this process formed the final labeled dataset used for training and testing of classifiers in different classification scenarios. The process of extraction and labeling of pixels from satellite images, in the case of any of the data sources described in the previous subsection, was performed on the level of individual fields (parcels), i.e., the information about the association of each pixel with the particular field was preserved during the data extraction process in order to avoid the situation that pixels from the same field appear later in both training and test sets. The precise delineation of labeled fields was performed manually by using the collected global positioning system coordinates of each field and creating high-resolution georeferenced RapidEye mosaic image as described in Sec. 3.1. The total number of annotated fields was 1414, selected in such a way to sample as uniformly as possible over the whole study area (Vojvodina) in order to take into account the spatial variability of spectral signatures of the same crop types over the area. During the campaign, parcels with 24 different vegetation categories were annotated Among them were 20 different crop types, while the remaining categories were meadows, pastures, and similar land cover types or other uncategorized cultivated plants. Among the 20 categorized crop types, only 5 had a significant number of samples over the whole study area and were annotated as corn, wheat, soybean, sugar beet, and sunflower, while the parcels corresponding to all the remaining categories were joined together in one separate class and named as “other.” The result is that the final labeled dataset consisted of six different classes, where 1184 parcels correspond to five selected crop types. In order to increase the variability of samples in the class other and better simulate real working conditions, the class other was expanded with 105 additional shapes (polygons) representing vegetation in urban areas and asphalt roads. Categories like forests and various water bodies were excluded from the dataset since they can be easily detected in advance from the high-resolution multispectral images by different methods due to the specific spectral and texture properties of forest areas and the specific spectral response of water. Similarly, the final ground-truth dataset in class other does not contain samples of clouds, since both Landsat-8 and Rapideye products are delivered with cloud cover masks15,16 obtained by the prepocessing of acquired images using some of the supervised classification algorithms: neural networks based expanded automated cloud-cover assessment (ACCA), proposed by Scaramuzza et al.,33 or SPARCS,34 which can be used as an alternative to ACCA. 3.3.Training/Test Sets for Cross-ValidationThe evaluation of the performance of the proposed features and the corresponding classifiers presented in Sec. 4 was, in all cases, performed by the 10-fold cross-validation method utilizing one partition consisting of 10 randomly created nonoverlapping subsets. Each parcel was attributed to only one subset of the partition. In each experiment, nine subsets were used as a single training set, and the remaining subset from the partition was used as the corresponding test set. The final performance is then obtained as an average of 10 independent classification experiments. The reference dataset used in different experimental setups (classification scenarios) was partitioned in such a way that pixels belonging to the same parcel were always kept together. In addition, all parcels in the reference dataset were randomly associated with 10 independent subsets in such a way that the subsets are as uniform as possible regarding the class content at the parcel level. However, due to the variable sizes of parcels, the obtained partition subsets still have different total numbers of pixels. Therefore, subsets were additionally equalized in an iterative manner by exchanging shapes of the same class with the biggest and the smallest number of pixels between the two chosen subsets that have the largest discrepancy in the total numbers of pixels, respectively. This equalization procedure is performed until the standard deviation of the total numbers of pixels per each subset is less than one percent of the average numbers of pixels per subset. As a result, subsets of the partition at the end contain an almost equal number of shapes per each class, as well as the total numbers of pixels, but the distribution of the numbers of pixels between classes is still different among the subsets. This is due to the variable shape sizes and the chosen subset creation criteria, which is chosen in order to increase the number of samples from different shapes, i.e., spatial regions, per class in each subset. In this way, a higher spatial variability of samples is achieved per subset, making them more heterogeneous (difficult) and, consequently, more appropriate for the evaluation of a pixel-based classifiers’ performance over the larger spatial region. 4.MethodologyPrior to classification, a preprocessing of satellite images has been performed with the aim of achieving a reduction in scene-to-scene variability—different physical conditions of the scenes during acquisitions. Therefore, in the cases of both data sources Landsat-8 and RapidEye, the original quantized and calibrated pixel values, i.e., digital numbers, were first converted to the corresponding radiance values and then translated into dimensionless surface reflectance values through the process of atmospheric correction. Consequently, the variations of the scattering and absorbing effects of atmospheric gases and aerosols were reduced. Atmospheric correction was performed with FLAASH procedure based on the MODTRAN radiative transfer model.35 In the case of RapidEye, all 69 image tiles were first atmospherically corrected and subsequently combined into a single mosaic image used in the classifier design. It is this image that was then used in the classifier design. All Landsat-8 images listed in Table 1 had of cloud coverage in the study area and were included in experiments. Atmospheric correction of Landsat-8 images was performed on bands 1 to 7 of the OLI sensor, while bands 8 and 9 were converted to the top-of-atmosphere reflectance. For paths 186 and 187, described in Sec. 3, two separate classifiers were created in all classification scenarios. This was the case in experiments utilizing only Landsat-8 data as well as in experiments based on the proposed method for fusion of Landsat-8 and RapidEye data. Landsat-8 images from the same path but taken at different points in time (parts of the same time series) were considered as independent sources of information. The usefulness of the Landsat-8 panchromatic band for crop classification was also investigated through the set of experiments based only on Landsat-8 data in which all 30 m Landsat-8 OLI bands were jointly pan-sharpened to 15 m by using the Gram-Schmidt orthogonalization algorithm.36 TIRS thermal bands were not pan-sharpened, but only upsampled to 15 m resolution. In addition, the panchromatic band was also used as an input feature, which was not the case in the experiments with the original 30 m Landsat-8 bands. The feature vectors for pixel-based cropland classification using different types of input data were created in the following way. In the case of classification scenarios including only Landsat-8 data with the original 30 m resolution, the feature vector is formed through concatenation of 10 multispectral measurements from the time series, which correspond to the same spatial pixel. This results in a 40-dimensional feature vector in the case of 30 m Landsat path187 and a 50-dimensional feature vector in the case of 30 m Landsat path186 due to four and five Landsat-8 images in each time series, respectively. When it comes to 15 m pan-sharpened classification including only Landsat-8 data, the feature vector contains 11 multispectral measurements from each image in the time series. This results in a 44-dimensional feature vector in the case of 15 m Landsat path187 and a 55-dimensional feature vector in the case of 15 m Landsat path186. The values in feature vectors are first ordered according to the position of the corresponding Landsat-8 images in the time series, and then the values corresponding to individual spectral measurements at different wavelengths (which are extracted from each image in time series) are ordered in the same way as the spectral bands in the original Landsat-8 image. In case of a single mosaic RapidEye image, the feature vector consists of 45 spectral measurements per pixel. These values correspond to five spectral measurements of the center pixel and its eight closest neighbors ( neighborhood), which gives a total of nine five-dimensional measurements at nine spatially close locations. On the contrary, in the case of Landsat-8, the same neighborhood approach was not used due to the coarse spatial resolution. In classification scenarios based on the fusion of 30 m Landsat-8 and 5 m RapidEye data, one feature vector was formed for each spatial pixel. This was performed by concatenating feature vectors described in scenarios involving only the 30 m Landsat-8 time series with the 45-dimensional RapidEye feature vector involving a neighborhood. In order to combine data at the same spatial resolution and provide spatial alignment, Landsat-8 images were upsampled by a factor of 36 using the nearest-neighbor interpolation method, which is the most appropriate choice in this case. This method maintains the original crop structure without unnecessary degradation of the field borders that would be introduced by more sophisticated interpolation methods. By doing so, we created a 85-dimensional feature vector for path 187 and a 95-dimensional feature vector for path 186. After extensive simulations and numerous experiments, seven experiments are presented here in total to evaluate the performance of the proposed system.

We also investigated the possibility of combining 15 m pan-sharpened Landsat-8 with 5 m RapidEye data using the proposed fusion method, but the results of the experiments showed it to be inferior to fusion with the 30 m Landsat-8 data. The classifier that was used in all experiments was random forest.17 It belongs to nonparametric ensemble learning methods, which boost diversity among the models in an ensemble through random sampling of the data space. Diversity is achieved by random selection of both samples and features. Random forest uses decision trees as the base classifier. There is no pruning of the constructed decision trees, so it can be said that the final decision trees are partially overfitted to their own bootstrap sample of the data. The second aspect of the ensemble diversity is achieved through random selection of features. This means that at each branch in the particular tree, the decision of which feature to split on is restricted to a random subset of all features. The size of a random subset is predefined in advance, but a new subset is always formed for each branching point. The size of the subset is usually taken to be , where is the dimensionality of the feature space. The random forest classifier was chosen due to its good properties18 and widespread application in remote sensing tasks, like land cover37 as well as cropland18–20,38,39 classification based on different types of data. 5.Experimental Results and DiscussionExperiments were performed by using MATLAB® programming language and Waikato Environment for Knowledge Discovery (Weka) library,40 which is an open-source data mining and machine learning tool. MATLAB® was used for labeling ground-truth data, extraction of the feature vectors, and creation of training and test sets, while the implementation of random forest classifier17 from Weka was used in all classification experiments. Tables 2Table 3Table 4Table 5Table 6Table 7–8 provide accuracy assessment matrices and performance statistics for different classification scenarios (the values typeset in bold represent the numbers of correctly classified instances). The accuracy assessment matrix (confusion matrix) is a representation of misclassification errors and ideally contains nonzero values only on the main diagonal. The values outside the diagonal show the number of pixels of a class indicated on the top (reference data) that have been labeled by the classification algorithm as the class indicated in the left row (classified data). At the bottom of each table, the overall performance of a classifier is presented by using two aggregating measures: overall accuracy of the classification and Cohen’s kappa coefficient.41–43 The kappa coefficient is computed by subtracting the agreement expected by chance from the observed agreement and dividing the result by the maximum possible agreement. In addition, values given in the right-most column represent the precision (user’s accuracy) per class, while the bottom row contains hit rates or recalls, i.e., producer’s accuracies. In order to statistically test the significance of differences in classifcation accuracies expressed by tables representing different experiments, an appropriate test43 based on statistics of Coehn’s kappa coefficients was performed between all pairs of experiments (two set of pairs were constructed, one for each reference dataset—path186 and path187). The results of tests with a 95% confidence interval were positive in all cases, i.e., they confirmed that there are statistically significant differences between the results of different experiments based on the same reference dataset. Table 2Accuracy assessment matrix for classifier using only 30 m Landsat-8 data, path 186.

Table 3Accuracy assessment matrix for classifier using only 30 m Landsat-8 data, path 187.

Table 4Accuracy assessment, classifier using only 15 m pan-sharpened Landsat-8 data, path 186.

Table 5Accuracy assessment, classifier using only 15 m pan-sharpened Landsat-8 data, path 187.

Table 6Accuracy assessment matrix for classifier using only RapidEye data.

Table 7Classifier based on proposed method using 5 m RapidEye and 30 m Landsat-8, path 186.

Table 8Classifier based on proposed method using 5 m RapidEye and 30 m Landsat-8, path 187.

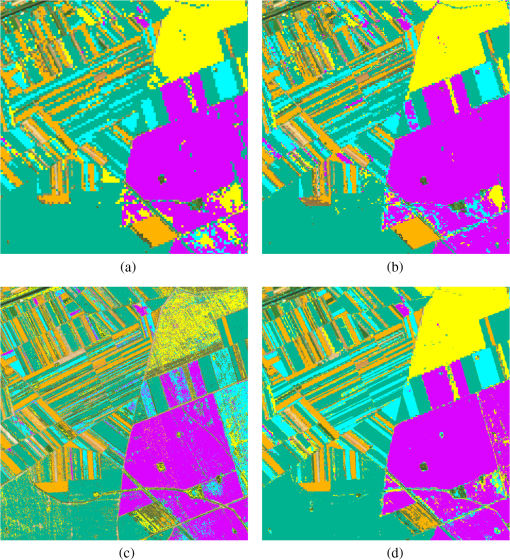

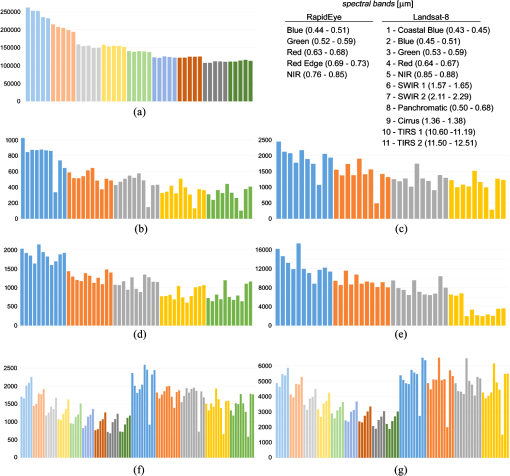

It can be observed that the proposed data fusion method has a similar performance in comparison to the classifier based only on the 30 m Landsat-8 time series for path186, while it shows a significant improvement in the case of path187. However, pure 30 m Landsat-8 has inadequate spatial resolution for small crop fields, as described in Sec. 2, as of arable land in the study area is composed of small parcels below its spatial resolution. An attempt to improve the Landsat-8 resolution through pan-sharpening to 15 m deteriorated its classification performance, while the achieved resolution is still not comparable with 5 m resolution of the proposed data fusion method. In addition, results in Table 6 show that classifier based only on a single RapidEye mosaic image composed of images acquired in a short time interval that is only a very small part of a total time period covering phenological development of all selected crop types, exhibits a poor quality and cannot be used as a reliable source of information for further analyses. When it comes to analysis of classification results for individual crops, all classifiers showed a similar behavior. Crops that are the easiest to distinguish are sugar beets and corn. The hardest crops to classify are soybean and sunflowers, which are often confused with each other and with corn. The class other is one that represents a mixture of a variety of agricultural crops; a good generalization in the case of this class is hard to achieve. We can see that class other in most classification scenarios is misclassified as wheat or corn. A visual comparison of classifiers’ performances is shown in Fig. 5. The area that is chosen for comparison of classifiers is located in the middle of the Vojvodina region (Fig. 2) and contains both very small parcels (central and top-left part of the figure), as well as large parcels (right and bottom-right part of the image). In the middle-left part of the image, medium-sized parcels are also present. We can notice that classification with the data from the RapidEye satellite only, Fig. 5(c), has the finest spatial resolution and shows the best separation of different parcels in the central part of the image. However, it is very noisy. This phenomenon can be easily noticed in the part of the area with large parcels. It also shows the worst performance in classifying soybean and sunflowers, which is expected from the results in Table 6. Classification with a classifier based on the 30 m Landsat-8 time series, Fig. 5(a), shows the worst results in the part of the area with small parcels. It is noticeable that neighboring parcels with different crops are merged into one due to the coarse spatial resolution. On the other hand, it shows rather good results of classification in the part of the area with large- and medium-sized parcels. The performance of the classifier based only on the pan-sharpened 15 m Landsat-8 time series is shown in Fig. 5(b). It illustrates an improvement over Fig. 5(a) in areas with smaller parcels; however, it has a lower classification accuracy, as can be confirmed by comparison of the results in Tables 2Table 3Table 4–5. Similar to the classifier based only on 30 m Landsat-8, Fig. 5(a), it also exhibits better performance over large fields in comparison with the classifier based only on RapidEye data, as well as a better overall accuracy, which is a consequence of the utilized time series that is not available in the case of RapidEye data. Classification with the fusion of RapidEye and 30 m Landsat-8 data shows a very good performance in all three areas and in all analyzed aspects. A good separation of small parcels is shown in the central part of the image. In the area with large- and medium-sized parcels, it shows very similar results to the 30 m Landsat-8 classifier, while its spatial resolution is much higher and is comparable with RapidEye. Fig. 5Visual comparison of classification results, example of crop fields in the vicinity of village Kucura in the municipality of Vrbas (location inside Landsat path 187): (a) 30 m Landsat-8 classifier; (b) 15 m pan-sharpened Landsat-8 classifier; (c) 5 m RapidEye classifier; and (d) 5 m classifier based on the proposed data fusion method for combining 30 m Landsat-8 and 5 m RapidEye data. Color interpretation: green: corn, orange: wheat, yellow: soybean, purple: sugar beet, blue: sunflower. Background is the image shown in Fig. 2 and represents the class “other.”  Finally, we investigated the significance of individual spectral bands and time samples in the created trees of different random forest classifiers. The results are shown in Fig. 6, where histograms representing the number of appearances of different components of the feature vectors (corresponding to some particular spectral band or particular image in time series) in decision trees of the used random forest classifiers are shown. In this way, we tried to estimate the importance of certain images in the time series, as well as the importance of individual spectral bands in different classification scenarios. In the case of classifiers using 30 m Landsat-8 data, each group of 10 bars with the same color corresponds to 10 spectral measurements of a particular pixel at some point in the time series, while in the case of the pan-sharpened 15 m Landsat-8 data, there are 11 bars due to the added panchromatic band. In the case of RapidEye data, each group of five bars with the same color corresponds to five measurements associated with one of the 5 m pixels in the neighborhood of that particular pixel. With classifiers based on the fusion of 5 m RapidEye and 30 m Landsat data, the first 45 features are from the RapidEye data and the following are data are from the Landsat-8 time series: 50 values in the case of path 186 and 40 in the case of path 187. The order of bars inside the groups in all cases is the same as the order of the corresponding spectral bands in images (their wavelengths are given in the legend). Fig. 6Significance of individual spectral bands and time samples in used random forest classifiers: (a) 5 m RapidEye; (b) 30 m Landsat-8 path 186; (c) 30 m Landsat-8 path 187; (d) 15 m Landsat-8 path 186; (e) 15 m Landsat-8 path 187; (f) 5 m fusion of RapidEye and Landsat path 186 data; (g) 5 m fusion of RapidEye and Landsat path 187 data.  When considering the significance of different spectral bands, we can conclude that all images exhibit almost the same pattern. This means that the NIR band has a significant influence in classifiers that use the proposed data fusion method, which can be seen in Figs. 6(f)–6(g), where in the first half of the feature vector (corresponding to RapidEye data), the NIR band is dominant in each of the groups corresponding to different pixels in the local neighborhood. It is also dominant in Figs. 6(b) and 6(c), especially in groups of the bars in the second part of the histograms, which correspond to images acquired in the later phases of the crops’ phenological development. It is interesting that the new coastal blue and thermal bands of Landsat-8 have quite a significant importance. In Figs. 6(b) and 6(c), as well as 6(f) and 6(g), the least importance is attributed to the spectral band that was designed for the detection of cirrus clouds. By looking at Figs. 6(a), 6(f), and 6(g), one can see that in the case when RapidEye data are used, i.e., the neighborhood of the central RapidEye pixel, the most important pixel that participates in the classifier’s decision is the central pixel of the neighborhood—the first group of bars in the histograms. Also, it is interesting to note that the role of RapidEye components significantly changes when they are combined with Landsat-8 data. This can be seen by comparing Fig. 6(a) with the first half of the histograms in Figs. 6(f) and 6(g). Histograms in Fig. 6 also show the degree of complexity of different random forest classifiers through differences in the scales of ordinates in each histogram. Namely, it can be said that classifiers with a higher complexity are characterized by more complex decision trees, which result in a higher number of appearances of each of the feature vector’s components. The described behavior is visible in Fig. 6, where a classifier based only on single RapidEye mosaic image, Fig. 6(a), has a histogram with several orders of magnitude higher values compared to histograms that correspond to other classifiers that utilize Landsat-8 data. This can be explained by the fundamental difference in the structures of the used RapidEye and Landsat-8 data, Sec. 3, since RapidEye mosaic image covers only a very small fraction of the whole phenological development of selected crops, while Landsat-8 data in all classification scenarios were used as a time series covering a much longer time period. The result is that the classifier that corresponds to the histogram in Fig. 6(a) has a much harder task and has a lower generalization capacity compared to others. This also confirms comparison of the cross-validation results presented in Tables 2Table 3Table 4Table 5Table 6Table 7–8, where the classifier corresponding to the histogram in Fig. 6(a) exhibits the worst performance and the classifier corresponding to the histogram in Fig. 6(b) has the best performance among the classifiers utilizing 30 m Landsat-8 data due to the longer time series. Also, the classifier with corresponding histogram in Fig. 6(f) has a smaller complexity and better performance than the classifier that is based on the proposed data fusion method and is described by the histogram in Fig. 6(g), which is related to the number of images in the time series. Finally, the classifier from Table 2 and Fig. 6(f) has the best trade-off between complexity and classification performance, as well as the best overall accuracy, which further justifies the proposed data fusion method in addition to the crop fields’ size issue. 6.ConclusionSupervised cropland classification is a very important tool for the efficient monitoring of agricultural production on a regional and state level. Moreover, with the recent fidelity of remote sensing data sources, it is coming into the notice of different parties, including individual producers interested in precision farming. In this paper, a cropland classification study using different data sources has been presented. It investigated and analyzed the applicability of different pixel-based classification approaches in the case of the given study area of the Vojvodina region in northern Serbia characterized by the very small size of crop fields, which is a common agricultural practice across Europe due to specific agricultural policies. The proposed data fusion method provides an alternative to the standard approach that would require use of commercial satellite imagery in the form of a high-resolution multispectral time series, which are, unfortunately, not very affordable, especially for public services with constrained budgets. The presented solution is based on the utilization of freely available data sources, such as the Landsat-8 time series, and the combination of these data with a single commercial high-resolution mosaic image in an attempt to overcoming the problem of inadequate spatial resolution of freely available satellite imagery. The issue with spatial resolution comes from the fact that of arable land in the given case are crop fields with a very small size (), which makes the 30 m Landsat-8 data too coarse for reliable classification. On the other hand, temporal resolution of Landsat-8 enables the capturing of the misaligned phenological development of selected crop types, which makes it a good choice as a source of time series data. Classification results from experiments including Landsat-8 and RapidEye data confirm the applicability of the proposed data fusion method and the possibility of achieving high-resolution crop classification with limited resources. They also confirm the advantage over pan-sharpened 15 m Landsat-8 data both in resolution and classification accuracy. Further improvements of classification accuracy are possible by the introduction of ensembles of different classifiers, which is the scope of future work. Also, a wider use of innovative crowdsourcing for ground-truth gathering can significantly improve the amount and quality of reference data and, consequently, the classification results. This presents an ongoing activity that should lead to higher engagement of end users in the whole process and wider application of remote sensing in the agricultural community. AcknowledgmentsThis research work has been supported by the Ministry of Science and Technology of Republic of Serbia through research projects III43002. We would also like to thank the Provincial Secretariat for Agriculture, Water Economy and Forestry of Vojvodina for its support. ReferencesF. Gallegoet al.,

“Efficiency assessment of using satellite data for crop area estimation in Ukraine,”

Int. J. Appl. Earth Obs. Geoinf., 29 22

–30

(2014). http://dx.doi.org/10.1016/j.jag.2013.12.013 ITCJDP 0303-2434 Google Scholar

Q. Liet al.,

“County-level rice area estimation in southern China using remote sensing data,”

J. Appl. Remote Sens., 8

(1), 083657

(2014). http://dx.doi.org/10.1117/1.JRS.8.083657 1931-3195 Google Scholar

G. PanG.-J. SunF.-M. Li,

“Using QuickBird imagery and a production efficiency model to improve crop yield estimation in the semi-arid hilly Loess Plateau, China,”

Environ. Model. Softw., 24

(4), 510

–516

(2009). http://dx.doi.org/10.1016/j.envsoft.2008.09.014 EMSOFT 1364-8152 Google Scholar

D. Lobell,

“The use of satellite data for crop yield gap analysis,”

Field Crops Res., 143 56

–64

(2013). http://dx.doi.org/10.1016/j.fcr.2012.08.008 FCREDZ 0378-4290 Google Scholar

V. WilliamsW. PhilipsonW. Philpot,

“Identifying vegetable crops with Landsat Thematic Mapper data,”

Photogramm. Eng. Remote Sens., 53

(2), 187

–191

(1987). PERSDV 0099-1112 Google Scholar

C. YangJ. EverittD. Murden,

“Evaluating high resolution SPOT 5 satellite imagery for crop identification,”

Comput. Electron. Agric., 75

(2), 347

–354

(2011). http://dx.doi.org/10.1016/j.compag.2010.12.012 CEAGE6 0168-1699 Google Scholar

K. JiaB. WuQ. Li,

“Crop classification using HJ satellite multispectral data in the North China Plain,”

J. Appl. Remote Sens., 7

(1), 073576

(2013). http://dx.doi.org/10.1117/1.JRS.7.073576 1931-3195 Google Scholar

B. TapsallP. MilenovK. Tasdemir,

“Analysis of RapidEye imagery for annual landcover mapping as an aid to European Union (EU) common agricultural policy,”

in Proc. of the ISPRS Technical Commission VII Mid-Term Symp. - 100 Years ISPRS,

568

–573

(2010). Google Scholar

G. Hanuschak, Obtaining Timely Crop Area Estimates Using Ground-Gathered and Landsat Data, Dept. of Agriculture, Economics, Statistics, and Cooperatives Service, US Govt. Print. Off., US

(1979). Google Scholar

R. A. RyersonR. N. DobbinsC. Thibault,

“Timely crop area estimates from Landsat,”

Photogramm. Eng. Remote Sens., 51

(11), 1735

–1743

(1985). PERSDV 0099-1112 Google Scholar

I. L. Castillejo-Gonzálezet al.,

“Object-and pixel-based analysis for mapping crops and their agro-environmental associated measures using QuickBird imagery,”

Comput. Electron. Agric., 68

(2), 207

–215

(2009). http://dx.doi.org/10.1016/j.compag.2009.06.004 CEAGE6 0168-1699 Google Scholar

P. HelmholzF. RottensteinerC. Heipke,

“Automatic quality control of cropland and grassland GIS objects using IKONOS satellite imagery,”

in Proc. of the ISPRS Technical Commission VII Mid-Term Symp. 100 Years ISPRS,

5275

–5280

(2010). Google Scholar

C. Reynold,

“Utilizing Landsat sidelaps to monitor global agriculture by a multi-platform system,”

in Proc. of the Global Geospatial Conf. Africa GIS,

(2013). Google Scholar

J. AschbacherM. P. Milagro-Pérez,

“The European Earth monitoring (GMES) programme: status and perspectives,”

Remote Sens. Environ., 120 3

–8

(2012). http://dx.doi.org/10.1016/j.rse.2011.08.028 RSEEA7 0034-4257 Google Scholar

D. Royet al.,

“Landsat-8: science and product vision for terrestrial global change research,”

Remote Sens. Environ., 145 154

–172

(2014). http://dx.doi.org/10.1016/j.rse.2014.02.001 RSEEA7 0034-4257 Google Scholar

Satellite Imagery Product Specifications, Version 4.1,

(2012). Google Scholar

L. Breiman,

“Random forests,”

Mach. Learn., 45

(1), 5

–32

(2001). http://dx.doi.org/10.1023/A:1010933404324 MALEEZ 0885-6125 Google Scholar

D. C. DuroS. E. FranklinM. G. Dubé,

“A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery,”

Remote Sens. Environ., 118 259

–272

(2012). http://dx.doi.org/10.1016/j.rse.2011.11.020 RSEEA7 0034-4257 Google Scholar

F. Löwet al.,

“Per-field crop classification in irrigated agricultural regions in middle Asia using random forest and support vector machine ensemble,”

Proc. SPIE, 8538 85380R1

(2012). http://dx.doi.org/10.1117/12.974588 PSISDG 0277-786X Google Scholar

R. Sonobeet al.,

“Random forest classification of crop type using multi-temporal TerraSAR-X dual-polarimetric data,”

Remote Sens. Lett., 5

(2), 157

–164

(2014). http://dx.doi.org/10.1080/2150704X.2014.889863 IGRSBY 1545-598X Google Scholar

R. LuoC. YihK. Su,

“Multisensor fusion and integration: approaches, applications, and future research directions,”

Sens. J., 2

(2), 107

–119

(2002). http://dx.doi.org/10.1109/JSEN.2002.1000251 ISJEAZ 1530-437X Google Scholar

A. Teweset al.,

“Monitoring vegetation dynamics in semi-arid rangelands of South Africa by fusion of high temporal resolution MODIS data with high spatial resolution RapidEye data,”

in Gemeinsame Jahrestagung der DGfK, der DGPF, der GfGI und des GiN,

(2014). Google Scholar

(2013). Google Scholar

(2007). Google Scholar

F. LöwG. Duveillerb,

“Determining suitable image resolutions for accurate supervised crop classification using remote sensing data,”

Proc. SPIE, 8893 88930I1

(2013). http://dx.doi.org/10.1117/12.2028634 PSISDG 0277-786X Google Scholar

G. DuveillerP. Defourny,

“A conceptual framework to define the spatial resolution requirements for agricultural monitoring using remote sensing,”

Remote Sens. Environ., 114

(11), 2637

–2650

(2010). http://dx.doi.org/10.1016/j.rse.2010.06.001 RSEEA7 0034-4257 Google Scholar

Landsat repository, United States Geological Survey (USGS),

(2013) https://lta.cr.usgs.gov/ Google Scholar

USGS Global Visualization Viewer, United States Geological Survey (USGS),

(2013) http://glovis.usgs.gov/ Google Scholar

G. Tycet al.,

“The RapidEye mission design,”

Acta Astronaut., 56

(1), 213

–219

(2005). http://dx.doi.org/10.1016/j.actaastro.2004.09.029 AASTCF 0094-5765 Google Scholar

BlackBridge AG, “The RapidEye red edge band,”

(2014) http://blackbridge.com/rapideye/upload/Red_Edge_White_Paper.pdf October ). 2014). Google Scholar

C. SchusterM. FörsterB. Kleinschmit,

“Testing the red edge channel for improving land-use classifications based on high-resolution multi-spectral satellite data,”

Int. J. Remote Sens., 33

(17), 5583

–5599

(2012). http://dx.doi.org/10.1080/01431161.2012.666812 IJSEDK 0143-1161 Google Scholar

C. Conradet al.,

“Potenziale des ‘Red Edge’ Kanals von RapidEye zur Unterscheidung und zum Monitoring landwirtschaftlicher Anbaufrüchte am Beispiel des Usbekischen Bewässerungssystems Khorezm,”

RapidEye Science Archive (RESA) - Vom Algorithmus zum Produkt, 203

–217 Springer, Berlin

(2012). Google Scholar

P. L. ScaramuzzaM. A. BouchardJ. L. Dwyer,

“Development of the Landsat data continuity mission cloud-cover assessment algorithms,”

IEEE Trans. Geosci. Remote Sens., 50

(4), 1140

–1154

(2012). http://dx.doi.org/10.1109/TGRS.2011.2164087 IGRSD2 0196-2892 Google Scholar

M. J. HughesD. J. Hayes,

“Automated detection of cloud and cloud shadow in single-date Landsat imagery using neural networks and spatial post-processing,”

Remote Sens., 6

(6), 4907

–4926

(2014). http://dx.doi.org/10.3390/rs6064907 17LUAF 2072-4292 Google Scholar

A. Berket al.,

“Exploiting MODTRAN radiation transport for atmospheric correction: the FLAASH algorithm,”

in Proc. of the Fifth Int. Conf. on Information Fusion,

798

–803

(2002). Google Scholar

Y. ZhangR. Mishra,

“From UNB PanSharp to Fuze Go—the success behind the pan-sharpening algorithm,”

Int. J. Image Data Fusion, 5

(1), 39

–53

(2014). http://dx.doi.org/10.1080/19479832.2013.848475 Google Scholar

M. M. HayesS. N. MillerM. A. Murphy,

“High-resolution landcover classification using random forest,”

Remote Sens. Lett., 5

(2), 112

–121

(2014). http://dx.doi.org/10.1080/2150704X.2014.882526 IGRSBY 1545-598X Google Scholar

J. A. Longet al.,

“Object-oriented crop classification using multitemporal ETM+ SLC-off imagery and random forest,”

GISci. Remote Sens., 50

(4), 418

–436

(2013). http://dx.doi.org/10.1080/15481603.2013.817150 Google Scholar

C. G. Souzaet al.,

“Algorithms and attributes for coffee mapping in southern Minas Gerais, Brazil,”

South East. Eur. J. Earth Obs. Geomat., 3

(2S), 651

–655

(2014). Google Scholar

M. Hallet al.,

“The WEKA data mining software: an update,”

ACM SIGKDD Explor. Newslett., 11

(1), 10

–18

(2009). http://dx.doi.org/10.1145/1656274.1656278 Google Scholar

J. Cohen,

“A coefficient of agreement for nominal scales,”

Educ. Psychol. Meas., 20

(1), 37

(1960). http://dx.doi.org/10.1177/001316446002000104 EPMEAJ 0013-1644 Google Scholar

R. G. Congalton,

“A review of assessing the accuracy of classifications of remotely sensed data,”

Remote Sens. Environ., 37

(1), 35

–46

(1991). http://dx.doi.org/10.1016/0034-4257(91)90048-B RSEEA7 0034-4257 Google Scholar

R. CongaltonK. Green, Assessing the Accuracy of Remotely Sensed Data: Principles and Practices, CRC Press, Boca Raton, Florida

(2008). Google Scholar

BiographyVladimir Crnojević is the head of Department of Electrical Engineering and professor at the University of Novi Sad, Serbia. At the same time, he is the director of BioSense Center devoted to application of state-of-the-art ICT solutions in agriculture, forestry, and environment. His research interests include image processing, pattern recognition, computer vision, evolutionary algorithms, and wireless sensor networks. Predrag Lugonja is a researcher at the BioSense Center. He graduated in 2008 at the Faculty of Technical Sciences, University of Novi Sad, Department of Power, Electronic and Telecommunication Engineering. He is currently pursuing his PhD in electrical engineering at the Faculty of Technical Sciences. Since 2009, he has been working at BioSense Center in the fields of GIS and remote sensing. Branko Brkljač is a PhD student of electrical engineering at the Faculty of Technical Sciences, University of Novi Sad, Serbia, and researcher at the BioSense Center. He obtained BSc in electrical engineering in 2009 and MSc degree in telecommunications and signal processing in 2010, both from the same university. His current research interests are mainly in the fields of signal (image) processing and pattern recognition. Borislav Brunet is a senior specialist in the Provincial Secretariat for Agriculture, Water Economy and Forestry of Vojvodina, where he has been employed since 2003. He received his BSc degree from the Faculty of Agriculture, University of Novi Sad, and is working in the fields of GIS and application of information technologies in agriculture. |

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||