
1. Introduction

Since the 1970s, Earth observation satellites have provided an enormous amount of imagery for monitoring various environmental phenomena.1 These images can be categorized into several classes on the basis of their spectral characteristics: (i) visible (0.4 to 0.7 μm); (ii) near-infrared (NIR: 0.7 to 1.0 μm); (iii) shortwave infrared (SWIR: 1.0 to 3.0 μm); (iv) thermal infrared (TIR: 3 to … μm); and (v) microwave (3 mm to 3 m). Different satellites may acquire images over the same spectral range, but these images can differ significantly in spatial and temporal resolution: relatively high spatial resolution images usually have low temporal resolution, and vice versa.2 For example, the moderate-resolution imaging spectroradiometer (MODIS) and Landsat have similar spectral ranges and provide consistent surface reflectance,3–5 but they differ in spatial and temporal resolution (see Table 1 for details). Some environmental applications (e.g., agricultural drought, irrigation management, and grassland monitoring) require both high spatial (e.g., 30 m) and high temporal (e.g., weekly) resolution6–8 because conditions change rapidly. To address this need, a new research area called spatiotemporal data fusion has emerged during the past several years.
These techniques have been applied successfully to predict synthetic high-resolution images for variables and processes such as vegetation indices,5,9 evapotranspiration,10 urban heat islands,11 forest change,12 and surface temperature.2 In most of these studies, Landsat images were fused with MODIS images to generate synthetic images at the spatial resolution of Landsat (30 m) and the temporal resolution of MODIS (1 to 8 days).13–18 Other researchers have used other data combinations, such as (i) Landsat images with medium resolution imaging spectrometer (MERIS) images, which have a spatial resolution of 300 to 1200 m and a temporal resolution of 3 days;19,20 and (ii) HJ-1 CCD images, which have a 30-m spatial resolution and a 4-day temporal resolution, with MODIS images.21 These techniques can be broadly divided into three groups: (i) spatial and temporal adaptive reflectance fusion model (STARFM)-based techniques, (ii) unmixing-based fusion techniques, and (iii) sparse representation-based fusion techniques. Some example cases are briefly described in Table 2.

Table 1 Comparison between spectral, spatial, and temporal resolutions of MODIS and Landsat-8 images.

Table 2 Description of some of the spatiotemporal data fusion techniques implemented over the visible and shortwave infrared spectral bands.

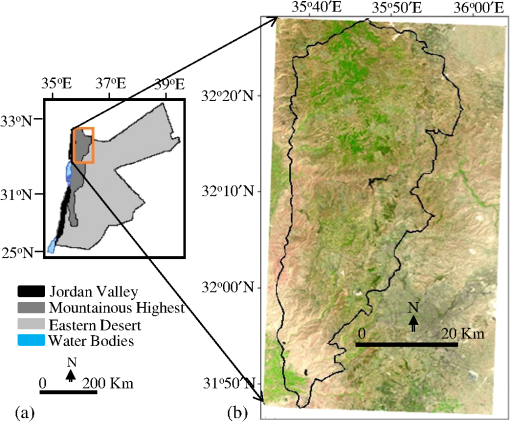

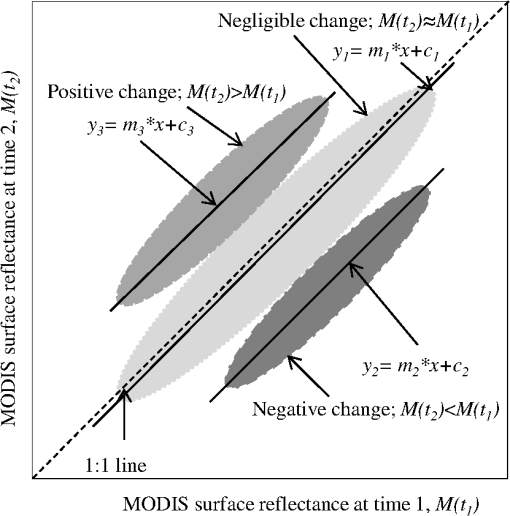

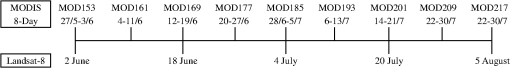

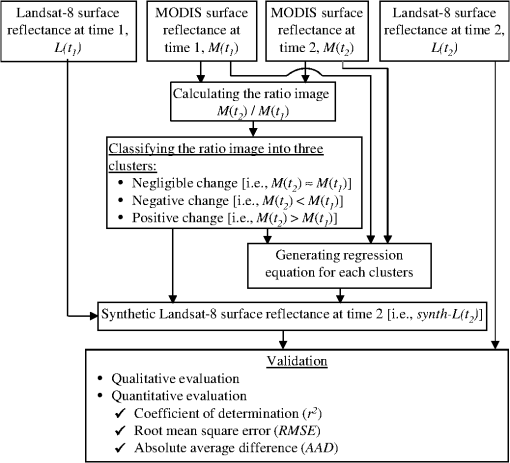

In general, the above-mentioned techniques were complex and required relatively long processing times. To address this, we developed a new data fusion technique called the spatiotemporal image-fusion model (STI-FM) and demonstrated its effectiveness in enhancing the temporal resolution of Landsat-8 surface temperature using MODIS data.24 Here, we evaluated the applicability of STI-FM, with the required modifications, for generating a synthetic Landsat-8 image at time 2 [i.e., synth-$L(t_2)$] for the red, NIR, and SWIR spectral bands by integrating a pair of MODIS images [i.e., $M(t_1)$ and $M(t_2)$] with a Landsat-8 image acquired at time 1 [i.e., $L(t_1)$]. We chose these spectral bands because they are widely used to calculate vegetation greenness and wetness conditions and are effective for monitoring these parameters over short periods, such as plant growing seasons. Our aim was to implement this technique over a heterogeneous, agriculture-dominated semiarid region in Jordan, Middle East.

2. Materials

2.1. General Description of the Study Area

Jordan is located in the Middle East and is divided into three major geographic areas: the Jordan Valley, the Mountainous Heights Plateau, and the Eastern Desert [see Fig. 1(a)]. Our study area [Fig. 1(b)] is located in the northwestern part of the Mountainous Heights Plateau, where elevation ranges from 600 to 1100 m above mean sea level. It lies between 31°47′N and 32°29′N and between 35°37′E and 36°00′E, covering an area of approximately … [see Fig. 1(b)]. The region has a Mediterranean climate, with a hot, dry summer (May to August, with no rainfall); a cool, wet winter (November to February, with an average temperature of … to 7°C and 250 to 650 mm of rainfall); and two transition seasons (spring in March and April, and fall in September and October).
About 70% of the annual evaporation occurs in the dry season (May to August), with an annual potential evaporation of …. The major agricultural land uses in the study area are (i) cereal crops (wheat and/or barley grown between November and July), which account for … of the area; (ii) orchards (olives, apples, nectarines, etc.), which occupy …; and (iii) grazing and forestry, which account for ….25,26

2.2. Data Requirements and Preprocessing

In this study, we used remote sensing data acquired by two satellite systems: (i) the Landsat-8 Operational Land Imager (OLI), freely available from the United States Geological Survey (USGS); and (ii) MODIS, freely available from the National Aeronautics and Space Administration (NASA). For both, we selected the red (0.62 to … μm), NIR (0.84 to … μm), and SWIR (2.10 to … μm) spectral bands. For Landsat-8, we obtained five nearly cloud-free images at 30-m spatial resolution acquired between June and August 2013. For MODIS, we obtained nine 8-day composite surface reflectance products (MOD09A1) at 500-m spatial resolution for the same period. The 8-day composites reduce the likelihood of cloud contamination relative to the daily MODIS products.27 The selected dates are presented in Fig. 2.

Fig. 2 Acquisition dates of moderate-resolution imaging spectroradiometer (MODIS) and Landsat-8 imagery used in the study.

2.2.1. Preprocessing of MODIS images

The MODIS surface reflectance products were originally provided in sinusoidal projection. We used the MODIS reprojection tool (MRT 4.1)28 to subset the images to the spatial extent of the study area and to reproject them into the coordinate system of the Landsat-8 images [Universal Transverse Mercator (Zone 36N, WGS84)].
We then coregistered these images to the Landsat-8 images to allow accurate geographic comparison and to reduce potential geometric errors (e.g., in position and orientation), because spatial misregistration can influence the derived information. Finally, we identified cloud-contaminated pixels using the quality control band (i.e., the 500-m flag, another layer available in the MOD09A1 dataset) and excluded them from further analysis.

2.2.2. Preprocessing of Landsat-8 images

The Landsat-8 images were provided as digital numbers (DN). We converted the DN values into top-of-atmosphere (TOA) reflectance using the following equation given in Ref. 29:

$$\rho_{\lambda} = \frac{M_{\rho}\,Q_{\mathrm{cal}} + A_{\rho}}{\sin(\theta_{SE})},$$

where $\rho_{\lambda}$ is the band-specific Landsat-8 TOA reflectance; $Q_{\mathrm{cal}}$ is the pixel DN value; $M_{\rho}$ is the band-specific multiplicative rescaling factor; $A_{\rho}$ is the band-specific additive rescaling factor; and $\theta_{SE}$ is the local sun elevation angle at the scene center. The values of $M_{\rho}$, $A_{\rho}$, and $\theta_{SE}$ were available in the metadata file of each image.

To transform TOA reflectance into surface reflectance, we employed a simple but effective atmospheric correction method that does not require any information about atmospheric conditions at the time of image acquisition. It relies on MODIS surface reflectance images, because MODIS and Landsat have consistent surface reflectance values,3,4,13,30 and was accomplished in three steps. In the first step, we up-scaled the Landsat-8 pixels to the spatial resolution of the MODIS images (500 m) by averaging over a moving window of … pixels (i.e., approximately the equivalent of one 500-m MODIS pixel).
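The DN-to-TOA conversion and the first (up-scaling) step of the atmospheric correction can be sketched in Python with NumPy as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, the sun elevation is assumed to be given in degrees, and the up-scaling is assumed to average non-overlapping square blocks whose size `window` is left as a parameter.

```python
import numpy as np


def toa_reflectance(dn, mult, add, sun_elevation_deg):
    """Convert Landsat-8 digital numbers (DN) to TOA reflectance.

    rho = (M_rho * Q_cal + A_rho) / sin(theta_SE), where M_rho (mult) and
    A_rho (add) are the band-specific rescaling factors and theta_SE is the
    scene-center sun elevation angle, all taken from the image metadata.
    """
    dn = np.asarray(dn, dtype=float)
    return (mult * dn + add) / np.sin(np.deg2rad(sun_elevation_deg))


def block_mean_upscale(image, window):
    """Up-scale an image by averaging non-overlapping window x window blocks.

    ASSUMPTION: blocks do not overlap; trailing rows/columns that do not fill
    a complete block are trimmed. NaN pixels (e.g., masked clouds) are ignored
    in each block mean; an all-NaN block yields NaN with a RuntimeWarning.
    """
    image = np.asarray(image, dtype=float)
    rows = (image.shape[0] // window) * window
    cols = (image.shape[1] // window) * window
    blocks = image[:rows, :cols].reshape(rows // window, window,
                                         cols // window, window)
    return np.nanmean(blocks, axis=(1, 3))
```

For example, with a multiplicative factor of 2.0e-5, an additive factor of -0.1 (illustrative values), and a sun elevation of 90°, a DN of 10000 maps to a TOA reflectance of 0.1.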
This up-scaling made the Landsat-8 and MODIS images comparable in spatial resolution and increased their spectral reliability;31 it decreases the spectral variance between the images while increasing spatial autocorrelation, effects that have been investigated in several studies.32–35 In the second step, we determined linear relationships between the up-scaled Landsat-8 images and the MODIS images for each spectral band from scatter plots between them. In the third step, we applied the coefficients of these relationships (slope and intercept) to the original 30-m Landsat-8 images to generate Landsat-8 surface reflectance images. Note that the Landsat Ecosystem Disturbance Adaptive Processing System (LEDAPS) atmospheric correction algorithm could not be applied to Landsat-8 because climate data records were not available for it.36 Finally, we used the Landsat-8 quality assessment (QA) band to identify cloud-contaminated pixels and excluded them from further analysis.

3. Methods

Figure 3 shows a schematic diagram of the STI-FM framework. It consists of two major components: (i) establishing the relationships between MODIS images acquired at two different times [i.e., $M(t_1)$ and $M(t_2)$], and (ii) generating the synthetic Landsat-8 surface reflectance image at time 2 [i.e., synth-$L(t_2)$] by combining the Landsat-8 image acquired at time 1 [i.e., $L(t_1)$] with the relationships constructed in the first component, followed by validation.

Fig. 3 Schematic diagram of the proposed spatiotemporal image-fusion model for enhancing the temporal resolution of Landsat-8 surface reflectance images.

To establish relationships between the two MODIS images [i.e., $M(t_1)$ and $M(t_2)$], we performed the following steps:

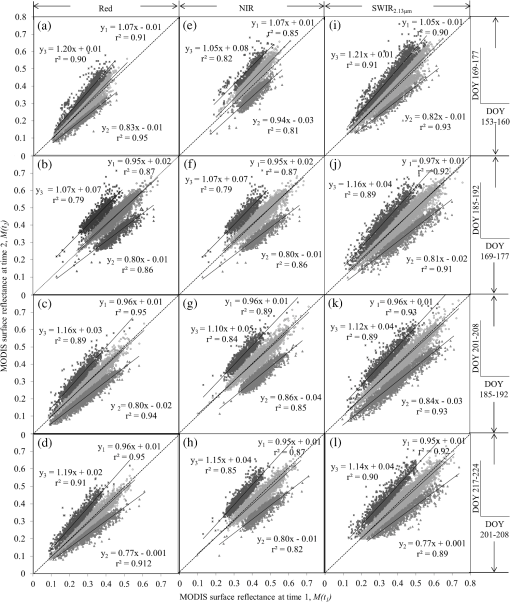

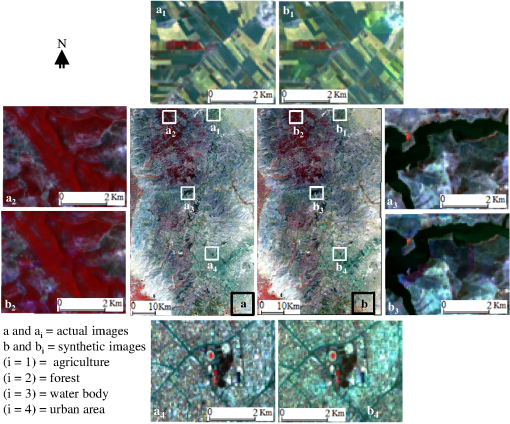

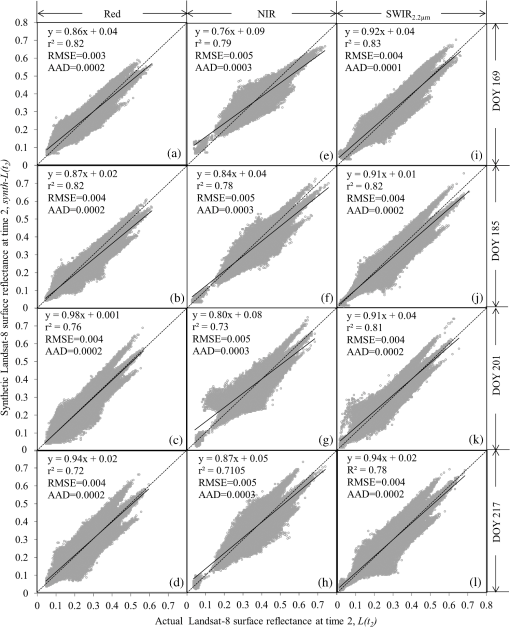
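The two components of Fig. 3 can be sketched as follows, under explicit assumptions. The rule used here to form the three change clusters (a symmetric `threshold` on the MODIS difference $M(t_2) - M(t_1)$), the cluster codes, and all function names are hypothetical and are not necessarily those of STI-FM; the sketch only illustrates fitting one linear model per cluster on the MODIS pair and applying it pixel by pixel to $L(t_1)$. It also assumes that each MODIS pixel maps onto an exact, co-registered block of Landsat-8 pixels.

```python
import numpy as np

# Hypothetical cluster codes (NODATA marks pixels with missing MODIS values).
NODATA, NEGLIGIBLE, NEGATIVE, POSITIVE = -1, 0, 1, 2


def classify_change(m1, m2, threshold):
    """Label MODIS pixels by the sign of their change from t1 to t2.

    ASSUMPTION: a symmetric threshold on M(t2) - M(t1) separates negligible,
    negative, and positive change; this rule is illustrative only.
    """
    diff = np.asarray(m2, dtype=float) - np.asarray(m1, dtype=float)
    labels = np.full(diff.shape, NEGLIGIBLE, dtype=int)
    labels[diff < -threshold] = NEGATIVE
    labels[diff > threshold] = POSITIVE
    labels[~np.isfinite(diff)] = NODATA
    return labels


def fit_cluster_models(m1, m2, labels):
    """Least-squares fit of M(t2) = slope * M(t1) + intercept per cluster."""
    m1 = np.asarray(m1, dtype=float)
    m2 = np.asarray(m2, dtype=float)
    models = {}
    for cluster in (NEGLIGIBLE, NEGATIVE, POSITIVE):
        mask = labels == cluster
        if np.count_nonzero(mask) >= 2:  # a line needs at least two points
            slope, intercept = np.polyfit(m1[mask], m2[mask], deg=1)
            models[cluster] = (slope, intercept)
    return models


def synthesize_landsat(l1, labels, models, factor):
    """Apply each pixel's cluster model to L(t1) to predict synth-L(t2).

    ASSUMPTION: each MODIS pixel covers exactly factor x factor Landsat pixels.
    """
    l1 = np.asarray(l1, dtype=float)
    fine = np.repeat(np.repeat(labels, factor, axis=0), factor, axis=1)
    if fine.shape != l1.shape:
        raise ValueError("label grid does not match the Landsat grid")
    synth = np.full(l1.shape, np.nan)  # NODATA or unfitted clusters stay NaN
    for cluster, (slope, intercept) in models.items():
        mask = fine == cluster
        synth[mask] = slope * l1[mask] + intercept
    return synth
```

Fitting separate models per cluster, rather than one regression for the whole scene, lets pixels that brightened and pixels that darkened between $t_1$ and $t_2$ follow different linear relations.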

To generate the synthetic Landsat-8 surface reflectance image [i.e., synth-$L(t_2)$] at 8-day intervals, we used the Landsat-8 image acquired at time 1 [i.e., $L(t_1)$] together with the classified image and the cluster-specific linear regression models derived in the previous steps. Rather than applying a single linear equation to the entire scene, we applied conditional linear functions based on the classified MODIS image to assign the surface reflectance value of each pixel in the synthetic image. For example, to generate the synthetic Landsat-8 image for day of year (DOY) 185, we first calculated the linear regressions between the MODIS images for DOY 169 to 176 and DOY 185 to 192, and then applied the regression coefficients to the Landsat-8 surface reflectance image of DOY 169. Our image-fusion model assumes that the linear relationship between two MODIS images also applies to the two corresponding Landsat-8 images, because the two sensors provide consistent surface reflectance values. Under this assumption, the linear regressions provide a practical means of generating synthetic Landsat-8 images.

3.1. Validating STI-FM

We evaluated the synth-$L(t_2)$ images against the actual Landsat-8 images, which were available only at 16-day intervals. We used two methods: (i) qualitative evaluation by visual examination and (ii) quantitative evaluation using statistical measures, namely the coefficient of determination ($R^2$), root mean square error (RMSE), and absolute average difference (AAD).
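The three statistics can be computed with a short NumPy function such as the one below (a minimal sketch; the function name is hypothetical). It excludes cloud-masked (NaN) pixels, computes $R^2$ as the squared Pearson correlation between the actual and synthetic values, and assumes that AAD is the absolute value of the mean difference between the synthetic and actual images.

```python
import numpy as np


def fusion_metrics(actual, synthetic):
    """Compute R^2, RMSE, and AAD between actual and synthetic images.

    Pixels that are NaN in either image (e.g., cloud-masked) are excluded.
    ASSUMPTION: AAD is taken as |mean(synthetic - actual)|.
    """
    a = np.asarray(actual, dtype=float).ravel()
    s = np.asarray(synthetic, dtype=float).ravel()
    valid = np.isfinite(a) & np.isfinite(s)
    a, s = a[valid], s[valid]
    n = a.size
    da, ds = a - a.mean(), s - s.mean()
    r2 = np.sum(da * ds) ** 2 / (np.sum(da ** 2) * np.sum(ds ** 2))
    rmse = np.sqrt(np.sum((s - a) ** 2) / n)
    aad = abs(np.sum(s - a) / n)
    return float(r2), float(rmse), float(aad)
```

Because positive and negative differences cancel in this definition of AAD, it can be much smaller than the RMSE for the same image pair.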
The equations for these statistical measures are as follows:

$$R^2 = \left[\frac{\sum_{i=1}^{n}(A_i-\bar{A})(S_i-\bar{S})}{\sqrt{\sum_{i=1}^{n}(A_i-\bar{A})^2}\,\sqrt{\sum_{i=1}^{n}(S_i-\bar{S})^2}}\right]^2,$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}(S_i-A_i)^2},$$

$$\mathrm{AAD} = \left|\frac{1}{n}\sum_{i=1}^{n}(S_i-A_i)\right|,$$

where $A_i$ and $S_i$ are the values of pixel $i$ in the actual and the synthetic Landsat-8 surface reflectance images; $\bar{A}$ and $\bar{S}$ are the mean values of the actual and the synthetic Landsat-8 images; and $n$ is the number of observations.

4. Results and Discussion

4.1. Evaluation of the Relationships Between MODIS Images Acquired at Two Different Times

Figure 5 shows the relationships between 8-day composite MODIS images acquired on two different dates for the red, NIR, and SWIR spectral bands during the period June 2 to August 12, 2013. Strong relationships existed for each of the clusters (negligible change, negative change, and positive change) in all spectral bands during the period of observation. The $R^2$, slope, and intercept values were in the ranges (i) 0.85 to 0.95, 0.95 to 1.07, and 0.003 to 0.01, respectively, for the negligible change cluster; (ii) 0.81 to 0.94, 0.77 to 0.94, and 0.0005 to 0.04, respectively, for the negative change cluster; and (iii) 0.79 to 0.91, 1.05 to 1.21, and 0.01 to 0.08, respectively, for the positive change cluster. The negligible change clusters showed the highest $R^2$ values because their pixels underwent no significant change between the two 8-day composite MODIS images, which were 16 days apart. We were unable to compare these findings with other work because we found no similar studies in the literature. Although using 8-day MODIS composites may reduce cloud contamination, it introduces another issue: two consecutive MODIS images might be anywhere from 2 to 16 days apart, because each 8-day composite is generated using the minimum-blue criterion,27 which selects the clearest-sky day within the composite period.
Quantifying the effect of using 8-day composites instead of daily data was beyond the scope of this paper but may merit further exploration.

Fig. 5 Relationship between 8-day composite MODIS surface reflectance images acquired at time 1 [i.e., $M(t_1)$] and time 2 [i.e., $M(t_2)$] for the red [(a)–(d)], near-infrared (NIR) [(e)–(h)], and shortwave infrared (SWIR) [(i)–(l)] spectral bands during the period June 2 to August 12, 2013 [i.e., day of year (DOY) 153 to 224]. For each panel, three clusters (negligible change, negative change, and positive change) are formed as per Fig. 3. The dotted and solid lines represent the 1:1 line and the regression line, respectively.

4.2. Evaluation of the Synthetic Landsat-8 Surface Reflectance Images

Before conducting quantitative evaluations, we performed qualitative evaluations by comparing the actual and synthetic Landsat-8 images. For this purpose, we generated pseudocolor composite images by assigning the NIR, red, and SWIR spectral bands to the red, green, and blue display channels, respectively; an example is shown in Fig. 6. Figure 6 shows the actual Landsat-8 image acquired on June 18, 2013 (DOY 169) [Fig. 6(a)] and the corresponding synthetic Landsat-8 image [Fig. 6(b)], which was produced using a Landsat-8 and MODIS image pair acquired on DOY 153 and a MODIS image acquired on DOY 169. We evaluated four land cover types: agricultural land [Figs. 6(a1) and 6(b1)], forest [Figs. 6(a2) and 6(b2)], water bodies [Figs. 6(a3) and 6(b3)], and urban areas [Figs. 6(a4) and 6(b4)]. In general, visual cues (in particular location, shape, size, and texture) were reproduced in the synthetic images with negligible differences from the actual images. However, the tones (i.e., the DN representing the surface reflectance values) showed some differences.
These differences may arise from using 500-m MODIS surface reflectance images to calculate Landsat-8 surface reflectance values at 30-m resolution.

Fig. 6 Example comparison between pseudocolor composite images (NIR, red, and SWIR spectral bands assigned to the red, green, and blue display channels, respectively) of the actual and synthetic Landsat-8 images for June 18, 2013. Panels [(a1), (b1)], [(a2), (b2)], [(a3), (b3)], and [(a4), (b4)] show enlarged views of agricultural land, forest, a water body, and an urban area, respectively, in both the actual and synthetic images. The synthetic image was produced using a Landsat-8 and MODIS image pair acquired on DOY 153 and a MODIS image acquired on DOY 169.

Figure 7 shows the relationships between the actual and the synthetic Landsat-8 surface reflectance images for the red, NIR, and SWIR spectral bands for DOY 169, 185, 201, and 217. Strong relationships existed between the actual and synthetic images for all spectral bands over the study period. In the linear regression analysis, the $R^2$, slope, and intercept values were in the ranges (i) 0.72 to 0.82, 0.86 to 0.98, and 0.0009 to 0.042, respectively, for the red band; (ii) 0.71 to 0.79, 0.80 to 0.87, and 0.166 to 0.0800, respectively, for the NIR band; and (iii) 0.78 to 0.83, 0.91 to 0.94, and 0.0096 to 0.0367, respectively, for the SWIR band. The RMSE values were between 0.003 and 0.004 for the red band, 0.005 for the NIR band, and 0.004 for the SWIR band. The AAD values were 0.0002, 0.0003, and between 0.0001 and 0.0002 for the red, NIR, and SWIR bands, respectively.

Fig. 7 Relationship between the actual Landsat-8 surface reflectance images and the corresponding synthetic Landsat-8 surface reflectance images for the red [(a)–(d)], NIR [(e)–(h)], and SWIR [(i)–(l)] spectral bands on DOY 169 (June 18, 2013) [(a), (e), (i)], DOY 185 (July 4, 2013) [(b), (f), (j)], DOY 201 (July 20, 2013) [(c), (g), (k)], and DOY 217 (August 5, 2013) [(d), (h), (l)]. The dotted and solid lines represent the 1:1 line and the regression line, respectively.

Our findings were similar to, and in some cases better than, those of other studies. For example, (i) Gao et al.13 implemented STARFM over boreal forest and obtained AAD values of 0.004, 0.0129, and 0.0078 for the red, NIR, and SWIR bands, respectively; (ii) Roy et al.14 applied a semiphysical fusion model over two study sites in the United States (Oregon and Idaho) and obtained AAD values of 0.015, 0.22, and 0.28 at the Oregon site for the red, NIR, and SWIR bands, respectively; (iii) Zhu et al.15 applied ESTARFM over heterogeneous regions and achieved AAD values of 0.0095 and 0.0196 for the red and NIR bands, respectively; (iv) Walker et al.5 used STARFM to generate synthetic Landsat ETM+ surface reflectance images over dryland forests and found $R^2$ values of 0.85 and 0.51 for the red and NIR bands, respectively; (v) Song and Huang23 employed a sparse representation-based technique over boreal forests and found $R^2$ values of 0.71 and 0.90, RMSE values of 0.02 and 0.03, and AAD values of 0.01 and 0.21 for the red and NIR bands, respectively; and (vi) Zhang et al.18 used ESTDFM and observed $R^2$ values of 0.73 and 0.82 and AAD values of 0.009 and 0.0167 for the red and NIR bands, respectively. The proposed model should also be applicable to other satellite systems with similar spectral and orbital characteristics, such as other Landsat missions, MODIS, MERIS, and ASTER.
In addition, the model is relatively simple and easily reproducible, which may satisfy most users' needs; such a simple method may be well suited to a range of applications. Although our results demonstrated strong relationships between the actual and synthetic Landsat-8 images, several issues are worth considering for further improvement, such as:

5. Concluding Remarks

In this study, we demonstrated the applicability of the STI-FM technique for enhancing the temporal resolution of Landsat-8 images from 16 to 8 days using 8-day MODIS surface reflectance images, and implemented it over a heterogeneous, agriculture-dominated semiarid region in Jordan. Our results showed that the proposed method could generate synthetic Landsat-8 surface reflectance images for the red, NIR, and SWIR spectral bands with relatively high accuracy ($R^2$, RMSE, and AAD values in the ranges 0.71 to 0.83, 0.003 to 0.005, and 0.0001 to 0.0003, respectively). The method is simple because it does not require any correction parameters or high-quality land-use maps to predict the synthetic images. Despite its accuracy and simplicity, we recommend that the method be thoroughly evaluated before it is adopted in environmental conditions other than semiarid regions like ours.

Acknowledgments

We thank Yarmouk University in Jordan for partial support in the form of a PhD scholarship to Mr. K. Hazaymeh, and the Natural Sciences and Engineering Research Council of Canada for a Discovery Grant to Dr. Q. Hassan. We also thank USGS and NASA for providing Landsat-8 and MODIS images free of charge.

References

1. J. Jensen, Remote Sensing of the Environment: An Earth Resource Perspective, 2nd ed., Pearson Prentice Hall, New Jersey (2007).
2. Q. Weng, P. Fu, and F. Gao, “Generating daily land surface temperature at Landsat resolution by fusing Landsat and MODIS data,” Remote Sens. Environ. 145, 55–67 (2014). http://dx.doi.org/10.1016/j.rse.2014.02.003
3. J. G. Masek et al., “A Landsat surface reflectance dataset,” IEEE Geosci. Remote Sens. Lett. 3(1), 68–72 (2006). http://dx.doi.org/10.1109/LGRS.2005.857030
4. M. Feng et al., “Quality assessment of Landsat surface reflectance products using MODIS data,” Comp. Geosci. 38(1), 9–22 (2012). http://dx.doi.org/10.1016/j.cageo.2011.04.011
5. J. J. Walker et al., “Evaluation of Landsat and MODIS data fusion products for analysis of dryland forest phenology,” Remote Sens. Environ. 117, 381–393 (2012). http://dx.doi.org/10.1016/j.rse.2011.10.014
6. A. Tong and Y. He, “Comparative analysis of SPOT, Landsat, MODIS, and AVHRR normalized difference vegetation index data on the estimation of leaf area index in a mixed grassland ecosystem,” J. Appl. Remote Sens. 7(1), 073599 (2013). http://dx.doi.org/10.1117/1.JRS.7.073599
7. C. Rocha et al., “Remote sensing based crop coefficients for water management in agriculture,” in Sustainable Development: Authoritative and Leading Edge Content for Environmental Management, pp. 167–192, InTech, Croatia (2012).
8. C. Atzberger, “Advances in remote sensing of agriculture: context description, existing operational monitoring systems and major information needs,” Remote Sens. 5(2), 949–981 (2013). http://dx.doi.org/10.3390/rs5020949
9. T. Udelhoven, “Long term data fusion for a dense time series analysis with MODIS and Landsat imagery in an Australian Savanna,” J. Appl. Remote Sens. 6(1), 063512 (2012). http://dx.doi.org/10.1117/1.JRS.6.063512
10. C. Cammalleri et al., “A data fusion approach for mapping daily evapotranspiration at field scale,” Water Resour. Res. 49(8), 4672–4686 (2013). http://dx.doi.org/10.1002/wrcr.20349
11. B. Huang et al., “Generating high spatiotemporal resolution land surface temperature for urban heat island monitoring,” IEEE Geosci. Remote Sens. Lett. 10(5), 1011–1015 (2013). http://dx.doi.org/10.1109/LGRS.2012.2227930
12. T. Hilker et al., “Generation of dense time series synthetic Landsat data through data blending with MODIS using a spatial and temporal adaptive reflectance fusion model,” Remote Sens. Environ. 113(9), 1988–1999 (2009). http://dx.doi.org/10.1016/j.rse.2009.05.011
13. F. Gao et al., “On the blending of the Landsat and MODIS surface reflectance: predicting daily Landsat surface reflectance,” IEEE Trans. Geosci. Remote Sens. 44(8), 2207–2218 (2006). http://dx.doi.org/10.1109/TGRS.2006.872081
14. D. P. Roy et al., “Multi-temporal MODIS–Landsat data fusion for relative radiometric normalization, gap filling, and prediction of Landsat data,” Remote Sens. Environ. 112(6), 3112–3130 (2008). http://dx.doi.org/10.1016/j.rse.2008.03.009
15. X. Zhu et al., “An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions,” Remote Sens. Environ. 114(11), 2610–2623 (2010). http://dx.doi.org/10.1016/j.rse.2010.05.032
16. D. Fu et al., “An improved image fusion approach based on enhanced spatial and temporal the adaptive reflectance fusion model,” Remote Sens. 5(12), 6346–6360 (2013). http://dx.doi.org/10.3390/rs5126346
17. M. Wu et al., “Use of MODIS and Landsat time series data to generate high-resolution temporal synthetic Landsat data using a spatial and temporal reflectance fusion model,” J. Appl. Remote Sens. 6(1), 063507 (2012). http://dx.doi.org/10.1117/1.JRS.6.063532
18. W. Zhang et al., “An enhanced spatial and temporal data fusion model for fusing Landsat and MODIS surface reflectance to generate high temporal Landsat-like data,” Remote Sens. 5(10), 5346–5368 (2013). http://dx.doi.org/10.3390/rs5105346
19. R. Zurita-Milla et al., “Downscaling time series of MERIS full resolution data to monitor vegetation seasonal dynamics,” Remote Sens. Environ. 113(9), 1874–1885 (2009). http://dx.doi.org/10.1016/j.rse.2009.04.011
20. R. Zurita-Milla et al., “Using MERIS fused images for land-cover mapping and vegetation status assessment in heterogeneous landscapes,” Int. J. Remote Sens. 32(4), 973–991 (2011). http://dx.doi.org/10.1080/01431160903505286
21. J. Meng, X. Du, and B. Wu, “Generation of high spatial and temporal resolution NDVI and its application in crop biomass estimation,” Int. J. Digital Earth 6(3), 203–218 (2013). http://dx.doi.org/10.1080/17538947.2011.623189
22. B. Huang and H. Song, “Spatiotemporal reflectance fusion via sparse representation,” IEEE Trans. Geosci. Remote Sens. 50(10), 3707–3716 (2012). http://dx.doi.org/10.1109/TGRS.2012.2186638
23. H. Song and B. Huang, “Spatiotemporal satellite image fusion through one-pair image learning,” IEEE Trans. Geosci. Remote Sens. 51(4), 1883–1896 (2013). http://dx.doi.org/10.1109/TGRS.2012.2213095
24. K. Hazaymeh and Q. K. Hassan, “Fusion of MODIS and Landsat-8 surface temperature images: a new approach,” PLoS One. http://dx.doi.org/10.1371/journal.pone.0117755
25. “Country pasture/forage resource profiles, Jordan,” (2014), http://www.fao.org/ag/agp/agpc/doc/counprof/PDF%20files/Jordan.pdf (October 2014).
26. “National strategy and action plan to combat desertification,” (2014), http://ag.arizona.edu/oals/IALC/jordansoils/_html/NAP.pdf (June 2014).
27. E. F. Vermote and A. Vermeulen, “MODIS algorithm technical background document, atmospheric correction algorithm: spectral reflectances (MOD09), Version 4.0,” (2014), http://modis.gsfc.nasa.gov/data/atbd/atbd_mod08.pdf (June 2014).
28. G. Dwyer and M. J. Schmidt, “The MODIS reprojection tool,” in Earth Science Satellite Remote Sensing, Vol. 2: Data, Computational Processing, and Tools, pp. 162–177, Springer and Tsinghua University Press, China (2006).
29. “Using the USGS Landsat 8 product,” (2014), http://landsat.usgs.gov/Landsat8_Using_Product.php (June 2014).
30. M. Feng et al., “Global surface reflectance products from Landsat: assessment using coincident MODIS observations,” Remote Sens. Environ. 134, 276–293 (2013). http://dx.doi.org/10.1016/j.rse.2013.02.031
31. Y. Ling et al., “Effects of spatial resolution ratio in image fusion,” Int. J. Remote Sens. 29(7), 2157–2167 (2008). http://dx.doi.org/10.1080/01431160701408345
32. H. S. He, S. J. Ventura, and D. J. Mladenoff, “Effects of spatial aggregation approaches on classified satellite imagery,” Int. J. Geogr. Inf. Sci. 16(1), 93–109 (2002). http://dx.doi.org/10.1080/13658810110075978
33. D. G. Goodin and G. M. Henebry, “The effect of rescaling on fine spatial resolution NDVI data: a test using multi-resolution aircraft sensor data,” Int. J. Remote Sens. 23(18), 3865–3871 (2002). http://dx.doi.org/10.1080/01431160210122303
34. J. Ju, S. Gopal, and E. D. Kolaczyk, “On the choice of spatial and categorical scale in remote sensing land cover classification,” Remote Sens. Environ. 96(1), 62–77 (2005). http://dx.doi.org/10.1016/j.rse.2005.01.016
35. M. D. Nelson et al., “Effects of satellite image spatial aggregation and resolution on estimates of forest land area,” Int. J. Remote Sens. 30(8), 1913–1940 (2009). http://dx.doi.org/10.1080/01431160802545631
36. “Landsat surface reflectance climate data record,” (2014), http://landsat.usgs.gov/CDR_LSR.php (October 2014).
37. T. R. Oke, Boundary Layer Climates, 2nd ed., Routledge, New York (1987).
38. C. E. Ahrens, C. D. Jackson, and P. L. Jackson, Meteorology Today: An Introduction to Weather, Climate, and the Environment, 8th ed., Brooks/Cole Cengage Learning, Stamford (2012).
39. E. H. Chowdhury and Q. K. Hassan, “Use of remote sensing-derived variables in developing a forest fire danger forecasting system,” Nat. Hazards 67(2), 321–334 (2013). http://dx.doi.org/10.1007/s11069-013-0564-7
40. “Landsat climate data record (CDR) surface reflectance. Product guide, version 4,” (2014), http://landsat.usgs.gov/documents/cdr_sr_product_guide_v40.pdf (September 2014).

Biography

Khaled Hazaymeh received his BA degree in geography and spatial planning from Yarmouk University, Jordan, in 2004 and his MSc degree in remote sensing and GIS from Universiti Putra Malaysia in 2009. He is currently pursuing his PhD degree in earth observation in the Department of Geomatics Engineering at the University of Calgary, Canada. His research focuses on environmental modeling using remote sensing techniques.

Quazi K. Hassan received his PhD degree in remote sensing and ecological modeling from the University of New Brunswick, Canada. He is currently an associate professor in the Department of Geomatics Engineering at the University of Calgary. His research interests include the integration of remote sensing, environmental modeling, and GIS in addressing environmental issues.