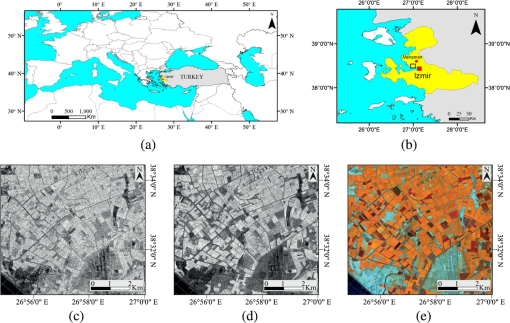

1. Introduction

A wide variety of remote sensing satellite sensors provide data with diverse spectral and spatial resolutions for observing many phenomena on the Earth. Land use and land cover mapping requires both high spectral and high spatial resolution for accurate analysis and interpretation. Data fusion is a key preprocessing method for integrating multisensor and multiresolution images, and it can yield results superior to those of each individual dataset.1,2 The performance of image fusion techniques for different sensors is an active research topic, studied with both qualitative and quantitative analyses.3 Previous studies have shown that fusing synthetic aperture radar (SAR) and multispectral (MS) images improves the spatial information while preserving the spectral information.4,5 Several data fusion approaches have been proposed as part of image processing, and their contributions to image classification accuracy have been studied.3,6 Various satellite images have been used for different applications of SAR and MS data fusion, with varying results in each study.4,7,8 A generalized intensity modulation for fusing MS LANDSAT and ERS-1 SAR images was addressed,7 and the benefit of fusion was demonstrated with the maximum likelihood classifier (MLC). In that study, the classification accuracy of vegetation did not improve when the SAR data were used, but the discrimination of urban areas was enhanced. High-resolution spotlight-mode TerraSAR-X and RapidEye images were fused using principal component (PC) substitution, Ehlers fusion (EF), Gram–Schmidt (GS), high-pass filtering (HPF), modified intensity hue saturation (M-IHS), and wavelet algorithms.8 In both visual and statistical analyses, the HPF method gave better results than the other methods.
Another study suggested that the PC, color normalization, GS, and University of New Brunswick methods should be used only for single-date, single-sensor datasets.4 The Ehlers method was the only one that preserved the spectral information of the MS data, which makes it suitable for classification.4 Dual-polarized HH-HV (H, horizontal; V, vertical) RADARSAT and PALSAR data were fused with LANDSAT-TM data to compare land cover classification results.2 That study indicated that among the discrete wavelet transform (DWT), HPF, principal component analysis (PCA), and normalized multiplication methods, only DWT improved the overall accuracy of the MLC. In a previous study, the authors fused PALSAR and RADARSAT images with a Satellite Pour l'Observation de la Terre (SPOT) image using five fusion approaches (IHS, PCA, DWT, HPF, and EF).9 Among the fused images, EF and HPF gave satisfactory results for agricultural areas. In a second experiment, different combinations of optical-SAR and SAR-SAR fusion results were compared, and EF demonstrated better visual and statistical results.9 This paper extends the previous study of Ref. 10, which focused on fusing RapidEye data with VV polarized TerraSAR-X SAR data. In that analysis, three pixel-based fusion methods, namely adjustable SAR-MS fusion (ASMF), EF, and HPF, were examined. The ASMF method can fuse high-resolution SAR data with low-resolution MS data and vice versa, and it allows scaled values to be used for each of the SAR and MS images.11,12 EF is a hybrid approach that combines an IHS transform with Fourier-domain filtering: a low-pass filter is applied to the intensity component, and an inverse high-pass filter is applied to the high-resolution image.4 In the HPF method, the high-pass filtered high-resolution image is added to each MS band of the low-resolution image.2,5 Finally, visual and statistical analyses were presented and compared.
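The HPF principle described above (inject the high-pass component of the high-resolution image into every MS band) can be sketched in a few lines of NumPy/SciPy. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function name `hpf_fusion`, the box-filter size, and the unit injection weight are hypothetical, and the MS bands are assumed to be co-registered and already resampled to the high-resolution grid. Published HPF variants often scale the injected detail by band statistics; that refinement is omitted here.

```python
import numpy as np
from scipy.ndimage import uniform_filter

def hpf_fusion(ms, hr, kernel_size=5, weight=1.0):
    """Minimal HPF fusion sketch (hypothetical parameters).

    ms : float array (bands, rows, cols), MS image on the high-res grid
    hr : float array (rows, cols), high-resolution image (here, a SAR band)
    """
    hr = hr.astype(float)
    # High-pass component = image minus its local (box-filter) mean
    high_pass = hr - uniform_filter(hr, size=kernel_size, mode="reflect")
    # Add the same spatial detail to every MS band
    return ms.astype(float) + weight * high_pass[np.newaxis, :, :]

# Usage with synthetic data: 5 bands, as for RapidEye
rng = np.random.default_rng(0)
ms = rng.uniform(50.0, 200.0, size=(5, 32, 32))
sar = rng.gamma(4.0, 25.0, size=(32, 32))
fused = hpf_fusion(ms, sar)
```

Because a flat high-resolution image has no high-frequency content, it leaves the MS bands unchanged; all spatial detail comes from the high-pass residual.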
In the previous study, four metrics were used for the statistical analysis, and among all methods, HPF preserved the spectral information best. Extending that study, the VH image of the TerraSAR-X data is also considered here, and the contribution of dual-polarized (VV–VH) TerraSAR-X SAR data to RapidEye over agricultural land types is investigated using different image fusion methods. Furthermore, the statistical analysis is extended with additional quality metrics, for a total of seven. The bias of the mean (BM) is the difference between the means of the original and fused images relative to the original MS data;13 the difference in variance (DIV) is the difference between the variances relative to the original MS data;13 entropy measures the additional information in the fused image;13 the relative average spectral error (RASE) characterizes the average performance of the fusion approach;14 and the correlation coefficient (CC) gives the correlation between the original MS image and the fused image. The universal image quality index (UIQI) combines several factors, such as luminance distortion, contrast distortion, and loss of correlation.15 The Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS; relative dimensionless global error in synthesis) is a global metric that measures the spectral distortion in a fused image.16 Additionally, Bayesian data fusion (BDF) is applied to both the TerraSAR-X VV and VH polarized data. BDF has rarely been used in the literature.
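The seven metrics defined above, together with the rank-and-average scheme used in Section 4.1 to compare fused images, can be sketched with NumPy and SciPy. This is a hedged illustration: the formulas follow common definitions from the fusion literature and are assumed, not confirmed, to match Refs. 13 to 16. The sign conventions of BM, DIV, and the entropy difference, the 256-bin histogram, the global (windowless) UIQI, the ERGAS pixel-size ratio `ratio` (h/l), and all function names are assumptions. The "better" direction of each metric follows Section 3.3.

```python
import numpy as np
from scipy.stats import rankdata

# Float arrays shaped (bands, rows, cols); MS resampled to the fused grid.

def bias_of_mean(ms, fused):
    # BM: (mean_MS - mean_fused) / mean_MS, averaged over bands; ideal 0
    m, f = ms.mean(axis=(1, 2)), fused.mean(axis=(1, 2))
    return float(np.mean((m - f) / m))

def difference_in_variance(ms, fused):
    # DIV: (var_MS - var_fused) / var_MS, averaged over bands; ideal 0
    v, w = ms.var(axis=(1, 2)), fused.var(axis=(1, 2))
    return float(np.mean((v - w) / v))

def entropy_difference(ms, fused, bins=256):
    # E: fused minus original Shannon entropy (bits), averaged; ideal 0
    def h(band):
        counts, _ = np.histogram(band, bins=bins)
        p = counts[counts > 0] / counts.sum()
        return -(p * np.log2(p)).sum()
    return float(np.mean([h(f) - h(m) for m, f in zip(ms, fused)]))

def correlation(ms, fused):
    # CC per band, averaged; ideal 1
    return float(np.mean([np.corrcoef(m.ravel(), f.ravel())[0, 1]
                          for m, f in zip(ms, fused)]))

def uiqi(ms, fused):
    # Global UIQI per band (no sliding window), averaged; ideal 1
    q = []
    for m, f in zip(ms, fused):
        mx, my = m.mean(), f.mean()
        cxy = ((m - mx) * (f - my)).mean()
        q.append(4 * cxy * mx * my / ((m.var() + f.var()) * (mx**2 + my**2)))
    return float(np.mean(q))

def _rmse(ms, fused):
    return np.sqrt(((ms - fused) ** 2).mean(axis=(1, 2)))

def rase(ms, fused):
    # RASE = 100 / M * sqrt(mean_k RMSE_k^2), M = mean of original bands
    return float(100.0 / ms.mean() * np.sqrt(np.mean(_rmse(ms, fused) ** 2)))

def ergas(ms, fused, ratio):
    # ERGAS = 100 * (h/l) * sqrt(mean_k (RMSE_k / mu_k)^2)
    mu = ms.mean(axis=(1, 2))
    return float(100.0 * ratio * np.sqrt(np.mean((_rmse(ms, fused) / mu) ** 2)))

def average_rank(table):
    # table: metric name -> one value per fused image; mean rank, 1 = best
    keys = []
    for name, vals in table.items():
        vals = np.asarray(vals, dtype=float)
        if name in ("CC", "UIQI"):
            keys.append(-vals)          # larger is better
        elif name in ("BM", "DIV", "E"):
            keys.append(np.abs(vals))   # closest to zero is better
        else:
            keys.append(vals)           # RASE, ERGAS: smaller is better
    return np.mean([rankdata(k) for k in keys], axis=0)
```

Ranking each metric separately and averaging the ranks avoids mixing metrics with different units and scales, which is the motivation for the ranking approach.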
BDF has been applied successfully to high-resolution IKONOS images and recommended as a promising technique for optical/SAR image fusion,17 and it is implemented in the ORFEO Toolbox.18 The BDF method lets the user adjust the images during the fusion process, and spectral information can be emphasized by selecting a small weighting coefficient.17 This study also investigates the contribution of different SAR polarizations, fused with MS data through various fusion techniques, to land use image classification. Land use classification requires robust classification methods that enable accurate mapping of land use or land cover classes. Many studies in the literature have used SAR and MS data, separately or together, with support vector machine (SVM)19 and MLC20 classification methods. A decision fusion strategy for the joint classification of multiple segmentation levels with SAR and optical data has also been evaluated.21 Ensemble learning and kernel-based classification methods have been shown to improve land use and land cover classification accuracy in remote sensing. The effectiveness of random forests (RF) for land cover classification has been assessed.22 A comprehensive analysis of the choice of the kernel function and its parameters for SVMs in land cover classification has been presented.23 In another study, multiclass SVMs were compared with MLC and artificial neural network classifiers for land cover classification.24 The classification of multitemporal SAR and MS data was achieved by fusing SVMs;25 in that study, the original outputs of each discriminant function were used instead of fusing the final classification outputs. In the present study, the contribution of dual-polarized SAR data to optical data for land use classification accuracy is investigated.
The effect of the fusion methods on classification accuracy is explored by comparing SVMs as a kernel-based learning method, RF as an ensemble learning method, k-nearest neighbor (k-NN) as a fundamental machine learning classifier, and MLC as a statistical model.

2. Study Area and Data

2.1. Test Site

The study area, the Menemen Plain, is located in Izmir Province in western Turkey, as shown in Fig. 1. The Aegean Sea to the west and Izmir Bay to the south form the borders of the study area. The area consists mostly of agricultural fields and is approximately . The crop species depend on the harvesting period and the characteristics of the soil. When the RapidEye data were acquired, the fields were covered with summer crops such as corn, cotton, watermelon, and meadow. There are also some residential areas and small water bodies in the region. The topographic relief of the study area is lower than 1%, which reduces the effects of topography on image processing.

2.2. Data Set

In this experiment, dual-polarized (VV and VH) TerraSAR-X SAR data and MS RapidEye data were used. The TerraSAR-X image has ground resolution, and it was preprocessed to the Enhanced Ellipsoid Corrected product type (i.e., radiometrically enhanced). It was acquired on August 29, 2010, in an ascending pass direction in StripMap mode. Detailed specifications of the data sets are given in Table 1.

Table 1 Specifications of data set.

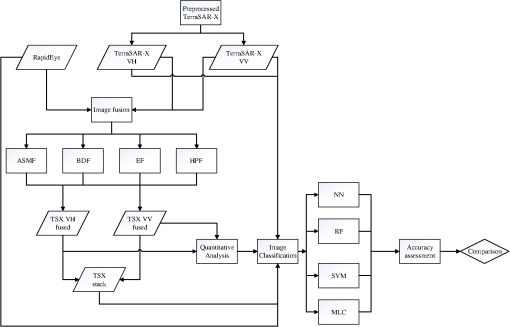

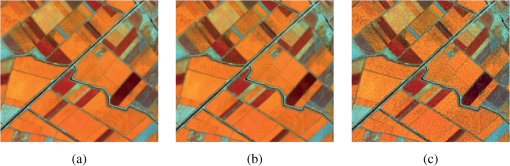

The RapidEye image was acquired on August 10, 2010, in L3A format, i.e., radiometrically calibrated and orthorectified data resampled to ground resolution (WGS 84 datum, UTM projection, zone 35). RapidEye provides five optical spectral bands ranging from 400 to 850 nm. It differs significantly from standard high-resolution MS satellite sensors (e.g., IKONOS, QuickBird, SPOT) in having an additional red-edge band (690 to 730 nm). For the classification analysis, fieldwork was carried out on the acquisition date of the RapidEye MS image, and crop types in the agricultural lands were determined using handheld GPS receivers. The crop types were defined carefully to represent all crops in the test site (i.e., for each crop type, ground-truth data were collected from 5 to 15 different fields).

3. Methodology

In this section, the methodological approach is presented. First, preprocessing steps were applied to the SAR images, which were then fused with the RapidEye data using four fusion methods, and the fusion quality was assessed with various quantitative analyses. Lastly, four image classification methods were used to evaluate the contribution of the SAR images to the optical image. The general workflow is shown in Fig. 2.

3.1. Preprocessing

Image preprocessing is necessary before the image fusion methods are applied. First, the SAR images were filtered with a gamma MAP filter with kernel window to reduce speckle. Then, both the VV and VH polarized TerraSAR-X images were registered to the RapidEye image with a root mean square error of less than pixel and resampled to its original pixel size of . Since only one optical image is used in the study and it is not severely affected by atmospheric conditions, no atmospheric correction was applied before the fusion process.
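The gamma MAP speckle filter named above can be sketched as follows, using the widely cited formulation attributed to Lopes et al. This is a hedged sketch, not the software used in the study: the window size `size` and the equivalent number of looks `looks` are hypothetical (the study's kernel size is not reproduced in this text), and the input is assumed to be a strictly positive intensity image in linear scale, not dB.

```python
import numpy as np
from scipy.ndimage import uniform_filter

def gamma_map_filter(img, size=7, looks=1.0):
    """Gamma MAP speckle filter, minimal sketch (hypothetical parameters).

    img   : 2-D strictly positive SAR intensity image (linear scale)
    size  : square window size
    looks : equivalent number of looks L
    """
    img = img.astype(float)
    mean = uniform_filter(img, size)
    var = np.maximum(uniform_filter(img * img, size) - mean * mean, 0.0)
    cu = 1.0 / np.sqrt(looks)        # coefficient of variation of speckle
    cmax = np.sqrt(2.0) * cu         # above this: treat as point target
    with np.errstate(divide="ignore", invalid="ignore"):
        ci = np.sqrt(var) / mean     # local coefficient of variation
        alpha = (1.0 + cu**2) / (ci**2 - cu**2)
        b = alpha - looks - 1.0
        d = mean**2 * b**2 + 4.0 * alpha * looks * img * mean
        map_est = (b * mean + np.sqrt(d)) / (2.0 * alpha)
    out = np.where(ci <= cu, mean, map_est)  # homogeneous area: local mean
    return np.where(ci >= cmax, img, out)    # strong scatterer: keep pixel

# Usage on simulated 4-look speckle over a flat reflectivity of 100
rng = np.random.default_rng(0)
speckled = 100.0 * rng.gamma(shape=4.0, scale=0.25, size=(128, 128))
filtered = gamma_map_filter(speckled, size=7, looks=4.0)
```

The three cases mirror the filter's logic: homogeneous windows are replaced by their mean, heterogeneous ones by the MAP estimate, and very bright isolated targets are left untouched.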
3.2. Image Fusion Methods

In this study, four pixel-level image fusion approaches were used: ASMF, EF, HPF, and BDF. The ASMF method accepts separate weights for the SAR and MS images, and two ASMF-based fused images were obtained with different weights: ASMF-I, with 100% weights for both the SAR and MS images, and ASMF-II, with 50% and 100% weights for the SAR and MS images, respectively. In the Ehlers fused image, the spatial information is improved while the spectral characteristics of the MS image are preserved. The HPF method combines spatial and spectral information using a band addition approach. In the BDF method, a weighting parameter between 0 and 1 balances the high-resolution (here, SAR) and MS images. Here, the TerraSAR-X images were first resampled to the same spatial resolution as the MS data. Then, two weighting values (0.5 and 0.1) were selected in the fusion process, producing the fused images BDF-I and BDF-II, respectively.

3.3. Quality Analysis of Image Fusion Results

The fusion quality was assessed through statistical analyses. The spectral quality of the fused images was evaluated quantitatively with the BM, DIV, entropy, CC, UIQI, RASE, and ERGAS quality metrics. For BM and DIV, values close to zero are expected. A CC value close to one signifies better correlation. A small entropy difference between the original MS image and the fused image indicates better spectral quality. Higher UIQI values indicate better spectral quality, whereas smaller RASE and ERGAS values indicate better spectral quality.16

3.4. Image Classification Methods

SVM is a very popular and powerful kernel-based learning method.
SVMs were introduced as a kernel-based classification algorithm in the machine learning community.26 Kernel-based learning aims to separate data in a high-dimensional feature space into which the data points are mapped through a kernel function. SVMs are defined for the binary separation problem of samples with a $d$-dimensional feature vector $\mathbf{x}_i \in \mathbb{R}^d$ and a binary class label $y_i \in \{-1, +1\}$, i.e., a training set $\{(\mathbf{x}_i, y_i)\}_{i=1}^{N}$. The SVM creates a decision surface between the samples of different classes by finding the optimal hyperplane, which maximizes the margin to the closest training samples (the support vectors). In this way, an optimal classification can be achieved for linearly separable classes. For linearly inseparable cases, kernel versions of the SVM are defined. The main purpose of the kernel approach in the SVM is to transform the data into a higher-dimensional space $\mathcal{H}$ through a mapping $\phi$, where binary classification can again be achieved linearly.27 The SVM uses a kernel function that corresponds to the inner product in the higher-dimensional space. The support vectors can be found by solving the optimization problem that maximizes Eq. (1) subject to Eq. (2):

$$\max_{\boldsymbol{\alpha}} \; \sum_{i=1}^{N}\alpha_i - \frac{1}{2}\sum_{i=1}^{N}\sum_{j=1}^{N}\alpha_i\alpha_j y_i y_j K(\mathbf{x}_i,\mathbf{x}_j), \tag{1}$$

$$\sum_{i=1}^{N}\alpha_i y_i = 0, \qquad 0 \le \alpha_i \le C, \quad i = 1,\dots,N, \tag{2}$$

where $N$ denotes the number of training samples, $C$ is the penalty parameter, $K(\cdot,\cdot)$ is the kernel function, and $\alpha_i$ are the Lagrange multipliers. The parameter $C$ controls the trade-off between margin maximization and the number of misclassified training samples that are tolerated. The SVM does not require the transformation function $\phi$ to be defined explicitly; it relies only on the inner product in the high-dimensional space, $K(\mathbf{x}_i,\mathbf{x}_j) = \phi(\mathbf{x}_i)\cdot\phi(\mathbf{x}_j)$. Each nonzero $\alpha_i$ corresponds to a support vector. Given all support vectors $\mathbf{x}_i$, $i = 1,\dots,S$, the nonlinear classification result for an arbitrary sample $\mathbf{x}$ is given by Eq. (3):

$$f(\mathbf{x}) = \operatorname{sgn}\left(\sum_{i=1}^{S}\alpha_i y_i K(\mathbf{x}_i,\mathbf{x}) + b\right), \tag{3}$$

where $S$ denotes the number of support vectors, $b$ is the bias term, and sgn stands for the sign function. Widely used kernel functions for the SVM are the linear kernel $K(\mathbf{x}_i,\mathbf{x}_j)=\mathbf{x}_i\cdot\mathbf{x}_j$, the polynomial kernel $K(\mathbf{x}_i,\mathbf{x}_j)=(\gamma\,\mathbf{x}_i\cdot\mathbf{x}_j + 1)^{p}$ with a degree parameter $p$ and a scaling factor $\gamma$, and the radial basis function kernel $K(\mathbf{x}_i,\mathbf{x}_j)=\exp(-\gamma\lVert\mathbf{x}_i-\mathbf{x}_j\rVert^2)$ with a scaling factor $\gamma$.
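To make the kernel expansion of Eq. (3) concrete, the sketch below evaluates it by hand from a trained SVM and checks the result against the library's own decision function. scikit-learn is our choice here, not the study's (the experiments were run in MATLAB). In scikit-learn's `SVC`, `dual_coef_` holds the products $\alpha_i y_i$ and `intercept_` holds $b$ for a binary problem, and the polynomial kernel is $(\gamma\,\mathbf{x}_i\cdot\mathbf{x}_j + r)^p$ with `coef0` $= r$. The toy data, helper names, and parameter values are hypothetical.

```python
import numpy as np
from sklearn.svm import SVC

def linear_kernel(A, B):
    # K(x_i, x_j) = x_i . x_j for every row pair of A and B
    return A @ B.T

def poly_kernel(A, B, gamma, degree=3, coef0=1.0):
    # K(x_i, x_j) = (gamma * x_i . x_j + coef0)^degree
    return (gamma * (A @ B.T) + coef0) ** degree

def rbf_kernel(A, B, gamma):
    # K(x_i, x_j) = exp(-gamma * ||x_i - x_j||^2)
    sq_dist = (A**2).sum(1)[:, None] + (B**2).sum(1)[None, :] - 2.0 * A @ B.T
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))

def svm_score(X, support_vectors, alpha_y, b, kernel):
    # Argument of sgn in Eq. (3): sum_i alpha_i y_i K(x_i, x) + b
    return kernel(X, support_vectors) @ alpha_y + b

# Hypothetical two-class toy data with 5 features (e.g., 5 spectral bands)
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(0.0, 1.0, (40, 5)), rng.normal(2.0, 1.0, (40, 5))])
y = np.repeat([0, 1], 40)

gamma = 0.5
clf = SVC(kernel="rbf", C=10.0, gamma=gamma).fit(X, y)
scores = svm_score(X, clf.support_vectors_, clf.dual_coef_.ravel(),
                   clf.intercept_[0], lambda A, B: rbf_kernel(A, B, gamma))
# sgn(score) > 0 maps to the second class label, as in scikit-learn
labels = np.where(scores > 0, clf.classes_[1], clf.classes_[0])
```

Only the support vectors enter the sum, which is why prediction cost scales with $S$ rather than with the full training-set size $N$.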
Multiclass problems can be broken down into several one-against-one binary problems. For an $M$-class problem, a total of $M(M-1)/2$ one-against-one SVMs are trained, and the majority vote of the one-against-one classifications decides the final label of each sample.28 RF is a supervised ensemble learning technique that has received considerable interest in the machine learning and pattern recognition community.29 RF is a powerful classifier of the “classification and regression tree” (CART) type, and, like kernel-based classification methods, it is popular in the remote sensing community, with applications to MS and hyperspectral images. In essence, the RF algorithm uses bagging (bootstrap aggregating) to build an ensemble of CART-like tree classifiers $h(\mathbf{x}, \Theta_k)$, $k = 1, 2, \dots$, in which each classifier casts a single vote for the most frequent class at the input vector $\mathbf{x}$, and the $\Theta_k$ are independent identically distributed random vectors.30 The term random refers to the way each tree is trained: the split at each node is chosen from a random subset of the features in the training data, which makes the classifier more robust against minor variations in the input data. RF also tries to minimize the correlation between the tree classifiers in the ensemble so that the trees behave as independent, identically distributed classifiers. Because of its nonparametric structure, high classification accuracy, and ability to estimate feature importance, RF is a promising method in remote sensing.22 k-NN is a well-known nonparametric method for classification and regression tasks. It is an instance-based (or sample-based) cornerstone classifier in machine learning that predicts the label of a test sample from the labels of its k nearest training samples in the feature space.
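The one-against-one scheme described at the start of the paragraph above can be sketched explicitly: one binary SVM per class pair, followed by a majority vote. This is a hypothetical illustration with scikit-learn (whose `SVC` already applies one-against-one internally for multiclass data); the helper names, the toy data, and the SVM parameters are assumptions. Ties are broken in favor of the lower class index, which is only one of several possible conventions.

```python
import itertools
import numpy as np
from sklearn.svm import SVC

def fit_one_vs_one(X, y, **svc_params):
    # Train one binary SVM per pair of classes: M(M-1)/2 models in total
    classes = np.unique(y)
    models = []
    for a, b in itertools.combinations(classes, 2):
        mask = (y == a) | (y == b)
        models.append((a, b, SVC(**svc_params).fit(X[mask], y[mask])))
    return classes, models

def predict_one_vs_one(classes, models, X):
    # Each pairwise model casts one vote per sample; the majority wins
    votes = np.zeros((X.shape[0], classes.size), dtype=int)
    index = {c: k for k, c in enumerate(classes)}
    for a, b, model in models:
        pred = model.predict(X)
        for c in (a, b):
            votes[pred == c, index[c]] += 1
    return classes[np.argmax(votes, axis=1)]  # ties -> lower class index

# Hypothetical 4-class toy data with 5 features
rng = np.random.default_rng(0)
centers = np.array([[0, 0, 0, 0, 0], [4, 0, 0, 0, 0],
                    [0, 4, 0, 0, 0], [0, 0, 4, 0, 0]], dtype=float)
X = np.vstack([rng.normal(c, 0.7, (30, 5)) for c in centers])
y = np.repeat(np.arange(4), 30)

classes, models = fit_one_vs_one(X, y, kernel="rbf", C=10.0, gamma=0.5)
y_hat = predict_one_vs_one(classes, models, X)
```

With the seven land use classes of this study, the scheme would train 7 × 6 / 2 = 21 binary SVMs.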
In k-NN, the label assigned to a test sample is decided by majority voting among its closest neighbors.31 The MLC is a well-known and widely used parametric supervised classification method in machine learning and pattern recognition. MLC assumes a multivariate normal distribution for each class and, for the classification task, computes the probability of a test pixel under this distribution model. In the MLC, the likelihood of a new sample belonging to class $\omega_i$ can be calculated with the following discriminant function:

$$g_i(\mathbf{x}) = \ln p(\omega_i) - \frac{1}{2}\ln\lvert\Sigma_i\rvert - \frac{1}{2}(\mathbf{x}-\mathbf{m}_i)^{T}\Sigma_i^{-1}(\mathbf{x}-\mathbf{m}_i), \tag{4}$$

where $\mathbf{x}$ is a $d$-dimensional data vector, $p(\omega_i)$ is the probability that class $\omega_i$ occurs in the image, $\Sigma_i$ is the covariance matrix for class $\omega_i$, and $\mathbf{m}_i$ is the mean vector for class $\omega_i$; the sample is assigned to the class with the largest $g_i(\mathbf{x})$. The key feature of the MLC is the inclusion of the covariance in the normal class distributions.32,33

4. Results and Discussions

4.1. Spectral Quality of Fused Images

For each fused image, seven quality metrics were calculated and averaged over the five bands, as given in Table 2. In the previous work,10 the five bands of each fused image were analyzed separately. The last row of Table 2 shows the ideal value of each metric. Each fusion technique performs differently on each quantitative metric, so the fusion algorithms were ranked on each metric and the ranks were then averaged over the seven metrics; Table 3 lists the fusion algorithms ranked by these quality scores. The results of the previous study10 indicated that comparing the five bands of each fused image with the original image individually was not an efficient basis for comparison, because of the large number of bands. We conclude that the TSX VH fused BDF-II image is the best fused image, in that it preserves the spectral information of the RapidEye data better than the other results. In the BDF, the selection of weights plays an important role in the quality of fusion.
Assigning a higher weight reduces the preservation of the spectral characteristics of the optical image.

Table 2 Statistical results of quality metrics.

Note: BM, bias of mean; DIV, difference in variance; E, entropy; CC, correlation coefficient; UIQI, universal image quality index; RASE, relative average spectral error; and ERGAS, Erreur Relative Globale Adimensionnelle de Synthèse.

Table 3 Rank values of fused images.

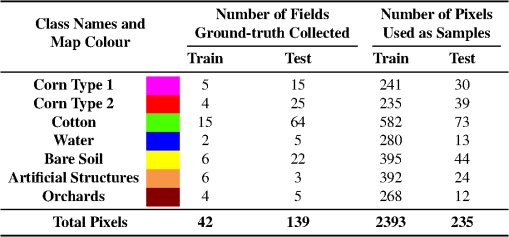

Note: BM, bias of mean; DIV, difference in variance; E, entropy; CC, correlation coefficient; UIQI, universal image quality index; RASE, relative average spectral error; and ERGAS, Erreur Relative Globale Adimensionnelle de Synthèse.

In the previous study,10 which used only the VV image, the HPF fused image gave better statistical results than the EF and ASMF methods. Comparing those results with the present study shows that the BDF method increased the correlation between the original optical data and the SAR-fused data. Moreover, adding the VH polarized image increased the correlation and produced the best result among all the fused images, using the BDF fusion method. Regarding the polarization of the SAR images, the TSX VV fused images yield slightly better results than the VH fused images (Table 2). Using more quality metrics together with a ranking system gave a better understanding of the results and a more efficient way of comparing them than in the previous study. The three best image fusion results according to the overall quality-metric ranking are shown in Fig. 3, zoomed into a particular patch for easier inspection.

4.2. Classification Results

The class information table of the Menemen test site for seven classes, namely corn type-1, corn type-2, cotton, water, bare soil, artificial structures, and orchards, is shown in Fig. 4. The artificial structures class represents residential areas and other constructions such as roads and airports. Corn type-1 and corn type-2 represent corn in two distinct growth stages resulting from different planting times. The orchards class represents different kinds of planted trees. The ground-truth data required for the training and reference (test) samples were collected during fieldwork by systematic sampling based on ground control points.
The number of training samples for each class is based on the proportion of the entire area covered by that class, as shown in Fig. 4: the higher the spatial coverage, the greater the number of training samples. Classification results are obtained for the following classifiers, which represent the major classification paradigms in machine learning: k-NN, RF, SVM, and MLC. For the SVM, linear, polynomial, and radial basis function kernels (SVM-lin, SVM-poly, and SVM-rbf, respectively) are used. All classification experiments are carried out in the MATLAB environment. For the k-NN classifier, the number of neighbors is chosen as and . In the RF, which is composed of several decision trees, the maximum number of trees is set to 500. The SVM parameters are optimized using the grid search method. The penalty parameter of the SVM is evaluated between , with a step size increment of 2, for all kernel function types. In SVM-rbf, the parameter of the kernel function is tested between , with a step size increment of 0.1. The polynomial degree is set to 3, and the parameter of the polynomial kernel is evaluated between , with a step size increment of 0.1. The best parameter settings are obtained with one-against-one multiclass classifier modeling. In the MLC, classification is performed with the discriminant function given in Eq. (4). First, the classification results for the original SAR and MS images are obtained as baselines for comparison with the fused images. Table 4 gives the classification accuracies separately for the two SAR polarization bands (TSX VH and TSX VV), the five-band RapidEye MS image, and the layer-stacked VH-VV SAR image (TSX VV-VH). According to Table 4, the VV polarization of the SAR data produces better classification accuracies than the VH polarization.
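The classifier setup above can be sketched with scikit-learn and NumPy. This is an assumption-laden illustration, not the study's MATLAB code: the MLC implements Eq. (4) with class priors $p(\omega_i)$ estimated from training-class frequencies (equal priors are another common choice), the default k values follow the 1-NN and 9-NN labels in the figure captions, and the SVM grid for $C$ and $\gamma$ is a hypothetical placeholder because the exact search ranges are not reproduced in this text. The class and function names are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC

class GaussianMLC:
    """Maximum likelihood classifier using the discriminant g_i(x) of Eq. (4)."""

    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.params_ = []
        for c in self.classes_:
            Xc = X[y == c]
            log_prior = np.log(Xc.shape[0] / X.shape[0])  # ln p(omega_i)
            mean = Xc.mean(axis=0)                        # m_i
            cov = np.cov(Xc, rowvar=False)                # Sigma_i
            _, logdet = np.linalg.slogdet(cov)            # ln |Sigma_i|
            self.params_.append((log_prior, mean, np.linalg.inv(cov), logdet))
        return self

    def predict(self, X):
        g = np.empty((X.shape[0], self.classes_.size))
        for k, (log_prior, mean, cov_inv, logdet) in enumerate(self.params_):
            diff = X - mean
            mahal = np.einsum("ij,jk,ik->i", diff, cov_inv, diff)
            g[:, k] = log_prior - 0.5 * logdet - 0.5 * mahal
        return self.classes_[np.argmax(g, axis=1)]  # largest g_i(x) wins

def build_classifiers(k_values=(1, 9)):
    # Hypothetical SVM grid; replace with the study's search ranges
    svm_grid = {"C": [2.0**e for e in range(-1, 8, 2)], "gamma": [0.1, 0.5, 1.0]}
    models = {f"{k}-NN": KNeighborsClassifier(n_neighbors=k) for k in k_values}
    models["RF"] = RandomForestClassifier(n_estimators=500, random_state=0)
    models["SVM-rbf"] = GridSearchCV(SVC(kernel="rbf"), svm_grid, cv=3)
    models["MLC"] = GaussianMLC()
    return models
```

In practice, each model would be fitted on the training pixels of the original, fused, or layer-stacked bands and evaluated on the reference samples; wrapping the SVM in `GridSearchCV` keeps the parameter search inside the training data.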
The RapidEye MS data reach a classification accuracy of up to 94.89% with the SVM-rbf classifier.

Table 4 Original image classification accuracies (in %).

In the second step of the experiment, Table 5 gives the classification accuracies of the images fused from single-polarization SAR and five-band MS data, with fusion methods in the rows and classifiers in the columns. The overall best classification accuracy, 95.32%, is obtained for ASMF-II_TSX VV with SVM-rbf. Compared with the other fusion methods, the ASMF-II_TSX VV fused image gives the highest classification accuracy for every classifier except SVM-poly. The second most successful case is the ASMF-I_TSX VV fusion, which has the best classification accuracies with SVM-lin and SVM-poly. For RF, the EF_TSX VH fusion also gives the best result, together with ASMF-II_TSX VV.

Table 5 Fused images’ classification accuracies (in %).

For every classifier except RF, at least one fusion method surpasses the classification accuracy of the original RapidEye data. It can be concluded that SAR and MS fusion can improve classification accuracy compared with the original MS image. The highest classification accuracy for each classification method is given in bold in Table 5. According to Table 5, ASMF-II_TSX VV has the best classification accuracy (95.32%), higher than the accuracies in Table 4, which covers all the original SAR and MS data. In the third step of the experiment, the fused images of the two SAR polarizations are layer-stacked, as in Table 6, so that all the SAR and MS information for the scene is used collectively. The highest classification accuracy for each classification method is given in bold in Table 6. Table 6 shows that the layer-stacked fusion results yield higher accuracies than their single-polarization counterparts in Table 5. The only exception is the best SVM-rbf result, which is equal (95.32%) for ASMF-II_TSX VV in Table 5 and for the HPF_TSX VV-VH layer-stacked fusion in Table 6. The highest classification accuracy for the test site, 95.74%, is obtained by the ASMF-II_TSX VV-VH and BDF-II_TSX VV-VH layer-stacked fusion images in Table 6.

Table 6 Layer-stacked fused images’ classification accuracies (in %).

4.3. Comparison of Results

The statistical significance of each fusion method’s accuracy relative to the RapidEye classification accuracy is evaluated with McNemar’s test for each classification method. A contingency table is formed from the agreements and disagreements between the RapidEye classification results and the classification results of the evaluated fusion method, which makes it possible to determine whether a fusion method differs significantly from the RapidEye classification. The McNemar’s test score is computed as in Eq. (5) from the contingency matrix given in Table 7.

Table 7 McNemar’s test confusion matrix.

The McNemar’s test results should be compared with the tabulated χ2 value for 1 degree of freedom.34 Table 8 gives the McNemar’s test scores comparing the classification accuracy of each fusion method with the RapidEye classification accuracy for each classification method. Results that are significantly better than the RapidEye results at 90% confidence are given in bold italics, whereas those that are significantly better at 95% confidence are given in bold only.

Table 8 McNemar’s test scores for difference of agreement compared to RapidEye classification, for fused and layer-stacked fused images. χ2 critical values at 1 degree of freedom: 2.71 for 90% confidence and 3.84 for 95% confidence.

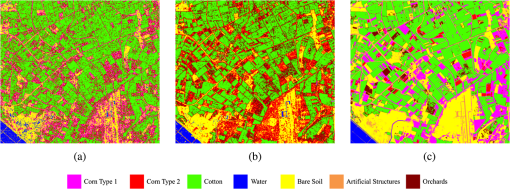

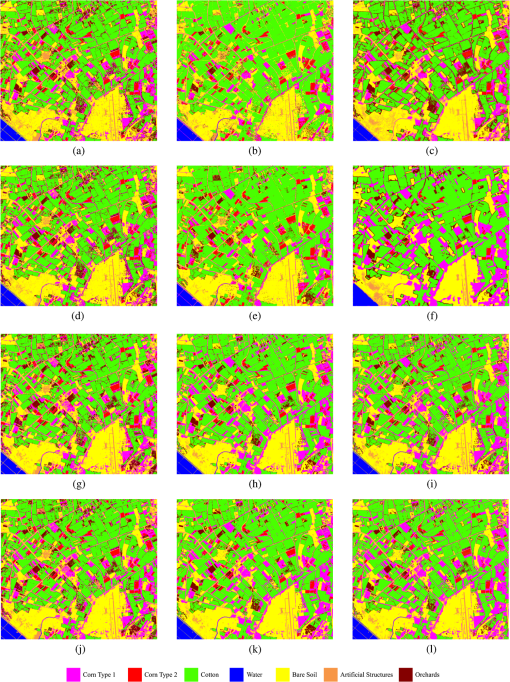

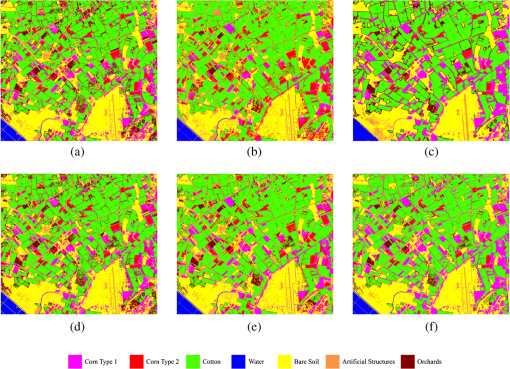
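McNemar’s comparison described in Section 4.3 can be sketched as follows. Eq. (5) and the layout of Table 7 are not reproduced in this text, so the sketch assumes the common form $\chi^2 = (f_{12} - f_{21})^2/(f_{12} + f_{21})$, where $f_{12}$ counts test samples classified correctly by method A but not by method B and $f_{21}$ the reverse; the continuity-corrected variant $(\lvert f_{12} - f_{21}\rvert - 1)^2/(f_{12} + f_{21})$ is offered as an option. Which variant the study used is an assumption, and the function name is hypothetical. The critical values 2.71 and 3.84 are those stated in the text.

```python
import numpy as np

def mcnemar_chi2(y_true, pred_a, pred_b, continuity=False):
    """McNemar's chi-square for two classifiers on the same test samples."""
    correct_a = np.asarray(pred_a) == np.asarray(y_true)
    correct_b = np.asarray(pred_b) == np.asarray(y_true)
    f12 = int(np.sum(correct_a & ~correct_b))  # A right, B wrong
    f21 = int(np.sum(~correct_a & correct_b))  # A wrong, B right
    if f12 + f21 == 0:
        return 0.0                             # no disagreements at all
    num = (abs(f12 - f21) - 1) ** 2 if continuity else (f12 - f21) ** 2
    return num / (f12 + f21)

# Chi-square critical values for 1 degree of freedom (from the text)
CRITICAL = {0.90: 2.71, 0.95: 3.84}

# Usage: A is always right; B is wrong on 10 of 100 samples
y_true = np.zeros(100, dtype=int)
pred_a = np.zeros(100, dtype=int)
pred_b = np.zeros(100, dtype=int)
pred_b[:10] = 1
score = mcnemar_chi2(y_true, pred_a, pred_b)
```

Only the discordant samples ($f_{12}$ and $f_{21}$) affect the score. Two classifiers with identical overall accuracy can therefore still differ significantly if they make their errors on different samples, and the reverse also holds.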

The classification maps allow a better visual assessment of the classification accuracies of all the fusion methods. The maps were therefore generated by applying the trained classifier models to the whole scene, and for each fusion method, the map of the classifier with the highest classification accuracy is shown. In Fig. 5, the classification maps for TSX VH, TSX VV, and RapidEye are given, obtained with the training models in Table 4. Because the SAR images are heavily contaminated with speckle noise, their whole-scene classification results are relatively noisy. In contrast, the whole-scene classification map of the RapidEye data shows mostly homogeneous regions, although some boundary regions are lost.

Fig. 5 Classification maps for raw images: (a) TSX VH (SVM-lin), (b) TSX VV (SVM-rbf), and (c) RapidEye (SVM-rbf).

The classification maps for the single-polarization fused images are shown in Fig. 6. The first column of Fig. 6 provides the results of the two ASMF variants for the MS image with different SAR polarizations, the second column shows the results of both BDF variants, and the third column presents the results of the EF and HPF fusion methods. The maps show that fusing the MS image with the VH polarized SAR data produces more salt-and-pepper-like labeling in all the fusion methods. Visual inspection of Fig. 6 also shows that BDF-type fusion causes deficiencies in the boundary regions. Moreover, EF spreads the fused features spatially, so the resulting labels are strongly affected by the neighboring labels.

Fig. 6 Classification maps for single polarization fused images: (a) ASMF-I_TSX VH (SVM-rbf), (b) BDF-I_TSX VH (SVM-rbf), (c) EF_TSX VH (RF), (d) ASMF-II_TSX VH (9-NN), (e) BDF-I_TSX VV (RF), (f) EF_TSX VV (SVM-rbf), (g) ASMF-I_TSX VV (SVM-rbf), (h) BDF-II_TSX VH (SVM-rbf), (i) HPF_TSX VH (SVM-rbf), (j) ASMF-II_TSX VV (SVM-rbf), (k) BDF-II_TSX VV (SVM-rbf), and (l) HPF_TSX VV (SVM-rbf).

In Fig. 7, the classification maps for the layer-stacked fused images of all the SAR polarizations are presented. The first column of Fig. 7 provides the results for the layer-stacked ASMF fusion images, the second column shows those for the layer-stacked BDF fusion images, and the third column gives the classification maps for the EF and HPF layer-stacked images.

Fig. 7 Classification maps for fused and layer-stacked images: (a) ASMF-I_TSX VV-VH (SVM-rbf), (b) BDF-I_TSX VV-VH (SVM-poly), (c) EF_TSX VV-VH (SVM-rbf), (d) ASMF-II_TSX VV-VH (1-NN), (e) BDF-II_TSX VV-VH (SVM-poly), and (f) HPF_TSX VV-VH (SVM-rbf).

In Turkey, agricultural statistics such as yield and acreage are collected by the local technical staff of the Ministry of Agriculture, based on farmers’ declarations. The government gives subsidies to farmers based on the declared crop types, yield, and acreage, so these data can be unreliable because of false declarations. Therefore, environmental decision makers and local authorities would benefit from detailed information on crop patterns for the strategic planning and sustainable management of agricultural resources. Although the RapidEye data alone are quite successful, TerraSAR-X still contributes to land use/cover classification. This indicates that when RapidEye data are not available (e.g., because of cloud cover or limited temporal resolution), fusing TerraSAR-X with other optical imagery may still be considered to achieve higher land use/cover classification accuracy.
5. Conclusion

In this study, the influence of fusion techniques for dual-polarized SAR and MS images on classification performance was examined. Several fusion techniques were applied in the experiments using dual-polarized X-band TerraSAR-X microwave data and RapidEye MS optical data of the Menemen Plain (Izmir). Classification performance was investigated comparatively using the SVM, RF, k-NN, and MLC methods. The study provides insight into how the interoperability of multisensor data can improve on the results of individual sensors for land use/cover monitoring. Although not all fusion methods improved the classification results with single-polarization SAR data, the study provides promising results for fusing dual-polarization SAR data with optical data to map land use/cover types. The results also confirm that the fused images of dual-polarization SAR and MS data yielded higher classification accuracies than both the single-polarization SAR fused images and the original images. Different quality metrics may give different results, which can lead to misinterpretation; ranking over several quality metrics is more efficient than using them individually. The ranking method could be used with any other dataset and region, which makes it broadly applicable to the assessment of fusion quality. A single fusion technique is inadequate for improving image quality for land use mapping, so different image fusion techniques should be compared. To exploit the contribution of SAR characteristics to MS images, we recommend image fusion approaches that are developed specifically to combine radar and MS datasets.
In this study, both single-polarization SAR fused images and stacks of dual-polarization fused images were used to investigate the performance of the individual polarizations (VV and VH fused images separately) and of the dual-polarization data (VV and VH fused images together). The stack of fused dual-polarization SAR data gave better classification accuracies than both the original MS RapidEye data and the single-polarization SAR fused data. We suggest that quality metrics should not be the only means of interpreting fused images. Classification should be applied to all fused images, because an image that performs worse in the statistical analysis can still give the highest classification accuracy. Although the classification accuracies of the fused images are slightly lower than that of RapidEye (except for the result given in Table 5), the VV-polarized images gave higher accuracies than the VH-polarized images. For this dataset over an agriculture-dominated area, some image fusion methods performed better than others and improved the results. The selection of both the fusion approach and the classification method can therefore play an important role. Although we suggest this methodology for other study areas, it has not yet been generalized to other applications. In future research, we will investigate merging fully polarimetric SAR data with optical data to explore the contribution of polarimetry to other remote sensing applications. We also plan to test these fusion algorithms over other, topographically heterogeneous areas to determine the contributions of the fusion techniques.

References

C. Pohl and J. L. Van Genderen, “Review article multisensor image fusion in remote sensing: concepts, methods and applications,” Int. J. Remote Sens. 19(5), 823–854 (1998). http://dx.doi.org/10.1080/014311698215748

D. Lu et al., “A comparison of multisensor integration methods for land cover classification in the Brazilian Amazon,” GISci. Remote Sens. 48(3), 345–370 (2011). http://dx.doi.org/10.2747/1548-1603.48.3.345

A. Ghosh and P. K. Joshi, “Assessment of pan-sharpened very high-resolution WorldView-2 images,” Int. J. Remote Sens. 34(23), 8336–8359 (2013). http://dx.doi.org/10.1080/01431161.2013.838706

M. Ehlers et al., “Multi-sensor image fusion for pansharpening in remote sensing,” Int. J. Image Data Fusion 1(1), 25–45 (2010). http://dx.doi.org/10.1080/19479830903561985

S. Abdikan and F. B. Sanli, “Comparison of different fusion algorithms in urban and agricultural areas using SAR (PALSAR and RADARSAT) and optical (SPOT) images,” Bol. Ciênc. Geodésicas 18, 509–531 (2012). http://dx.doi.org/10.1590/S1982-21702012000400001

K. Rokni et al., “A new approach for surface water change detection: integration of pixel level image fusion and image classification techniques,” Int. J. Appl. Earth Obs. Geoinf. 34, 226–234 (2015). http://dx.doi.org/10.1016/j.jag.2014.08.014

L. Alparone et al., “Landsat ETM+ and SAR image fusion based on generalized intensity modulation,” IEEE Trans. Geosci. Remote Sens. 42, 2832–2839 (2004). http://dx.doi.org/10.1109/TGRS.2004.838344

C. Berger, S. Hese and C. Schmullius, “Fusion of high resolution SAR data and multispectral imagery at pixel level: a statistical comparison,” Ghent, Belgium, pp. 245–268 (2010).

S. Abdikan et al., “A comparative data-fusion analysis of multi-sensor satellite images,” Int. J. Digital Earth 7(8), 671–687 (2014). http://dx.doi.org/10.1080/17538947.2012.748846

F. B. Sanli et al., “Fusion of TerraSAR-X and RapidEye data: a quality analysis,” ISPRS-Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. XL-7/W2(2), 27–30 (2013). http://dx.doi.org/10.5194/isprsarchives-XL-7-W2-27-2013

Y. Zhang, “An automated, information preserved and computational efficient approach to adjustable SAR-MS fusion,” in Proc. IEEE 2011 Int. Conf. on Geoscience and Remote Sensing Symposium (2011).

V. Karathanassi, P. Kolokousis and S. Ioannidou, “A comparison study on fusion methods using evaluation indicators,” Int. J. Remote Sens. 28(10), 2309–2341 (2007). http://dx.doi.org/10.1080/01431160600606890

M. Choi, “A new intensity-hue-saturation fusion approach to image fusion with a tradeoff parameter,” IEEE Trans. Geosci. Remote Sens. 44, 1672–1682 (2006). http://dx.doi.org/10.1109/TGRS.2006.869923

Z. Wang and A. C. Bovik, “A universal image quality index,” IEEE Signal Process. Lett. 9, 81–84 (2002). http://dx.doi.org/10.1109/97.995823

L. Wald, “Quality of high resolution synthesised images: is there a simple criterion?,” in Proc. Third Conf. on Fusion of Earth, pp. 99–103, SEE/URISCA, Sophia Antipolis, France (2000).

D. Fasbender, J. Radoux and P. Bogaert, “Bayesian data fusion for adaptable image pansharpening,” IEEE Trans. Geosci. Remote Sens. 46, 1847–1857 (2008). http://dx.doi.org/10.1109/TGRS.2008.917131

C. Lardeux et al., “Use of the SVM classification with polarimetric SAR data for land use cartography,” in IEEE Int. Conf. on Geoscience and Remote Sensing Symposium, pp. 493–496 (2006).

H. Huang, J. Legarsky and M. Othman, “Land-cover classification using Radarsat and Landsat imagery for St. Louis, Missouri,” Photogramm. Eng. Remote Sens. 73, 37–43 (2007). http://dx.doi.org/10.14358/PERS.73.1.37

B. Waske and S. van der Linden, “Classifying multilevel imagery from SAR and optical sensors by decision fusion,” IEEE Trans. Geosci. Remote Sens. 46, 1457–1466 (2008). http://dx.doi.org/10.1109/TGRS.2008.916089

V. F. Rodriguez-Galiano et al., “An assessment of the effectiveness of a random forest classifier for land-cover classification,” ISPRS J. Photogramm. Remote Sens. 67, 93–104 (2012). http://dx.doi.org/10.1016/j.isprsjprs.2011.11.002

T. Kavzoglu and I. Colkesen, “A kernel functions analysis for support vector machines for land cover classification,” Int. J. Appl. Earth Obs. Geoinf. 11(5), 352–359 (2009). http://dx.doi.org/10.1016/j.jag.2009.06.002

M. Pal and P. M. Mather, “Support vector machines for classification in remote sensing,” Int. J. Remote Sens. 26(5), 1007–1011 (2005). http://dx.doi.org/10.1080/01431160512331314083

B. Waske and J. A. Benediktsson, “Fusion of support vector machines for classification of multisensor data,” IEEE Trans. Geosci. Remote Sens. 45, 3858–3866 (2007). http://dx.doi.org/10.1109/TGRS.2007.898446

V. N. Vapnik, Statistical Learning Theory, 1st ed., Wiley-Interscience, New York (1998).

G. Camps-Valls and L. Bruzzone, “Kernel-based methods for hyperspectral image classification,” IEEE Trans. Geosci. Remote Sens. 43, 1351–1362 (2005). http://dx.doi.org/10.1109/TGRS.2005.846154

B. Schölkopf and A. J. Smola, Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond, Adaptive Computation and Machine Learning, The MIT Press, Cambridge, Massachusetts (2002).

L. Breiman, “Random forests,” Mach. Learn. 45(1), 5–32 (2001). http://dx.doi.org/10.1023/A:1010933404324

P. O. Gislason, J. A. Benediktsson and J. R. Sveinsson, “Random forests for land cover classification,” Pattern Recognit. Lett. 27(4), 294–300 (2006). http://dx.doi.org/10.1016/j.patrec.2005.08.011

H. Franco-Lopez, A. R. Ek and M. E. Bauer, “Estimation and mapping of forest stand density, volume, and cover type using the k-nearest neighbors method,” Remote Sens. Environ. 77(3), 251–274 (2001). http://dx.doi.org/10.1016/S0034-4257(01)00209-7

J. R. Otukei and T. Blaschke, “Land cover change assessment using decision trees, support vector machines and maximum likelihood classification algorithms,” Int. J. Appl. Earth Obs. Geoinf. 12(Suppl. 1), S27–S31 (2010). http://dx.doi.org/10.1016/j.jag.2009.11.002

P. M. Mather and M. Koch, Computer Processing of Remotely-Sensed Images: An Introduction, Wiley-Blackwell, Chichester, West Sussex (2011).

G. M. Foody, “Thematic map comparison: evaluating the statistical significance of differences in classification accuracy,” Photogramm. Eng. Remote Sens. 70, 627–633 (2004). http://dx.doi.org/10.14358/PERS.70.5.627

Biography

Saygin Abdikan received his MSc and PhD degrees in the geomatics engineering, remote sensing, and GIS program from Yildiz Technical University (YTU), Istanbul, Turkey, in 2007 and 2013, respectively. He is currently an assistant professor in the Department of Geomatics Engineering at Bulent Ecevit University. His main research activities are in the areas of synthetic aperture radar (SAR), image fusion, SAR interferometry, and digital image processing.

Gokhan Bilgin received his BSc, MSc, and PhD degrees in electronics and telecommunication engineering from YTU, Istanbul, Turkey, in 1999, 2003, and 2009, respectively. He worked as a postdoctoral researcher at IUPUI, Indianapolis, Indiana, USA. He is currently an assistant professor in the Department of Computer Engineering at YTU. His research interests are in the areas of image and signal processing, machine learning, and pattern recognition with applications to biomedical engineering and remote sensing.

Fusun Balik Sanli received her MSc degree from ITC, The Netherlands, in 2000, and her PhD in the geomatics engineering, remote sensing, and GIS program from YTU, Istanbul, Turkey, in 2004. She is currently a member of the academic staff in the Photogrammetry Division of the Geomatic Engineering Department, YTU. Her research includes optical and radar remote sensing, image fusion, and information extraction from SAR and optical images.

Erkan Uslu received his MS and PhD degrees in computer engineering from YTU, Turkey, in 2007 and 2013, respectively. He has been working as a research assistant in the Computer Engineering Department at YTU since 2006. His research and working fields are image processing, remote sensing, and robotics.

Mustafa Ustuner received his MSc degree in the geomatics engineering, remote sensing, and GIS program from YTU, Istanbul, Turkey, in 2013. He is currently a research assistant in the Department of Geomatics Engineering, YTU, Turkey. His research interests include land use/cover classification, image processing, and machine learning in remote sensing.
