
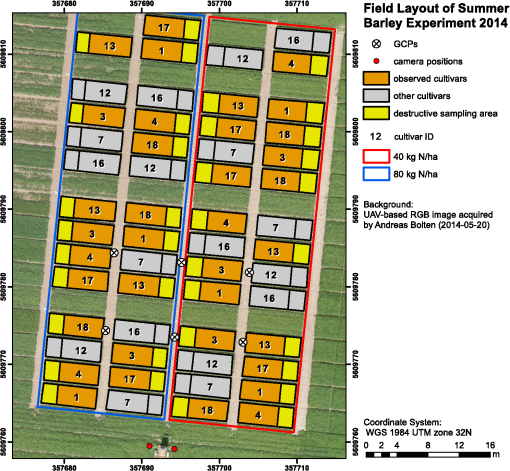

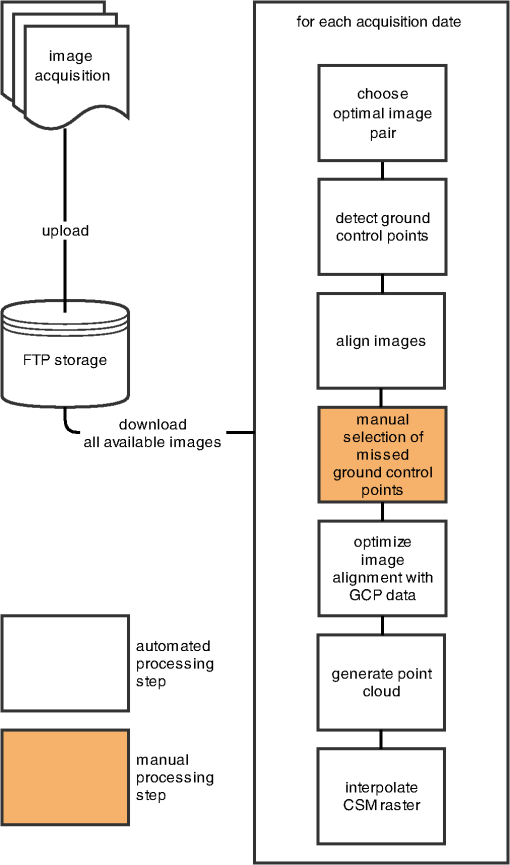

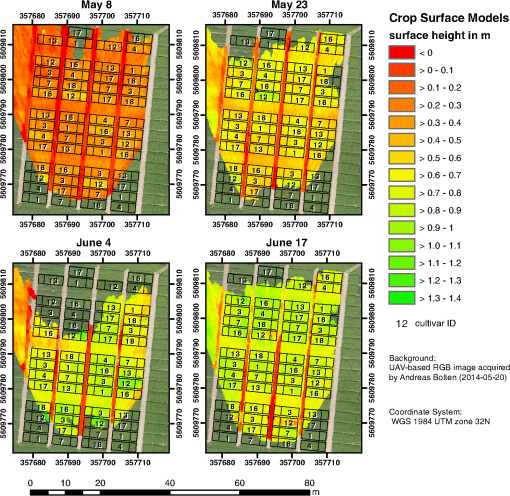

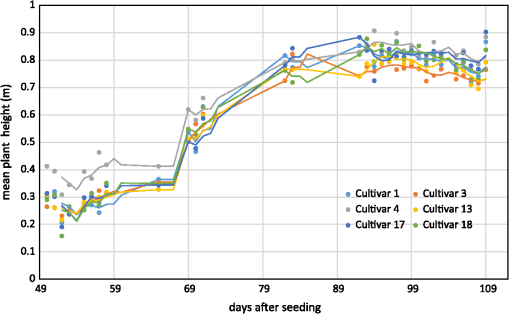

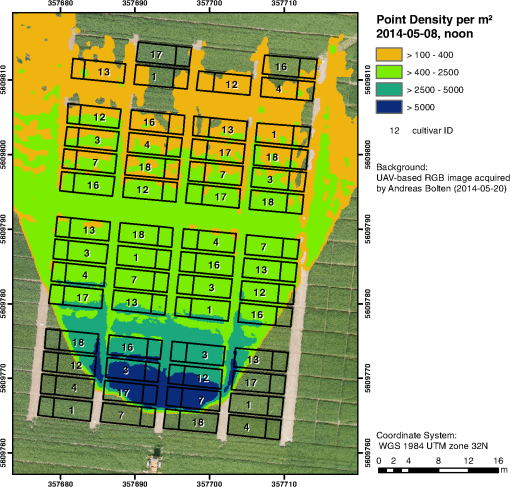

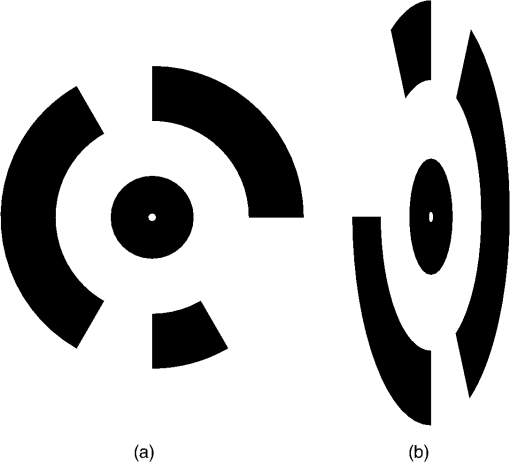

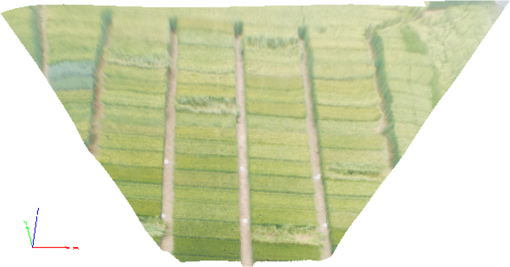

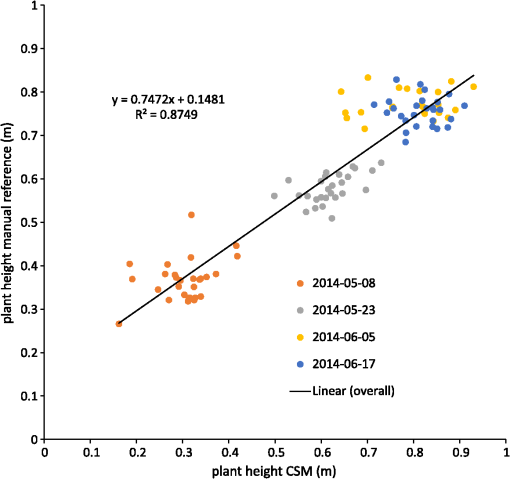

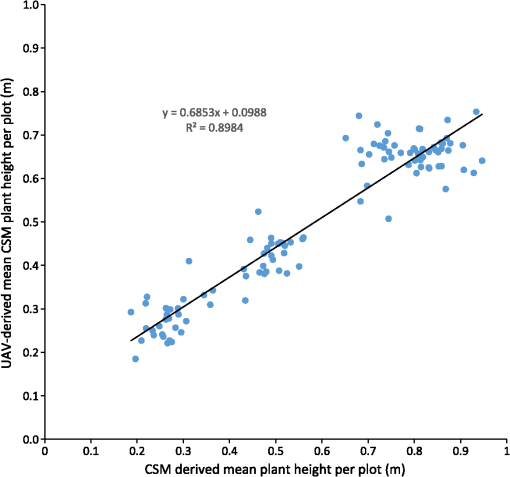

1. Introduction

Monitoring growth, vitality, and stress of crops is a key task in precision agriculture.1 This can be realized by nondestructive monitoring of structural crop parameters, such as plant height and growth, and of physiological parameters, such as chlorophyll or water content. The structural parameters can be monitored using crop surface models (CSMs)2–4 and have been used for biomass estimation at the field level,5,6 while monitoring of physiological parameters is made possible by multi- and hyperspectral or microwave sensors.7–11 Combined approaches exploiting the synergies between structural and physiological crop parameter sensing have been investigated successfully.6,12–14

In general, remote sensing-based crop monitoring is a very wide research field. A large variety of sensor types is used across different scale levels to benefit precision agriculture,1 from spaceborne, airborne, and terrestrial platforms. The generation of multitemporal CSMs is an analysis approach made possible by technologies that allow the retrieval of very high resolution three-dimensional (3-D) data. CSMs are 3-D (more precisely 2.5-D, because only one value is stored per coordinate pair) raster representations of crop canopies. The concept of CSMs was introduced by Hoffmeister et al.15 for the multitemporal monitoring of crop growth patterns on a field scale across phenological stages using light detection and ranging (LiDAR) data. Crop growth between dates can be derived by subtracting the plant heights of successive dates. CSMs have been derived not only from LiDAR data4,16,17 but also from airborne red, green, blue (RGB) imagery.2,5,13 Hyperspectral CSMs have also been derived from hyperspectral snapshot cameras.18

LiDAR is a technology that allows the detection of object points in 3-D space by measuring the reflection times and angles of emitted laser pulses.
It is used from airborne platforms [airborne laser scanning (ALS)], terrestrial platforms [terrestrial laser scanning (TLS)], and mobile platforms [mobile laser scanning (MLS)] for diverse applications. TLS systems typically achieve subcentimeter accuracies. Examples of LiDAR in plant monitoring include plant height monitoring and biomass estimation using TLS,4,16,17 forest inventory using ALS,19 tree crown architecture characterization20 and leaf area density modeling21 using TLS, and canopy volume measurements in olive orchards using MLS.22 LiDAR is also combined with hyperspectral remote sensing: full-waveform hyperspectral laser scanning is used to estimate leaf-level chlorophyll,9 nitrogen, and carotenoid content.23

Structure-from-motion (SfM) algorithms derive 3-D information from multiple overlapping RGB images. Ground-based imagery has been used for rapid phenotyping,24 and aerial imagery has been used to monitor plant height2 and to estimate crop biomass,5 sometimes in combination with RGB-based vegetation indices.13 Such indices can also be derived from single RGB images taken from an elevated position.25 Other examples of plant monitoring using RGB images include image analysis to measure crop height from single images captured from stationary terrestrial positions,26 crop and weed area monitoring from a tractor-mounted camera,27 and the evaluation of seasonal changes in aboveground green biomass in grassland.28 Hyper- and multispectral sensors on both terrestrial and airborne platforms facilitate new methods for biomass prediction29–31 and for monitoring plant nutrition status and stress through vegetation indices.32–36 From an airborne platform, they are also used to measure sun-induced chlorophyll fluorescence as a measure of photosynthetic activity.37 New sensors are being introduced at a rapid pace. Full-frame hyperspectral sensors mountable on unmanned aerial vehicles (UAVs) are now available for crop monitoring38 and can be used to generate 3-D hyperspectral CSMs.14 Such 3-D hyperspectral information can also be generated by systems combining traditional push-broom hyperspectral sensors with RGB cameras.39 Thermal imaging sensors are used from airborne platforms to monitor crop water stress.40 All these remote sensing technologies are under investigation to improve crop and plant monitoring.

A special case in crop monitoring is phenotyping.41 Crop breeders face the challenge of investigating new genotypes under field conditions (phenotypes) to improve crop varieties. In this context, systems for high-throughput field phenotyping have been established in the last decade to efficiently evaluate new crop breeds and meet the needed yield improvements.42 Recent approaches working on a field scale include vehicle-based solutions carrying multiple sensors, such as sensor buggies43 or rider sprayers.44 Fixed field-scale installations, such as the SpiderCam field phenotyping platform,45 allow multiple sensors to be positioned over individual plots or plants. These fixed field-scale approaches are cost-intensive and/or technically not transferable to multihectare crop breeding environments.

Therefore, the overall objective of this research is the improvement and automation of the low-cost 3-D monitoring system for multiple plots on the field scale introduced by Brocks and Bareth46 and its evaluation using (i) extensive manual measurements and (ii) accurate UAV-derived CSMs. An almost fully automated system for CSM generation for continuous crop growth monitoring is designed, developed, and implemented using oblique imagery acquired from an elevated, static terrestrial platform. This new semiautomated 3-D monitoring system combines consumer-grade hardware running custom image acquisition software with self-developed scripts that semiautomate the processing performed by existing photogrammetric software.
To our knowledge, no comparable system using SfM for stationary 3-D monitoring exists. An earlier system for crop monitoring from an elevated terrestrial platform was developed by Lilienthal;47 that system, however, does not retrieve 3-D information. Compared to traditional crop monitoring approaches, the semiautomated system presented here allows monitoring at a much higher temporal frequency and with a very high spatial resolution at lower cost. Hardware costs are lower, data acquisition is less labor-intensive, and processing is semiautomated.

2. Methods and Data

2.1. Structure-from-Motion and Agisoft PhotoScan

SfM48 and multiview-stereo (MVS)49 are techniques for deriving 3-D information from overlapping 2-D imagery. They are implemented in several software packages, e.g., PhotoScan Professional version 1.1.6 by Agisoft (St. Petersburg, Russia), which is used in this study. SfM is a widely applied technique in structural and surface analysis. Besides plant monitoring,2,5,13,14,39 it is used in geoscience applications,50 such as lava flow51 and volcano dome52 monitoring and topography modeling,53 as well as in archeology.54 SfM reconstructs the 3-D geometry of a scene together with the position and orientation of the cameras. The result is a sparse point cloud consisting of the feature points detected while estimating the camera positions, together with the camera position for each image. The computation of a dense 3-D point cloud follows, using different classes of algorithms, e.g., MVS algorithms49 or pair-wise depth map computation.55 PhotoScan matches features across the images by detecting points that are stable under viewpoint and lighting variations and generates a descriptor for each point from its local neighborhood.
That descriptor is later used to detect the corresponding points across the images,55 an approach similar to the well-known scale-invariant feature transform (SIFT) algorithm.56 The internal and external camera orientation parameters are then first estimated using a greedy algorithm and subsequently refined using a bundle-adjustment algorithm.57

2.2. Data Acquisition

2.2.1. Study site

This study was conducted on a summer barley field experiment located at Campus Klein-Altendorf (N 50°37′27″, E 6°59′16″), which is part of the Faculty of Agriculture of the University of Bonn. The field experiment was set up by the CROP.SENSe.net58 interdisciplinary research network, which worked toward nondestructively analyzing and screening plant phenotype and crop status, such as nutrients and stress. In this field experiment, nine barley cultivars were cultivated in three repetitions with different nitrogen treatments (40 and ). In total, there were 54 randomized plots with a- seeding density and a row spacing of 0.104 m. The seeding date was March 13, 2014. All plots were divided into two parts: a nondestructive measuring area and an area for destructive biomass sampling. Figure 1 shows the layout of the field experiment. Destructive biomass sampling and manual plant height measurements were carried out for a selection of the nine cultivars at five dates spaced evenly throughout the growing period of summer barley: April 23, May 8, May 22, June 5, and June 17. The maximum standing height of the leaves or ears was measured for 10 plants per plot with a precision of 1 cm and averaged.

2.2.2. Data acquisition hardware

For the image acquisition, we chose the EK-GC100 Samsung Galaxy Camera59 smart camera.
It is a consumer-grade smart camera featuring a 4.1- to 86.1-mm focal length (23 to 483 mm, 35-mm equivalent) lens with a 21× optical zoom, a 1/2.3″ BSI CMOS sensor with a maximum resolution of 16 megapixels, ISO settings from 100 to 3200, shutter speeds from 16 s to 1/2000 s, and an aperture of up to f/8. Wireless local area and mobile network connectivity, in combination with the Android 4.1 operating system, allowed the development of custom image acquisition applications. To acquire two images simultaneously, two cameras of the same model were used.

2.2.3. Data acquisition software

To automate the image acquisition, we developed a custom Android application. It automatically acquired images at user-defined settings and transferred them to a server using the File Transfer Protocol (FTP) for further processing. The configurable parameters were the image acquisition rate and starting time (e.g., every 6 h from the current time), the resolution, the ISO setting, and a filename prefix to uniquely identify the camera that acquired the images. Image acquisition was not directly synchronized between the cameras but relied on each camera's internal clock. To account for differing lighting conditions, to keep a constant depth of field, and to achieve very good synchronization between the cameras, certain camera parameters were fixed: for each image acquisition time, three images were taken at three different exposure times (1/25, 1/50, and 1/100 s) with a fixed aperture. Measures were taken to minimize power consumption. The application started a timer that woke the camera for image acquisition and transferred the images to an FTP server over a wireless local area network. When the file transfer was finished, the camera returned to stand-by mode. The timer activated the camera only during daylight hours.

2.2.4. Monitoring station

The cameras were placed on a hydraulic hoisting platform at a height of 10 m.
Power was provided to the cameras by a USB power pack that was kept charged by a solar panel. The distance between the cameras was set to 3.6 m to maintain a 1:6 base-to-distance ratio relative to the center of the observed field, as suggested in the literature.60 The setup as placed on the experimental field is shown in Fig. 2. The hoisting platform was positioned to give the best results for the plots from the front third to the center of the observed field, which were of central interest.

2.2.5. Ground reference

To generate georeferenced CSMs, ground control points (GCPs) needed to be present in the acquired imagery. Six GCPs were placed in the experimental field and measured using the highly accurate TopCon (Tokyo, Japan) HiPer Pro DGPS system,61 cf. Fig. 1. The GCP targets were sized large enough to be detected automatically by standard algorithms.62 PhotoScan supports the automated detection of GCPs in the imagery. In theory, the standard feature detection algorithms should also have worked on circular GCP targets viewed at oblique angles, which appear as ellipses.62 However, preliminary tests revealed that PhotoScan failed to detect them. Field management did not allow an elevated placement of the GCPs that would have angled the targets toward the cameras, so another approach was chosen to enable automated GCP detection. Distorting the GCP targets placed flat on the ground allowed automated detection in PhotoScan, cf. Fig. 3. Because each GCP had a unique pattern of black-and-white parts, it could be identified and matched with its real-world coordinates. The GCP targets were placed in the area of the plots of central interest, in the third of the field closest to the cameras.

Fig. 3 Sample GCPs: (a) undistorted, used for nadir view from UAV and (b) appropriately distorted and magnified, used for oblique view.
To compute this distortion, the 3-D geometry of the image acquisition setup was reconstructed using the open source geometry software GeoGebra.63 For this reconstruction, the camera positions were measured using a Trimble (Sunnyvale, California) M3 Total Station,64 whose own position was determined using the TopCon HiPer Pro DGPS system. To calculate the distortion, a square touching the ground at one edge was constructed perpendicular to the axis connecting the GCP position with the midpoint between the two camera positions. This square was then projected onto the ground surface. The resulting trapezoid encapsulated how the circular GCP image needed to be distorted. The actual distortion of the circular GCP targets was performed with the open source vector graphics software Inkscape version 0.91 (Ref. 65). A trapezoid with the dimensions determined in the GeoGebra scene was constructed for each GCP. The vector graphics file for the corresponding GCP was then loaded and distorted into the trapezoid shape using the perspective distortion tool. To interpolate a ground elevation raster surface in ArcGIS, 56 additional points throughout the experimental field were measured using the Trimble M3 Total Station. From the elevations of these points, a ground elevation raster with a cell size of 1 cm was interpolated. A triangulated irregular network (TIN) was first constructed from the points, and the raster surface was then obtained by linear interpolation within the TIN triangles.

2.2.6. Unmanned aerial vehicle-based reference imagery

For reference purposes, imagery was also acquired with a Panasonic Lumix GX1 digital camera with a fixed 20-mm lens mounted on a UAV platform (the multirotor MK-Oktokopter). This system was flown at four dates in the growing period (May 6, May 20, June 3, and June 12).
Images were acquired from an elevation of 50 m with shutter and aperture settings adapted to the lighting conditions (shutter times varied from 1/800 to 1/4000 s, while the aperture was set to one of two values). The acquired images were processed using Agisoft PhotoScan and ArcGIS according to the workflow described by Bendig et al.2 Mean plant heights per plot were calculated from the multitemporal UAV-based CSMs.

2.2.7. Theoretical accuracy considerations

The maximum achievable accuracy from a stereo image pair can be estimated from the known properties of the scene and the hardware characteristics of the camera.62 Because the images in this setup were captured at an oblique angle, the image scale changes within the images, so the accuracy in the x-, y-, and z-dimensions varied throughout the scene. The ground sampling distance (GSD) was calculated by multiplying the physical pixel size of the sensor by the image scale number, i.e., the camera-to-object distance divided by the camera's focal length (4.1 mm). It varied from 3.8 mm for the plots closest to the cameras (camera distance of 12 m), through 6.8 mm in the center of the field, to 17.4 mm for the plots farthest from the cameras (distance of 55 m). Assuming an image measurement accuracy of 0.3 to 0.5 pixels, the expected planimetric accuracy ranged between 1.14 and 1.9 mm in the plots closest to the cameras, between 2.04 and 3.4 mm in the center, and between 5.22 and 8.7 mm at the far edge of the field. Multiplying the planimetric accuracy by the camera distance and dividing by the base distance of 3.6 m yielded a depth accuracy of 3.8 to 6.3 mm in the front, 12.24 to 20.4 mm in the center, and 79.8 to 132.94 mm at the far edge of the field. Note that, because of the oblique imaging angle, this depth accuracy is the expected accuracy relative to the image axis, i.e., in the viewing direction, not relative to the z-axis of the object coordinate system.
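The error model above can be reproduced in a few lines of Python. This is a minimal sketch, not the authors' code. Two inputs are assumptions that the text does not state. The sensor pixel size of 1.3 µm is back-calculated from the reported GSD values (3.8 mm at 12 m and 17.4 mm at 55 m with f = 4.1 mm). The field-center distance of 21.6 m is inferred from the 3.6-m base and the 1:6 base-to-distance ratio.

```python
# Simple stereo error model from Sec. 2.2.7:
#   GSD          = pixel_size * distance / focal_length
#   planimetric  = GSD * image_accuracy_px
#   depth        = planimetric * distance / base   (along the viewing direction)
# ASSUMPTION: PIXEL_SIZE_M is back-calculated from the reported GSD values.
# ASSUMPTION: the 21.6-m center distance follows from the 1:6 base-to-distance ratio.

PIXEL_SIZE_M = 1.3e-6    # physical sensor pixel size (assumed, see above)
FOCAL_LENGTH_M = 4.1e-3  # focal length used in the study
BASE_M = 3.6             # distance between the two cameras


def gsd(distance_m: float) -> float:
    """Ground sampling distance: pixel size times image scale number."""
    return PIXEL_SIZE_M * distance_m / FOCAL_LENGTH_M


def planimetric_accuracy(distance_m: float, image_accuracy_px: float) -> float:
    """Planimetric accuracy: GSD times the image measurement accuracy in pixels."""
    return gsd(distance_m) * image_accuracy_px


def depth_accuracy(distance_m: float, image_accuracy_px: float) -> float:
    """Depth accuracy relative to the image axis: planimetric accuracy * distance / base."""
    return planimetric_accuracy(distance_m, image_accuracy_px) * distance_m / BASE_M


if __name__ == "__main__":
    for label, d in [("front", 12.0), ("center", 21.6), ("far edge", 55.0)]:
        low, high = (depth_accuracy(d, px) * 1000 for px in (0.3, 0.5))
        print(f"{label:9s} GSD={gsd(d) * 1000:5.1f} mm  depth {low:6.1f} to {high:6.1f} mm")
```

The sketch does not round the GSD before propagating it, so its depth values differ from the reported ones by at most a few tenths of a millimeter (e.g., about 133.2 mm instead of 132.94 mm at the far edge for 0.5 px).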
The accuracy along the z-axis of the object coordinate system is higher than that along the image axis. If the cameras were positioned at a higher elevation, so that the viewing angle was closer to nadir, the expected theoretical accuracy would vary less, because the camera distance would be less variable over the covered area.

2.3. Automated Image Processing Chain

To facilitate the automated generation of CSMs, an automated processing chain was developed. Figure 4 shows an overview of the processing chain. The first step was the automated image acquisition and transmission of the images to an FTP server, implemented in the image acquisition application running on the cameras. The rest of the processing chain ran on a desktop computer and was implemented as a set of scripts written in the Python programming language.66 PhotoScan provides a Python application programming interface (API) that allows scripts to automate the processing of images into dense point clouds or orthophotos.67 The dense point clouds were processed into CSMs in a separate Python script, because ArcGIS 10.3 and PhotoScan use different versions of Python: the PhotoScan part used Python 3.3 and the ArcGIS part used Python 2.7.

First, all images were downloaded from the FTP server. Then, each acquisition date was processed separately. For each image acquisition time (i.e., morning, noon, and evening of each day), the optimal image pair for CSM generation had to be selected. For our purposes, we defined “optimal” to mean “contains the most information.” To make this determination, we calculated each image's Shannon entropy H,68 a measure of the information contained in a signal, according to

$$H = -\sum_{i} p_i \log_2 p_i,$$

where $p_i$ is the probability of the $i$th sample of the signal.
We treated the image histogram as the signal, i.e., the list of pixel counts for each of the 256 intensity values of each color channel. This allowed the calculation to be performed by a custom Python function using the Python library Pillow.69 We then selected for further processing the image pair with the highest sum of both images' Shannon entropies. Next, PhotoScan's GCP detection algorithm was run on these two images, marking the detected GCPs in the images. The images were then aligned using the alignCamera() function of PhotoScan's Python API. This resulted in a sparse point cloud containing the camera positions and the detected feature points. In the next step, the image alignment was to be optimized using the real-world GCP coordinates measured with the DGPS. Unfortunately, the GCP detection algorithm did not always detect all GCPs correctly; for most image pairs, the three GCPs placed farthest from the cameras were not detected correctly. GCPs that were not detected at all or were detected incorrectly therefore needed to be marked manually in the PhotoScan software. This break in the automated chain necessitated splitting the processing script into two scripts. All missed GCPs for all selected image pairs and acquisition dates were marked manually between the two script runs. After the manual GCP selection, the second script optimized the image alignment as explained above. In the final part of the PhotoScan script, the dense point cloud was built at the three quality settings medium, high, and ultra. The “ultra” setting uses the original input images, whereas “high” and “medium” downscale the images to half and quarter size, respectively. Finally, the point cloud was exported to a comma-separated values (.CSV) file.
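The entropy-based selection of the image pair can be sketched as follows. This is a minimal illustration, not the authors' function. It assumes the histogram layout that Pillow's `Image.histogram()` returns, with 256 bins per band concatenated, for example `Image.open(path).convert("RGB").histogram()`. The text does not specify how candidate pairs were formed from the three exposures per camera, so the candidate dictionary and its keys are hypothetical. The base-2 logarithm matches the equation above, and the choice of base does not change which pair is selected.

```python
import math
from typing import Dict, Sequence, Tuple


def histogram_entropy(histogram: Sequence[int]) -> float:
    """Shannon entropy H = -sum(p_i * log2(p_i)) of a histogram treated as the signal."""
    total = sum(histogram)
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in histogram:
        if count:  # empty bins contribute nothing (p * log p -> 0)
            p = count / total
            entropy -= p * math.log2(p)
    return entropy


def select_best_pair(
    candidates: Dict[str, Tuple[Sequence[int], Sequence[int]]]
) -> str:
    """Return the key of the candidate pair whose summed entropy is highest.

    `candidates` maps a hypothetical pair label (e.g., an exposure time) to the
    histograms of the left and right camera images.
    """
    return max(
        candidates,
        key=lambda k: histogram_entropy(candidates[k][0]) + histogram_entropy(candidates[k][1]),
    )
```

A saturated image, with all pixels in one bin, has an entropy of zero. An image whose pixels spread evenly over the bins scores highest, so well-exposed pairs win over over- or underexposed ones.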
The next part of the processing chain was implemented as a Python script using the “arcpy” site-package, which allows ArcGIS processing to be scripted in Python.70 Each acquisition date was again processed consecutively. The .CSV file containing the dense point cloud generated in the previous step was converted into a feature class of 3-D points using the ASCII3DToFeatureClass function of the arcpy 3-D Analyst toolset. Next, a raster surface with a cell size of 1 cm was interpolated from the dense point cloud by inverse distance weighting using the arcpy.Idw_3d function. For each raster cell, the closest 12 points within a distance of 0.5 m were used to calculate that cell's elevation, so that areas with a very low point density are not misrepresented in the resulting raster surface. This raster surface represents the height of the canopy above sea level. The CSM representing absolute plant height was calculated from it by subtracting the bare-ground elevation raster with the Spatial Analyst function Minus. Additionally, the script calculated zonal statistics per plot for the statistical analysis of the data: the mean, minimum, and maximum elevation of the generated CSM and the mean point density per m². This second script mainly consists of standard GIS processing tasks and can thus also be implemented in a different GIS. To verify this, we performed this part of the analysis in QGIS for some selected dates and obtained comparable results.

3. Results

3.1. Image Acquisition

Images were successfully acquired at 98 acquisition times from May 2 to June 30. For 95 of these acquisitions, CSMs were successfully generated. In the other three cases, raindrops on the waterproof casing of the cameras prevented successful image alignment and thus also CSM generation.
Uninterrupted monitoring was not achieved because the USB power pack did not have sufficient capacity to bridge weather conditions that prevented it from being charged by the solar panel. Figure 5 shows four of the generated CSMs, each corresponding to a date on which manual plant height and biomass measurements were undertaken. Fig. 6 shows an interactive 3-D representation of the CSM for June 17, noon, which can be zoomed, panned, and rotated. The areas with negative elevation between the plots are the bare-soil pathways through the field, which were compacted by people walking on them during the measurement campaigns. Differences between cultivars as well as within-plot variations of plant height can be clearly observed.

Fig. 6 Interactive 3-D version of the June 16 CSM, noon acquisition time.

Compared to a fully manual analysis of the acquired data, the semiautomated system substantially reduced the time the user spends on data analysis. The only manual step was the selection of the GCPs that were not detected automatically in each image pair. All other processing was performed without user interaction.

3.2. Statistical Analysis of Generated CSMs

Of the three quality settings used for dense point cloud generation (ultra, high, and medium), the “high” setting was used in the statistical analysis of the results. The medium setting resulted in point densities that were too low (<100/m² in approximately half of the monitored plots, especially in the half of the field farthest from the camera positions). The “ultra” setting resulted in large gaps in the point clouds throughout the observed field. For the statistical analysis, all plots with an average point density of <100/m² were ignored.
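The GIS steps of Sec. 2.3 and the 100/m² density filter applied here can be sketched in plain Python. The sketch covers IDW interpolation from the 12 nearest points within 0.5 m and per-plot statistics. It is an illustration under stated assumptions, not the arcpy script used in the study. The IDW power of 2 is an assumption, because the text does not state it. The `Plot` rectangle is a hypothetical structure. As a simplification, the statistics are computed directly on height-normalized points, i.e., canopy elevation minus ground elevation, rather than on the interpolated CSM raster. The helper `finest_resolution` uses the rule of thumb that one point per raster cell requires a cell size of about 1/√density. This rule maps 100 points/m² to 10 cm and 400 points/m² to 5 cm.

```python
import math
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in meters

MIN_POINT_DENSITY = 100.0  # points per m^2; plots below this are ignored


def idw(x: float, y: float, points: List[Point], k: int = 12,
        radius: float = 0.5, power: float = 2.0) -> Optional[float]:
    """Inverse distance weighted z at (x, y) from the k nearest points within radius.

    ASSUMPTION: power = 2 (not stated in the study).
    Returns None (NoData) when no point lies within the search radius.
    """
    near = sorted((math.hypot(px - x, py - y), pz) for px, py, pz in points)
    near = [(d, z) for d, z in near if d <= radius][:k]
    if not near:
        return None
    if near[0][0] == 0.0:
        return near[0][1]  # cell center coincides with a point
    weights = [1.0 / d ** power for d, _ in near]
    return sum(w * z for w, (_, z) in zip(weights, near)) / sum(weights)


@dataclass
class Plot:
    """Hypothetical axis-aligned plot rectangle in projected coordinates (meters)."""
    plot_id: str
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    @property
    def area(self) -> float:
        return (self.xmax - self.xmin) * (self.ymax - self.ymin)

    def contains(self, x: float, y: float) -> bool:
        return self.xmin <= x < self.xmax and self.ymin <= y < self.ymax


def plot_statistics(plot: Plot, points: List[Point]) -> Optional[Dict[str, float]]:
    """Mean/min/max plant height and point density per m^2 for one plot.

    `points` hold plant height (canopy elevation minus ground elevation) as z.
    Returns None if the plot falls below the density threshold.
    """
    heights = [h for x, y, h in points if plot.contains(x, y)]
    density = len(heights) / plot.area
    if not heights or density < MIN_POINT_DENSITY:
        return None
    return {"mean": mean(heights), "min": min(heights),
            "max": max(heights), "density_per_m2": density}


def finest_resolution(density_per_m2: float) -> float:
    """Approximate raster cell size (m) supported by a point density: 1 / sqrt(density)."""
    return 1.0 / math.sqrt(density_per_m2)
```

The 0.5-m search radius in `idw` causes cells without nearby points to stay empty instead of being filled with values extrapolated from distant points, which mirrors the intent described in Sec. 2.3.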
To ensure that a high-quality CSM was available for comparison with the manual plant height measurements, the CSMs generated on three days were considered for each manual measurement date: the day before the manual measurement, the day of the measurement, and the day after. For each of the three image acquisition times per day (morning, noon, and afternoon), the CSM with the highest number of plots with an average point density >100/m² was selected. Table 1 shows an overview of the number of plots with a point density >100/m² for these CSMs and dates. On this basis, we selected which daily CSM out of the three image acquisitions per day to use for the linear regression of manually measured against CSM-derived plant height. Table 2 shows the coefficients of determination ($R^2$), the root-mean-square error (RMSE), and Willmott's refined index of model performance ($d_r$)71 for the three linear regressions that were performed. The noon CSMs performed better than the morning and evening CSMs. For this acquisition time, the RMSE reached its lowest values, and Willmott's index of model performance and the coefficient of determination were highest. Table 1 Number of plots with mean point density >100/m2 for relevant CSMs; bold values represent the selected CSMs.

Note: *No CSM available.

Table 2 Statistics for the linear regression of CSM-derived and manual height per plot for the three acquisition times of the selected CSMs.
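The three statistics reported in Table 2 can be computed as sketched below. This sketch makes two assumptions. First, RMSE is taken between the paired CSM-derived (predicted) and manual (observed) heights, because the text does not say whether it refers to regression residuals. Second, Willmott's refined index uses the scaling constant c = 2 from Willmott et al.'s definition. For a simple linear regression with an intercept, $R^2$ equals the squared Pearson correlation.

```python
import math
from typing import Sequence


def r_squared(x: Sequence[float], y: Sequence[float]) -> float:
    """Coefficient of determination of a simple linear regression (squared Pearson r)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("R^2 is undefined for constant input")
    return sxy * sxy / (sxx * syy)


def rmse(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Root-mean-square error between paired predicted and observed values."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed))


def willmott_dr(predicted: Sequence[float], observed: Sequence[float], c: float = 2.0) -> float:
    """Willmott's refined index of agreement d_r, bounded to [-1, 1]."""
    o_mean = sum(observed) / len(observed)
    abs_err = sum(abs(p - o) for p, o in zip(predicted, observed))
    abs_dev = c * sum(abs(o - o_mean) for o in observed)
    if abs_err <= abs_dev:
        return 1.0 if abs_dev == 0 else 1.0 - abs_err / abs_dev
    return abs_dev / abs_err - 1.0
```

Unlike $R^2$, both RMSE and $d_r$ penalize a systematic offset between CSM-derived and manual heights. This is why the three statistics are worth reporting together.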

Table 3 shows descriptive statistics (minimum, maximum, mean, and standard deviation) of the plot-wise averaged plant heights for all plots with a point density >100/m². For each date, the same statistics are also shown for the manual measurements of the corresponding date and plots. For both the CSMs and the manual measurements, the heights were averaged per plot. For the CSMs, zonal statistics were computed over the area covered by all plots with the required point density; for the manual measurements, all 10 measurements per plot were used. For the three later dates, the CSM-derived plant heights showed higher maximum values. This was most likely caused by the plastic poles marking the plot areas designated for destructive biomass sampling. These poles rose above the crop surface and were visible in the images used for generating the CSMs. Table 3 Descriptive statistics for all plots with point density >100/m2 in the four selected CSMs of the noon acquisition time and the respective manual measurements.

Figure 7 shows the regression model of the plot-wise data of the “noon” CSMs, in which the strong linear correlation is easily seen. To exclude low-quality CSMs, CSMs with too few plots reaching the minimum point density of 100/m² were excluded from the analysis.

Fig. 7 Regression of mean CSM-derived plant height and manually measured plant height, noon acquisition time.

Figure 8 shows the mean plant height of the different cultivars over time. Cultivar 4 grew faster in the early days after seeding, which can also be seen in the CSM for May 8 in Fig. 5. Cultivar 12, which showed the fastest growth in the CSMs, was unfortunately not among the cultivars selected for manual plant height measurements and was therefore not included in this analysis. Later in the growing season, cultivars 4 and 17 were taller, whereas cultivars 3 and 13 were shorter. The plant heights shown in this diagram average the plot-wise mean heights over all plots of the respective cultivar and over all three CSMs per day. Generally, plant height increased until day 92 after seeding.

4. Discussion

The high correlation values (cf. Table 2) and the similarity of the mean plant heights (cf. Table 3) between CSM-derived and manually measured plant heights show that the presented system is suitable for monitoring plant height on a field to plot scale. The suitability of achieved point densities for different applications has been discussed in the literature72,73 for LiDAR-derived point clouds, and the general principles also apply to point clouds derived from stereo imagery. The linear regression of the CSM-derived plant heights with the manual plant height measurements showed the expected lower mean CSM plant heights per plot74 compared to the manual measurements in the early growing stages.
The higher CSM plant heights in the late growing stage were not expected, because the CSM-derived measurements integrated plant heights over the whole plot. This includes lower parts of the plants, whereas the manual measurements considered only the maximum height of individual plants per plot. Figure 8 shows that semiautomated plant height monitoring over time was achieved successfully. It also shows that differences in plant height development between cultivars could be observed, e.g., cultivar 1 grew faster earlier in the growing period than the other five cultivars. The slight downward trend at the end of the observation period can be explained by the barley ears bending down as they ripened, which decreased plant height. Small day-to-day variations in plant height could be explained by several factors. First, they may reflect the accuracy of the presented approach, i.e., measurement noise. Second, plant height could be affected by water availability, because plants can straighten up after precipitation events. Third, wind may play a role, because in windy conditions the plants do not reach as high as in windless conditions. Visual inspection of the generated CSMs also shows different heights among cultivars. Cultivar 12 grew faster than the other cultivars in the first three CSMs shown in Fig. 5 but later experienced lodging, which explains the within-plot variations in the June 17 CSM. For verification purposes, we compared our semiautomatically generated plant heights per plot with CSM-derived plant heights from UAV imagery, which have been shown to be reliable.2,3 For this verification, we used the automatically generated CSMs with the highest number of plots with a point density >100/m² within 1 day of the UAV-based image acquisition.
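The CSM selection rule, used both here and for Table 1, picks the CSM with the most plots above 100/m² within one day of a reference date and can be sketched as follows. The `CsmCandidate` record is a hypothetical structure. “Within 1 day” is interpreted as a difference of at most one calendar day, which also covers the day-before/day-of/day-after window described in Sec. 3.2.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class CsmCandidate:
    """Hypothetical record describing one generated CSM."""
    acquired: datetime
    label: str                  # e.g., "morning", "noon", "evening"
    plots_above_threshold: int  # plots with mean point density > 100/m^2


def select_reference_csm(
    candidates: List[CsmCandidate], reference: datetime, window_days: int = 1
) -> Optional[CsmCandidate]:
    """Pick the CSM within +/- window_days calendar days of the reference date
    that has the most plots above the density threshold (first one wins ties)."""
    in_window = [
        c for c in candidates
        if abs((c.acquired.date() - reference.date()).days) <= window_days
    ]
    if not in_window:
        return None
    return max(in_window, key=lambda c: c.plots_above_threshold)
```

Comparing calendar dates rather than exact timestamps keeps all three daily acquisitions of a neighboring day in the window, regardless of the time of day of the reference flight or manual measurement.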
Figure 9 shows the linear regression between the derived mean plant heights per plot. As expected, a high coefficient of determination was reached. Willmott's index of model performance reached 0.94, while the RMSE was 0.13 m. The UAV-derived heights are generally lower than the plant heights derived from the 3-D monitoring system introduced in this study. A likely cause is the oblique image acquisition angle, which can hide smaller plants behind taller plants closer to the camera. In addition, the nadir viewing geometry of the UAV imagery results in more ground points being included in the generated dense point clouds. As the vegetation period continued and the crop canopy closed, fewer ground points were visible, and thus the difference decreased over time. Other studies74,75 investigating oblique TLS measurements also suggest that plant heights should be higher in oblique viewing geometries than in measurements derived from angles closer to nadir.

Fig. 9 Regression of mean CSM-derived plant height and UAV-derived plant heights, UAV images acquired on May 6, May 20, June 6, and June 12.

It is not clear why the automated GCP detection failed for at least one GCP marker in all image pairs. In some cases with sunny weather, the black-and-white GCP markers were overexposed, making it impossible to detect the black-and-white pattern automatically. However, even in images in which the GCP markers were not overexposed, the GCPs farther from the cameras were not detected, although their size in image space was sufficient. One possible explanation is that, over time and depending on the weather, the GCP markers became partially covered with dirt, because they were placed directly on the bare-ground footpaths between the plots.
Figure 10 shows the achieved point densities: the point density decreases from (spatial resolution ) in the areas closest to the cameras, to between 2500 and 500 in the front third of the field (allowing a spatial resolution of ), and to between 2500 and 400 points in the rest of the field (allowing a spatial resolution of 5 cm). The key area of interest lies within this zone. Only in the parts farthest from the cameras, where the point density is at least 100, is 10 cm the best achievable spatial resolution. As expected, the drop-off in achieved point density with increasing camera distance corresponds roughly to the maximum achievable theoretical accuracy described in Sec. 2.2.7. The extent of the field covered by the generated point clouds, and therefore by the generated CSMs, changes between dates (cf. Fig. 5). The areas not covered by all generated point clouds lie at the far end of the field, where the distance to the cameras is greatest and the intersection angle is smallest. The depth map calculation algorithm apparently has difficulty finding matching feature points at larger distances and smaller intersection angles, and this varies from date to date, probably because of different external imaging conditions such as wind and lighting. The key area of interest, i.e., the plots for which the monitoring design was developed (from the front third to the center of the field), is not affected by this problem. To achieve fully automatic monitoring in the future, two approaches need to be evaluated. First, a system in which the camera positions are completely fixed, e.g., by mounting the cameras on a fixed pole without hydraulics, should be examined. In the monitoring station setup presented in this study, where the cameras are mounted on a hydraulic lifting hoist, the camera positions changed slightly due to thermal expansion and contraction of the hydraulic fluid.
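The density and resolution pairs quoted above (at least 100 points for 10 cm, 400 points for 5 cm) are consistent with a simple rule of thumb: at a density of ρ points per square metre, a square cell of edge r holds ρr² points on average, so at least one point per cell requires r ≥ 1/√ρ. The sketch below encodes that rule. Treating the densities as points per m², requiring one point per cell, and the list of candidate resolutions are our assumptions, not necessarily the criterion used for Fig. 10.

```python
import math
from typing import Optional, Sequence


def min_cell_size_m(points_per_m2: float, points_per_cell: float = 1.0) -> float:
    """Smallest square cell edge (m) that holds `points_per_cell` points on average."""
    if points_per_m2 <= 0:
        raise ValueError("point density must be positive")
    return math.sqrt(points_per_cell / points_per_m2)


def finest_grid(points_per_m2: float,
                candidates_m: Sequence[float] = (0.01, 0.02, 0.05, 0.10),
                eps: float = 1e-9) -> Optional[float]:
    """Finest candidate raster resolution (m) that still satisfies the density rule.

    `eps` absorbs floating-point error, e.g. sqrt(1/400) vs. exactly 0.05.
    Returns None if even the coarsest candidate is too fine.
    """
    needed = min_cell_size_m(points_per_m2)
    usable = [c for c in sorted(candidates_m) if c >= needed - eps]
    return usable[0] if usable else None


for density in (100, 400, 500, 2500):
    print(density, "points/m2 ->", finest_grid(density), "m")
```

Requiring several points per cell (`points_per_cell > 1`) gives a more conservative, noise-robust choice at the cost of coarser rasters.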
If the camera positions were truly fixed, full automation could be achieved by manually locating the GCP markers in one pair of acquired images just after the monitoring station has been set up and then reusing these manually determined pixel coordinates for all subsequently acquired image pairs. This works because the GCP positions in the images stay fixed as long as both the cameras and the GCPs are stationary. Second, a custom algorithm for detecting the GCPs could be implemented that exploits the known number of GCPs and the known relative positions of the GCPs and the cameras (six GCPs per image, three GCPs in the top half of the image, two GCPs in each third of the image). This custom algorithm would, however, need to be adjusted individually each time a new field is observed. Another possible improvement to prevent overexposure in sunny conditions would be to use black-and-gray instead of black-and-white GCP markers. To prevent the GCP markers from becoming covered with dirt, they could be mounted slightly elevated. This would also allow them to be angled toward the cameras, making it possible to skip the distortion step mentioned earlier. Another point worth discussing is the validity and direct comparability of mean plant heights derived from manual measurements with the CSM-derived plant heights. For the CSM-derived mean plot heights, the points in the dense point cloud do not represent the height of individual plants but the top of the canopy. In contrast, with the manual measurement approach used here, the heights of 10 individual plants per plot are measured. Alternative approaches to manual plant height measurement that are more comparable with CSM-derived heights should be investigated in the future.
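The second approach could start from a layout check such as the sketch below, which searches for the highest-scoring set of six candidate detections that satisfies the layout quoted above. It assumes, hypothetically, that some marker detector already yields candidate pixel positions with a confidence score, that image y coordinates increase downward, and that "each third" means the left, center, and right vertical strips of the image. All names are illustrative.

```python
from itertools import combinations
from typing import List, Optional, Sequence, Tuple

# (x, y, score): pixel column, pixel row (y grows downward), detector confidence
Candidate = Tuple[float, float, float]


def satisfies_layout(points: Sequence[Candidate], width: float, height: float) -> bool:
    """Expected layout: 6 GCPs, 3 in the top half, 2 in each vertical third."""
    if len(points) != 6:
        return False
    if sum(1 for _, y, _ in points if y < height / 2) != 3:
        return False
    per_third = [0, 0, 0]
    for x, _, _ in points:
        per_third[min(int(3 * x / width), 2)] += 1  # clamp x == width into last third
    return per_third == [2, 2, 2]


def select_gcps(candidates: Sequence[Candidate], width: float,
                height: float) -> Optional[List[Candidate]]:
    """Return the highest-scoring 6-subset that satisfies the layout, or None."""
    best: Optional[List[Candidate]] = None
    best_score = float("-inf")
    for subset in combinations(candidates, 6):
        if not satisfies_layout(subset, width, height):
            continue
        score = sum(s for _, _, s in subset)
        if score > best_score:
            best, best_score = list(subset), score
    return best


detections = [(50, 50, 0.9), (60, 150, 0.8), (150, 50, 0.9),
              (160, 150, 0.7), (250, 50, 0.8), (260, 150, 0.9),
              (280, 10, 0.3)]  # last entry: a spurious low-confidence hit
print(select_gcps(detections, width=300, height=200))
```

The exhaustive search grows quickly with the number of candidates, so a practical version would first keep only the best-scoring detections (for 20 candidates there are 38,760 six-element subsets).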
5. Conclusions and Outlook

The overall aim of this study was to establish a low-cost semiautomated system for crop surface monitoring using oblique red, green, blue (RGB) imagery from consumer-grade smart cameras. 3-D plant height information was successfully derived from these images using SfM and MVS software. The system could be improved by making its power supply more reliable, which would allow continuous, uninterrupted monitoring. Compared to more cost-intensive systems for 3-D plant monitoring, such as terrestrial or airborne laser scanning, this study presents a low-cost alternative. This low cost was mainly achieved by using consumer-grade cameras. While some of the software packages used in this study (Agisoft PhotoScan and ArcGIS) are commercial, the GIS processing performed in ArcGIS can also be performed with a free GIS such as QGIS, as mentioned in Sec. 2.3. Overall, the cost of this system is significantly lower than that of a permanent TLS installation, because the cheapest TLS systems able to cover a similar area cost tens of thousands of US dollars. The cameras used in this study cost just hundreds of dollars, with total costs dollars. In summary, this study presents a semiautomated system for 3-D plant monitoring that is suitable for day-to-day monitoring and has potential use in phenotyping. Future work should focus on several areas: (i) The question of whether this automated system can be adapted to UAV-based image processing, which would allow a larger area to be monitored by including additional images acquired with a single UAV-borne camera. To examine how camera height and the distance between the cameras affect the quality of the results, future studies should vary these parameters.
(ii) The feasibility of this system for biomass prediction based on the CSM-derived plant heights, analogous to the work of Bendig et al.5 and Tilly et al.4. (iii) The option of integrating other consumer-grade cameras with superior optics and larger sensors into this system, such as the Sony ILCE-QX1 mirrorless camera, which features an APS-C-size sensor and interchangeable optics. (iv) The possibility of achieving a more even distribution of point density by using multiple stations positioned around one field and merging the point clouds produced by these stations. By doing so, the whole field could be covered with a spatial resolution of , thus achieving uniform data quality, which would be more suitable for phenotyping applications.

Acknowledgments

We acknowledge our colleagues Helge Aasen, Andreas Bolten, and Nora Tilly and the student assistants Simon Bennertz, Jonas Brands, Janis Broscheit, Silas Eichfuss, Sven Ortloff, and Max Willkomm for their great work in collecting data in the field, and the staff of Campus Klein-Altendorf (University of Bonn) for managing the field experiment and for providing the hydraulic lifting hoist and the mounting platform for the cameras. The field experiment was set up within the CROP.SENSe.net project in the context of the Ziel 2-Programm NRW 2007–2013 “Regionale Wettbewerbsfähigkeit und Beschäftigung” (Regional Competitiveness and Employment) by the Ministry for Innovation, Science and Research (MIWF) of the state of North Rhine-Westphalia (NRW) and the European Union Funds for Regional Development (EFRE) (005-1103-0018).

References

D. J. Mulla, “Twenty five years of remote sensing in precision agriculture: key advances and remaining knowledge gaps,” Biosyst. Eng., 114, 358–371 (2013). http://dx.doi.org/10.1016/j.biosystemseng.2012.08.009

J. Bendig, A. Bolten and G. Bareth, “UAV-based imaging for multi-temporal, very high resolution crop surface models to monitor crop growth variability,” Photogramm. Fernerkundung Geoinf., 2013, 551–562 (2013). http://dx.doi.org/10.1127/1432-8364/2013/0200

J. Geipel, J. Link and W. Claupein, “Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system,” Remote Sens., 6 (11), 10335–10355 (2014). http://dx.doi.org/10.3390/rs61110335

N. Tilly et al., “Multitemporal crop surface models: accurate plant height measurement and biomass estimation with terrestrial laser scanning in paddy rice,” J. Appl. Remote Sens., 8, 083671 (2014). http://dx.doi.org/10.1117/1.JRS.8.083671

J. Bendig et al., “Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging,” Remote Sens., 6, 10395–10412 (2014). http://dx.doi.org/10.3390/rs61110395

N. Tilly, H. Aasen and G. Bareth, “Fusion of plant height and vegetation indices for the estimation of Barley biomass,” Remote Sens., 7, 11449–11480 (2015). http://dx.doi.org/10.3390/rs70911449

J. Clevers and A. Gitelson, “Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3,” Int. J. Appl. Earth Obs. Geoinf., 23, 344–351 (2013). http://dx.doi.org/10.1016/j.jag.2012.10.008

K. Yu et al., “Estimate leaf chlorophyll of rice using reflectance indices and partial least squares,” Photogramm. Fernerkundung Geoinf., 2015, 45–54 (2015). http://dx.doi.org/10.1127/pfg/2015/0253

O. Nevalainen et al., “Fast and nondestructive method for leaf level chlorophyll estimation using hyperspectral LiDAR,” Agric. For. Meteorol., 198–199, 250–258 (2014). http://dx.doi.org/10.1016/j.agrformet.2014.08.018

S. Dadshani et al., “Non-invasive assessment of leaf water status using a dual-mode microwave resonator,” Plant Methods, 11 (1), 8 (2015). http://dx.doi.org/10.1186/s13007-015-0054-x

R. Gente, A. Rehn and M. Koch, “Contactless water status measurements on plants at 35 GHz,” J. Infrared Millimeter and Terahertz Waves, 36 (3), 312–317 (2015). http://dx.doi.org/10.1007/s10762-014-0127-3

M. Marshall and P. Thenkabail, “Advantage of hyperspectral EO-1 Hyperion over multispectral IKONOS, GeoEye-1, WorldView-2, Landsat ETM+, and MODIS vegetation indices in crop biomass estimation,” ISPRS J. Photogramm. Remote Sens., 108, 205–218 (2015). http://dx.doi.org/10.1016/j.isprsjprs.2015.08.001

J. Bendig et al., “Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley,” Int. J. Appl. Earth Obs. Geoinf., 39, 79–87 (2015). http://dx.doi.org/10.1016/j.jag.2015.02.012

H. Aasen et al., “Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: from camera calibration to quality assurance,” ISPRS J. Photogramm. Remote Sens., 108, 245–259 (2015). http://dx.doi.org/10.1016/j.isprsjprs.2015.08.002

D. Hoffmeister et al., “High resolution crop surface models (CSM) and crop volume models (CVM) on field level by terrestrial laser scanning,” Proc. SPIE, 7840, 78400E (2010). http://dx.doi.org/10.1117/12.872315

N. Tilly et al., “Transferability of models for estimating paddy rice biomass from spatial plant height data,” Agriculture, 5, 538–560 (2015). http://dx.doi.org/10.3390/agriculture5030538

N. Tilly et al., “Precise plant height monitoring and biomass estimation with terrestrial laser scanning in paddy rice,” ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci., II-5/W2, 295–300 (2013). http://dx.doi.org/10.5194/isprsannals-II-5-W2-295-2013

H. Aasen et al., “Automated hyperspectral vegetation index retrieval from multiple correlation matrices with hypercor,” Photogramm. Eng. Remote Sens., 80, 785–795 (2014). http://dx.doi.org/10.14358/PERS.80.8.785

J. Hyyppä et al., “Review of methods of small-footprint airborne laser scanning for extracting forest inventory data in boreal forests,” Int. J. Remote Sens., 29, 1339–1366 (2008). http://dx.doi.org/10.1080/01431160701736489

I. Moorthy et al., “Field characterization of olive (Olea europaea L.) tree crown architecture using terrestrial laser scanning data,” Agric. For. Meteorol., 151, 204–214 (2011). http://dx.doi.org/10.1016/j.agrformet.2010.10.005

F. Hosoi and K. Omasa, “Voxel-based 3-D modeling of individual trees for estimating leaf area density using high-resolution portable scanning lidar,” IEEE Trans. Geosci. Remote Sens., 44, 3610–3618 (2006). http://dx.doi.org/10.1109/TGRS.2006.881743

A. Escolà et al., “A mobile terrestrial laser scanner for tree crops: point cloud generation, information extraction and validation in an intensive olive orchard,” in Precision Agriculture ’15, 337–344, Wageningen Academic Publishers, The Netherlands (2015).

W. Li et al., “Estimation of leaf biochemical content using a novel hyperspectral full-waveform LiDAR system,” Remote Sens. Lett., 5, 693–702 (2014). http://dx.doi.org/10.1080/2150704X.2014.960608

S. Jay et al., “In-field crop row phenotyping from 3D modeling performed using structure from motion,” Comput. Electron. Agric., 110, 70–77 (2015). http://dx.doi.org/10.1016/j.compag.2014.09.021

T. Motohka et al., “Applicability of green-red vegetation index for remote sensing of vegetation phenology,” Remote Sens., 2 (10), 2369–2387 (2010). http://dx.doi.org/10.3390/rs2102369

T. Sritarapipat, P. Rakwatin and T. Kasetkasem, “Automatic rice crop height measurement using a field server and digital image processing,” Sensors, 14 (1), 900–926 (2014). http://dx.doi.org/10.3390/s140100900

T. Hague, N. D. Tillett and H. Wheeler, “Automated crop and weed monitoring in widely spaced cereals,” Precision Agric., 7, 21–32 (2006). http://dx.doi.org/10.1007/s11119-005-6787-1

T. Inoue et al., “Utilization of ground-based digital photography for the evaluation of seasonal changes in the aboveground green biomass and foliage phenology in a grassland ecosystem,” Ecol. Inf., 25, 1–9 (2015). http://dx.doi.org/10.1016/j.ecoinf.2014.09.013

M. L. Gnyp et al., “Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages,” Field Crops Res., 155, 42–55 (2014). http://dx.doi.org/10.1016/j.fcr.2013.09.023

M. L. Gnyp et al., “Development and implementation of a multiscale biomass model using hyperspectral vegetation indices for winter wheat in the North China plain,” Int. J. Appl. Earth Obs. Geoinf., 33 (1), 232–242 (2014). http://dx.doi.org/10.1016/j.jag.2014.05.006

P. S. Thenkabail et al., “Selection of hyperspectral narrowbands (HNBs) and composition of hyperspectral two-band vegetation indices (HVIs) for biophysical characterization and discrimination of crop types using field reflectance and Hyperion/EO-1 data,” IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens., 6, 427–439 (2013). http://dx.doi.org/10.1109/JSTARS.2013.2252601

Q. Cao et al., “Non-destructive estimation of rice plant nitrogen status with Crop Circle multispectral active canopy sensor,” Field Crops Res., 154, 133–144 (2013). http://dx.doi.org/10.1016/j.fcr.2013.08.005

K. Yu et al., “Remotely detecting canopy nitrogen concentration and uptake of paddy rice in the Northeast China plain,” ISPRS J. Photogramm. Remote Sens., 78, 102–115 (2013). http://dx.doi.org/10.1016/j.isprsjprs.2013.01.008

F. Li et al., “Evaluating hyperspectral vegetation indices for estimating nitrogen concentration of winter wheat at different growth stages,” Precision Agric., 11, 335–357 (2010). http://dx.doi.org/10.1007/s11119-010-9165-6

F. Li et al., “Estimating N status of winter wheat using a handheld spectrometer in the North China plain,” Field Crops Res., 106, 77–85 (2008). http://dx.doi.org/10.1016/j.fcr.2007.11.001

A. K. Tilling et al., “Remote sensing of nitrogen and water stress in wheat,” Field Crops Res., 104, 77–85 (2007). http://dx.doi.org/10.1016/j.fcr.2007.03.023

M. Rossini et al., “Red and far red Sun-induced chlorophyll fluorescence as a measure of plant photosynthesis,” Geophys. Res. Lett., 42, 1632–1639 (2015). http://dx.doi.org/10.1002/2014GL062943

G. Bareth et al., “Low-weight and UAV-based hyperspectral full-frame cameras for monitoring crops: spectral comparison with portable spectroradiometer measurements,” Photogramm. Fernerkundung Geoinf., 2015, 69–79 (2015). http://dx.doi.org/10.1127/pfg/2015/0256

J. Suomalainen et al., “A lightweight hyperspectral mapping system and photogrammetric processing chain for unmanned aerial vehicles,” Remote Sens., 6 (11), 11013–11030 (2014). http://dx.doi.org/10.3390/rs61111013

J. Bellvert et al., “Mapping crop water stress index in a ‘Pinot-noir’ vineyard: comparing ground measurements with thermal remote sensing imagery from an unmanned aerial vehicle,” Precision Agric., 15, 361–376 (2013). http://dx.doi.org/10.1007/s11119-013-9334-5

J. W. White et al., “Field-based phenomics for plant genetics research,” Field Crops Res., 133, 101–112 (2012). http://dx.doi.org/10.1016/j.fcr.2012.04.003

J. L. Araus and J. E. Cairns, “Field high-throughput phenotyping: the new crop breeding frontier,” Trends Plant Sci., 19, 52–61 (2014). http://dx.doi.org/10.1016/j.tplants.2013.09.008

D. Deery et al., “Proximal remote sensing buggies and potential applications for field-based phenotyping,” Agronomy, 4, 349–379 (2014). http://dx.doi.org/10.3390/agronomy4030349

P. Andrade-Sanchez et al., “Development and evaluation of a field-based high-throughput phenotyping platform,” Funct. Plant Biol., 41, 68–79 (2014). http://dx.doi.org/10.1071/FP13126

ETH Zürich, “ETH Zürich field phenotyping platform (FIP),” (2015). http://www.kp.ethz.ch/infrastructure/FIP (accessed November 2015).

S. Brocks and G. Bareth, “Evaluating the potential of consumer-grade smart cameras for low-cost stereo-photogrammetric crop-surface monitoring,” ISPRS Int. Archives Photogramm. Remote Sens. Spat. Inf. Sci., XL-7, 43–49 (2014). http://dx.doi.org/10.5194/isprsarchives-XL-7-43-2014

H. Lilienthal, Entwicklung eines bodengestützten Fernerkundungssystems für die Landwirtschaft [Development of a ground-based remote sensing system for agriculture] (Landbauforschung Völkenrode: Sonderheft 254), Technische Universität Braunschweig, Braunschweig (2003).

S. Ullman, “The interpretation of structure from motion,” Proc. R. Soc. London, Ser. B, 203 (1153), 405–426 (1979).

S. Seitz et al., “A comparison and evaluation of multi-view stereo reconstruction algorithms,” in 2006 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition (CVPR’06), 519–528 (2006). http://dx.doi.org/10.1109/CVPR.2006.19

M. Westoby et al., “‘Structure-from-motion’ photogrammetry: a low-cost, effective tool for geoscience applications,” Geomorphology, 179, 300–314 (2012). http://dx.doi.org/10.1016/j.geomorph.2012.08.021

M. James and S. Robson, “Sequential digital elevation models of active lava flows from ground-based stereo time-lapse imagery,” ISPRS J. Photogramm. Remote Sens., 97, 160–170 (2014). http://dx.doi.org/10.1016/j.isprsjprs.2014.08.011

M. R. James and N. Varley, “Identification of structural controls in an active lava dome with high resolution DEMs: Volcán de Colima, Mexico,” Geophys. Res. Lett., 39 (2012). http://dx.doi.org/10.1029/2012GL054245

L. Javernick, J. Brasington and B. Caruso, “Modeling the topography of shallow braided rivers using structure-from-motion photogrammetry,” Geomorphology, 213, 166–182 (2014). http://dx.doi.org/10.1016/j.geomorph.2014.01.006

G. Verhoeven, “Taking computer vision aloft – archaeological three-dimensional reconstructions from aerial photographs with PhotoScan,” Archaeological Prospection, 18, 67–73 (2011). http://dx.doi.org/10.1002/arp.399

D. Semyonov, “Algorithms used in PhotoScan,” (2011). http://www.agisoft.com/forum/index.php?topic=89.msg323#msg323 (accessed January 2015).

D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” Int. J. Comp. Vision, 60, 91–110 (2004). http://dx.doi.org/10.1023/B:VISI.0000029664.99615.94

B. Triggs et al., “Bundle adjustment – a modern synthesis,” Lect. Notes Comput. Sci., 1883, 298–372 (2000). http://dx.doi.org/10.1007/3-540-44480-7

M. Herker, “Crop.SENSe.net,” (2015). https://www.cropsense.uni-bonn.de/ (accessed July 2015).

Samsung, “Specs: Point-and-Shoot EK-GC100,” (2015). http://www.samsung.com/us/photography/digital-cameras/EK-GC100ZWAATT-specs (accessed January 2015).

J. Chandler, J. Fryer and A. Jack, “Metric capabilities of low-cost digital cameras for close range surface measurement,” Photogramm. Record, 20, 12–26 (2005). http://dx.doi.org/10.1111/j.1477-9730.2005.00302.x

Topcon Positioning Systems, “HiPer Pro operator’s manual,” (2015). http://www.top-survey.com/top-survey/downloads/HiPerPro_om.pdf (accessed January 2015).

T. Luhmann et al., Close-Range Photogrammetry and 3D Imaging, 2nd ed., Walter de Gruyter, Berlin (2014).

GeoGebra Institute, “GeoGebra,” (2015). http://www.geogebra.org/ (accessed January 2015).

Trimble, “Datasheet: Trimble M3,” (2015). http://trl.trimble.com/dscgi/ds.py/Get/File-262358/022543-155J_TrimbleM3_DS_0414_LR.pdf (accessed July 2015).

Inkscape Project, “About Inkscape,” (2015). http://inkscape.org/en/about (accessed July 2015).

Agisoft, “PhotoScan Python reference,” (2014). http://www.agisoft.com/pdf/photoscan_python_api_1_1_0.pdf (accessed December 2014).

C. E. Shannon, “A mathematical theory of communication,” Bell Syst. Tech. J., 27, 379–423 (1948). http://dx.doi.org/10.1145/584091.584093

F. Lundh and A. Clark, “About Pillow,” (2016). https://pillow.readthedocs.io/en/3.3.x/about.html (accessed July 2016).

P. A. Zandbergen, Python Scripting for ArcGIS, Esri Press, Redlands (2013).

C. J. Willmott, S. M. Robeson and K. Matsuura, “A refined index of model performance,” Int. J. Climatol., 32 (13), 2088–2094 (2012). http://dx.doi.org/10.1002/joc.2419

M. Hämmerle and B. Höfle, “Effects of reduced terrestrial LiDAR point density on high-resolution grain crop surface models in precision agriculture,” Sensors, 14 (12), 24212–24230 (2014). http://dx.doi.org/10.3390/s141224212

S. Luo et al., “Effects of LiDAR point density, sampling size and height threshold on estimation accuracy of crop biophysical parameters,” Opt. Express, 24 (11), 11578 (2016). http://dx.doi.org/10.1364/OE.24.011578

G. Bareth et al., “A comparison of UAV- and TLS-derived plant height for crop monitoring: using polygon grids for the analysis of crop surface models (CSMs),” Photogramm. Fernerkundung Geoinf., 2016 (2), 85–94 (2016). http://dx.doi.org/10.1127/pfg/2016/0289

D. Ehlert and M. Heisig, “Sources of angle-dependent errors in terrestrial laser scanner-based crop stand measurement,” Comput. Electron. Agric., 93, 10–16 (2013). http://dx.doi.org/10.1016/j.compag.2013.01.002

Biography

Sebastian Brocks studied geography at the University of Cologne and received his diploma degree in 2012. Since then, he has worked as a PhD student in the GIS and Remote Sensing working group at the University of Cologne's Institute of Geography. His research focuses on low-cost 3-D vegetation monitoring.

Juliane Bendig received her diploma degree in geography and her PhD in physical geography from the University of Cologne, Germany, in 2010 and 2015, respectively. She conducts research in remote sensing with unmanned aerial vehicles (UAVs) combined with different sensors, concentrating on agricultural applications. Currently, she explores the retrieval of sun-induced chlorophyll fluorescence (SIF) with UAVs at the University of Tasmania, Australia.
