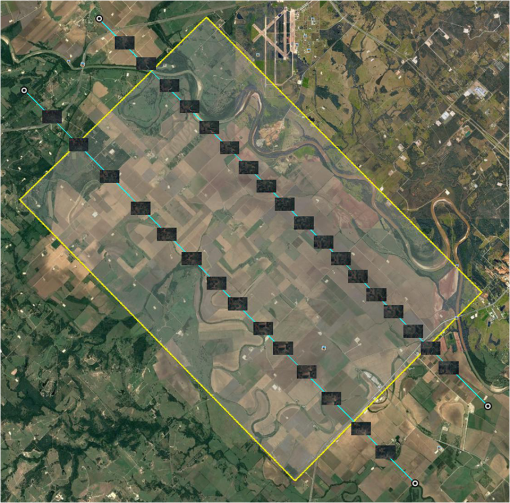

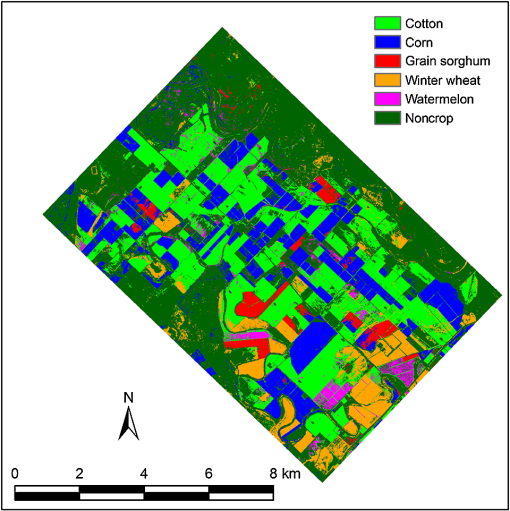

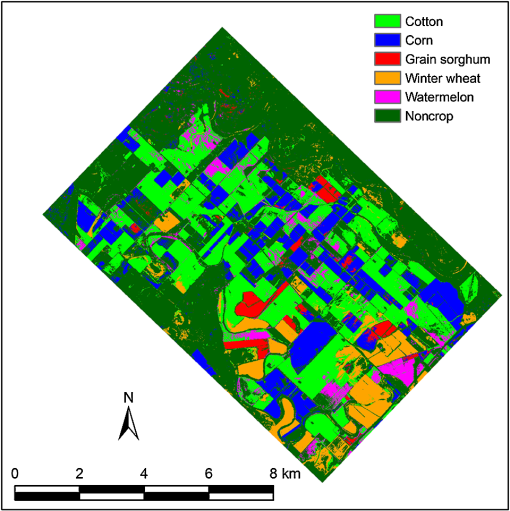

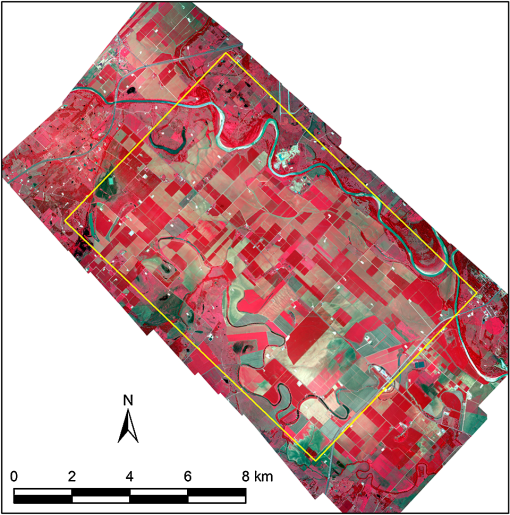

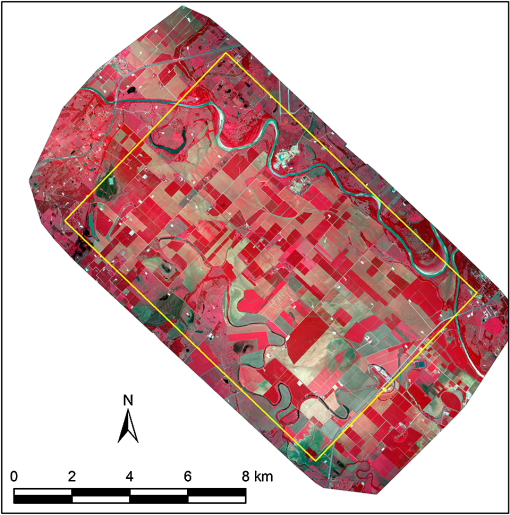

1. Introduction

The boll weevil (Anthonomus grandis grandis Boheman) is now eradicated from all cotton-producing states in the U.S. except for the Rio Grande Valley of Texas.1 However, the cotton (Gossypium hirsutum L.) growing areas, especially those adjacent to the lower Rio Grande Valley, will remain susceptible to reinfestation from boll weevils that migrate or are transported on cotton harvesting equipment. The eradication program functions by monitoring all cotton fields using pheromone traps to detect incipient weevil populations and by applying insecticides when and where justified by weevil captures or as preventive measures.2 Although cotton producers are required to report the location of planted cotton fields to the Farm Service Agency, this information becomes available to the boll weevil eradication program only belatedly. Therefore, early identification of fields planted in cotton is critical for eradication program managers to effectively monitor boll weevil populations and treat the respective fields in a timely manner. Another important aspect to ensure the success of the eradication program is the mandatory and timely elimination of cotton plants following harvest and the subsequent creation of a host-free period. By law, cotton plants must be destroyed after harvest by a certain date to prevent regrowth of cotton fruit on which weevil populations can survive and reproduce.3 Volunteer cotton plants can also germinate from seed that remains in a field after harvest or that is inadvertently scattered by floods or by transport of harvested cotton to gins. Information on the location and area of planted cotton fields will facilitate the quick detection of potential areas for volunteer and regrowth cotton plants. 
Multispectral imagery from satellite sensors, such as Landsat and SPOT, has been used for crop identification and area estimation for decades.4–7 As high-resolution satellite sensors, such as IKONOS, QuickBird, and SPOT 5, have become available more recently, imagery from these sensors has also been evaluated for crop identification.8–12 Recent advances in imaging technologies have made consumer-grade digital cameras an attractive option for remote sensing applications due to their low cost, small size, compact data storage, and ease of use. Consequently, consumer-grade digital color cameras have been increasingly used by researchers for agricultural applications.13–16 Unlike traditional sophisticated airborne imaging systems that need to be mounted on designated remote sensing aircraft, imaging systems based on consumer-grade cameras can be easily mounted on any small aircraft with no or minimal modification.17 Furthermore, agricultural aircraft provide a readily available and versatile platform for airborne remote sensing. Single-camera and two-camera imaging systems have been attached to agricultural aircraft to take aerial images for monitoring crop growing conditions and detecting crop pests.15–18 Since aerial imagery has a relatively small ground coverage compared with satellite imagery, a large number of images taken along multiple flight lines with specified minimum overlaps are needed to cover a large geographic region. Image mosaicking techniques are then used to mosaic the images. Most image processing software, such as Erdas Imagine (Intergraph Corporation, Madison, Alabama), requires that the input images be georeferenced to a coordinate system with known projection information before being mosaicked based on their geographic position. Another approach for image mosaicking is based on the content of the images using photogrammetry software, such as Pix4DMapper (Pix4D SA, Lausanne, Switzerland). 
Pix4DMapper can automatically convert large numbers of aerial images into georeferenced two-dimensional orthomosaics and three-dimensional surface models using the latest innovations in computer vision and photogrammetry. Various pixel-based classification methods have been used to classify remote sensing imagery. These include traditional unsupervised and supervised classification methods, such as iterative self-organizing data analysis and maximum likelihood (ML), as well as more advanced techniques, such as artificial neural networks, support vector machines, and decision trees.19–22 These classifiers and techniques provide varying levels of success for crop identification depending on the complexity of crop growing environments. However, with the pixel-based classification approach, pixels are classified individually based on their spectral characteristics regardless of their spatial aggregation. Another approach for crop identification is object-based classification, which typically relies on segmentation algorithms to define geographical objects that can be classified. Object-based classification can improve classification accuracy.23,24 Therefore, object-based techniques have been increasingly used for image classification.25–27 However, object-based classification generally requires additional software and advanced image processing skills.16,28 For parcel- or field-based classification, field boundaries need to be available or manually digitized.12,24 This can add more processing time and cost for the production of classification maps. The overall goal of this study is to develop practical methodologies for early identification of cotton fields using airborne multispectral imagery. To achieve this goal, accurate and easy-to-use image mosaicking and classification techniques needed to be identified for efficient and quick implementation. 
As discussed above, satellite imagery has been widely used for crop identification, but limited work has been reported on early identification of cotton fields, especially using aerial imagery. Therefore, the specific objectives of this study are to: (1) evaluate position- and content-based image mosaicking techniques for creating mosaics from overlapped aerial multispectral imagery; (2) compare five commonly used supervised classification methods, including ML, minimum distance (MD), Mahalanobis distance (MAHD), spectral angle mapper (SAM), and spectral correlation mapper (SCM), for crop identification from the mosaics; and (3) identify practical image mosaicking techniques and classification methods that can be easily implemented for identification of cotton fields from aerial imagery before cotton plants begin to flower.

2. Materials and Methods

2.1. Study Site

A cropping area with the center coordinates (30°34′11″N, 96°29′4″W) along the Brazos River near Snook, Burleson County, Texas, was selected for this study (Fig. 1). The soils in the area are very deep and very slowly permeable with slopes ranging from 0% to 3%. The surface layer and subsoil are reddish brown clay underlain by dark gray silty clay loam. The underlying material is mainly composed of clayey and loamy alluvial sediments. The clayey surface layer may delay cultivation after periods of prolonged rainfall. Cotton, corn, and grain sorghum are the main crops. Minor crops, such as winter wheat, soybeans, watermelon, and alfalfa, are also cultivated. Corn and grain sorghum are typically planted in February to March and harvested in July to August, while cotton is planted in April to May and harvested in August to October. In the 2014 growing season, cotton and corn were the two main crops, while grain sorghum, winter wheat, and watermelons were also grown in the study area. 
Other noncrop cover types included mixed herbaceous species (pasture), lush grass (hay), mixed woody species, water bodies, and bare soil/impervious surfaces.

2.2. Image Acquisition

A two-camera imaging system described by Yang et al.14 was used to acquire images from the study area. The system consisted of two Canon EOS 5D Mark II digital cameras with a array (Canon USA Inc., Lake Success, New York). One camera was used to capture red-green-blue (RGB) images, while the other was modified to capture near-infrared (NIR) images by replacing the NIR blocking filter fitted in front of the sensor with a 720-nm longpass filter. The modified camera also had three channels, but only NIR radiation was recorded. As the red channel had the best sensitivity, the image recorded in the red channel was used as the NIR image. The RGB camera was also equipped with a global positioning system (GPS) receiver to geotag images. A remote control was used to trigger both cameras simultaneously. Images from each camera were stored as 16-bit RAW (CR2) and JPEG files on a CompactFlash card. A total of 32 pairs of RGB and NIR images were captured at 3120 m above ground level along two flight lines spaced 3200 m apart. Each image covered a ground area of with a ground pixel size of 1.0 m. A Cessna 206 single-engine aircraft was used to acquire imagery under sunny conditions on June 10, 2014. The side overlap between the flight lines was 43%, and the forward overlap between the successive images was at least 50%. Since the GPS information was included in the metadata of the RAW and JPEG images, Picasa (Google Inc., Mountain View, California) was used to create a KMZ file from the 32 JPEG images, so that the geotagged images (thumbnails) could be viewed on Google Earth (Google Inc., Mountain View, California) (Fig. 1). 
At the time of the image acquisition, cotton plants were predominantly at the pinhead to the third-grown square stage with an average height of 20 to 54 cm and an average width of 22 to 55 cm in the sampled fields. Corn had tasseled and grain sorghum had started to head, and the canopy cover of these two crops was closed at the time of imaging.

2.3. Image Mosaicking

Both traditional position-based and more advanced content-based approaches were used to mosaic the images in this study. Erdas Imagine was selected for the position-based approach, while Pix4DMapper Pro was used for the content-based approach. Before the two mosaicking approaches were applied, the 32 pairs of RAW images were first corrected for vignetting and then converted to 16-bit TIFF using Digital Photo Professional software (Canon USA Inc., Lake Success, New York). The position-based approach started with aligning each pair of RGB and NIR images as a four-band image based on nine ground control points (GCPs) evenly distributed across the pair of images using a second-order polynomial transformation model. The root-mean-squared (RMS) errors for the 32 pairs of images ranged from 0.2 to 0.7 pixels with a mean of 0.4 pixels. Then each aligned image was georeferenced to the World Geodetic System 1984 (WGS84) datum and the Universal Transverse Mercator (UTM) Zone 14N coordinate system. Similarly, nine evenly distributed GCPs were identified on the aligned image. As a large number of GCPs for all the images were needed, the UTM coordinates for the GCPs were directly extracted from the October 3, 2014, Google Earth imagery. The RMS errors for the 32 georeferenced images ranged from 3.3 to 9.6 m with a mean of 6.6 m based on second-order polynomial transformation. All the georeferenced images were resampled to 1-m pixel resolution with the nearest-neighbor resampling technique. 
Four mosaicked images were created using four different combinations of color correction methods and overlay functions, including (1) no color correction and a simple overlay, (2) no color correction and the feather function, (3) color balancing and the feather function, and (4) histogram matching and the feather function. Color balancing attempts to adjust each image’s brightness based on the brightness values in the areas it overlaps with in other images. Histogram matching converts the histogram of one band of an image to resemble another histogram to match the overall color and shading among images. A simple overlay stacks the images in the order they were acquired, so the last image is on the top, whereas the feather overlay function employs a linear interpolation of the values for all the pixels in the overlap area. To examine the effect of the number of images on mosaicked images, a subset of 10 images with a minimum of 20% overlap was selected for mosaicking using the four combinations of color correction methods and overlay functions. In addition to the four mosaicked images from all 32 images, a total of eight mosaicked images were created using the position-based approach. The content-based approach did not require the input images to be georeferenced, but the geographic position for the center of each geotagged RGB image allowed the orthomosaics to be georeferenced in the process. Since the NIR images were not geotagged, the geographic information in the RGB images was first saved in a file and then transferred to the NIR images after the images were uploaded into Pix4DMapper. To increase position accuracy, 10 GCPs in the imaging area collected with a 20-cm GPS Pathfinder Pro XRS receiver (Trimble Navigation Limited, Sunnyvale, California) were added. Two orthomosaics, one from the RGB and one from the NIR images, were created with a pixel size of 1.04 m and an RMS error of 0.59 m. In addition, a digital surface model was also created. 
Although the two orthomosaics were not stacked together by Pix4DMapper, they were already aligned and georeferenced. Erdas Imagine was used to stack the two mosaics into a four-band image, which was then resampled to 1 m. The four possible three-band composites, including the color-infrared (CIR) or NIR–red-green, NIR–red-blue, NIR–green-blue, and RGB composites, were also created for comparison.

2.4. Image Classification

Crop classes included cotton, corn, grain sorghum, winter wheat, and watermelon, while noncrop was grouped into five classes: mixed herbaceous species, lush grass, mixed woody species, water bodies, and bare soil/impervious surfaces. Because of the variations within each of the 10 major classes, 2 to 6 ground-verified areas, or areas of interest, within each major class were selected and digitized on the content-based four-band image as the training areas to represent respective subclasses for each major class. The numbers of digitized training pixels ranged from 2641 to 592,953 among the 40 digitized areas or subclasses. The total number of the sampled pixels was 4,338,159, accounting for about 4.5% of the study area. Signature files were created for the eight position-based mosaics and the five content-based mosaics using the same training areas. Five supervised classifiers built in Erdas Imagine,29 including ML, MD, MAHD, SAM, and SCM, were applied to the eight position-based mosaics and the five content-based mosaics. Thus, a total of 40 classification maps were generated for the position-based mosaics and 25 classification maps for the content-based mosaics. The 40 subclasses in each of the 65 classification maps were then merged into the five crop classes and one noncrop class. Because of within-field variability and spectral similarity between the classes, multiple classes coexisted within the same field on all classification maps even though only one single crop was grown in the field. 
To remove some of the small inclusions of other classes within the dominant class, AGGIE-GIS aggregation in Erdas Imagine was applied to all classification maps. Each classification map was divided into windows that produced a single pixel in the output image. The majority value of the window was assigned as the output value of the resulting pixel. After the pixel aggregation, all the classification maps had a pixel resolution of 10 m, and some of the small inclusions were removed.

2.5. Accuracy Assessment

For accuracy assessment of the classification maps, 1100 points were generated and assigned to the five crop classes and the noncrop class in a stratified random pattern. If a point fell inside a crop field, the classified crop type was compared with the actual crop type that was ground verified around the imaging date. If a point fell outside a crop field, its classified class was compared to the actual noncrop class. Based on the classified classes and the actual classes at these points, an error matrix for each classification map was generated. Classification accuracy statistics, including overall accuracy, producer’s accuracy, user’s accuracy, and kappa coefficients, were calculated based on the error matrices.30

3. Results and Discussion

Figures 2 and 3 present the mosaicked CIR images from the 32 pairs of RGB and NIR images using the position- and content-based approaches, respectively. Visually, the two mosaics look very similar. However, mismatches and color differences around overlapped areas in the position-based mosaic are noticeable when the image is enlarged, while no mismatch or artifact is found in the content-based mosaic. Both mosaics reveal distinct differences among the crops and other cover types in the study area. On the CIR images, crops and other vegetation generally had a reddish color. Since corn and grain sorghum were at their peak growth, they had a dark red tone. 
Cotton plants were relatively small and there was substantial soil exposure, so cotton fields had a pinkish tone. Winter wheat was mature and some fields were even harvested, so it had a gray to dark gray color. There were only a few watermelon fields, and they had a magenta color.

Fig. 2. Mosaicked CIR image from 32 pairs of RGB and NIR images using the position-based approach with no color correction and the feather overlay function in Erdas Imagine.

Fig. 3. Mosaicked CIR image from 32 pairs of RGB and NIR images using the content-based approach in Pix4DMapper.

Figures 4 and 5 show the six-class classification maps generated from the two respective mosaics presented in Figs. 2 and 3 based on the ML classifier. Although the AGGIE-GIS aggregation was applied to the classification maps, many small areas greater than can be seen in the maps. A quick comparison with the CIR images indicates that the classification maps provided good separations among the crops and the noncrop class. Most of the fields on the classification maps had only one dominant class, but all fields contained small inclusions of other classes due to the within-field variability and spectral similarities among some of the classes. Table 1 summarizes the accuracy assessment results for the 40 classification maps generated from the position-based mosaics. Overall accuracy ranged from 64.7% for the 10-image mosaic created using the color balancing and the simple overlay and classified with the SCM classifier to 90.4% for the 32-image mosaic created using no color correction and the feather overlay and classified with the ML classifier. These accuracy values indicated that 65% to 90% of the pixels in the study site were correctly identified in the 40 classification maps using the five classification methods. Overall kappa ranged from 0.508 to 0.860 among the 40 classification maps. Kappa values indicate how well the classification results agree with the reference data. 
A kappa value of 0 corresponds to a total random classification, while a kappa value of 1 represents a perfect agreement between the classification and reference data.

Table 1. Classification accuracy assessment results for mosaicked images created from georeferenced four-band images using different combinations of color correction methods and overlay functions for a cropping area.
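
The accuracy statistics described above can be derived from an error matrix in a few lines. The following is a minimal sketch, not the Erdas Imagine procedure used in the study; the function name `accuracy_stats` and the 3-class example matrix are hypothetical and are not data from this paper. It assumes rows hold the classified classes and columns hold the reference classes.

```python
def accuracy_stats(matrix):
    """Return (overall accuracy, kappa, producer's accuracies, user's accuracies).

    matrix[i][j] is the number of assessment points classified as class i
    (rows) whose reference class is j (columns).
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(k))
    row_totals = [sum(matrix[i]) for i in range(k)]                       # classified totals
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]  # reference totals

    overall = correct / total
    # Agreement expected by chance, from the products of the marginal totals.
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    kappa = (overall - chance) / (1 - chance)

    users = [matrix[i][i] / row_totals[i] for i in range(k)]
    producers = [matrix[j][j] / col_totals[j] for j in range(k)]
    return overall, kappa, producers, users


# Hypothetical 3-class error matrix for illustration only.
example = [
    [45, 3, 2],
    [4, 40, 6],
    [1, 7, 42],
]
overall, kappa, producers, users = accuracy_stats(example)
print(f"overall accuracy = {overall:.3f}, kappa = {kappa:.3f}")
```

Kappa discounts the agreement that the row and column totals alone would produce by chance, which is why it is always somewhat lower than overall accuracy for an imperfect map.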

Among the five classifiers, ML performed better than any of the other four classifiers for all the eight mosaics. MAHD was superior to the other three classifiers in all cases except in one case where MD was better and in two cases where SAM was better. MD had similar performance to SAM, while SCM was the worst among the five classifiers. Between the two overlay functions, the feather function was better than the simple overlay. Among the three color correction methods, no color correction appeared to be better than color balancing or histogram matching, although the differences among the three were marginal. The overall accuracy values using all 32 images were slightly higher than those using only 10 images for three of the four color correction and overlay function combinations. Based on these results, ML applied to either the 10-image or 32-image mosaic created using no color correction and the feather overlay function produced the best classification maps. Table 2 summarizes the accuracy assessment results for the five classification maps generated from the content-based mosaics. Similarly, ML performed best, and SCM had the lowest overall accuracy and overall kappa values among the five classifiers. The best overall accuracy was 91.3% for the four-band mosaic, slightly higher than the best overall accuracy for the position-based four-band mosaic (90.4%). The advantage of the NIR band is clearly seen from the difference in overall accuracy between the four-band mosaic and the RGB composite (91.3% versus 83.8%, respectively). The CIR or NIR–red-green composite had a similar overall accuracy to that of the four-band mosaic. It also had the highest overall accuracy among the four three-band composites, indicating that a single CIR camera is as effective as the combination of an RGB and an NIR camera. 
Table 2. Classification accuracy assessment results for mosaicked four-band and three-band images created from 32 pairs of RGB and NIR images using the content-based approach for a cropping area.
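
To make the classifier comparison more concrete, the sketch below contrasts the decision rules of two of the simpler classifiers, MD and SAM, in their textbook form. It is an illustration under stated assumptions, not the Erdas Imagine implementation: the function names, class names, and four-band signature values are all hypothetical. MD assigns a pixel to the class whose mean signature is nearest in Euclidean distance, whereas SAM assigns it to the class whose mean signature forms the smallest angle with the pixel vector.

```python
import math


def minimum_distance(pixel, signatures):
    """Pick the class whose mean signature is closest in Euclidean distance."""
    return min(signatures, key=lambda name: math.dist(pixel, signatures[name]))


def spectral_angle(a, b):
    """Angle in radians between two spectral vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    # Clamp to [-1, 1] to guard against floating-point overshoot.
    return math.acos(max(-1.0, min(1.0, dot / norm)))


def spectral_angle_mapper(pixel, signatures):
    """Pick the class whose mean signature has the smallest spectral angle."""
    return min(signatures, key=lambda name: spectral_angle(pixel, signatures[name]))


# Hypothetical mean signatures (blue, green, red, NIR), not values from this study.
signatures = {
    "dense_canopy": (30, 50, 30, 200),
    "sparse_canopy": (60, 80, 90, 120),
}

# A darker pixel with the same spectral shape as dense canopy (scaled by 0.4).
pixel = (12, 20, 12, 80)

print(minimum_distance(pixel, signatures))
print(spectral_angle_mapper(pixel, signatures))
```

In this constructed case the two rules disagree: the angle-based rule ignores overall brightness and matches the pixel to the signature with the same spectral shape, while the distance-based rule is pulled toward the signature with closer absolute values. ML and MAHD additionally use the class covariance, which this sketch omits.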

While overall accuracy indicates the overall performance of all classes as a whole in a classification map, producer’s and user’s accuracies are more meaningful for the individual classes. Producer’s accuracy of a class indicates the probability of actual areas for that class being correctly classified, and user’s accuracy of a class indicates the probability that areas classified as that class on the map actually represent the class on the ground. Tables 3 and 4 present the error matrices and accuracy measures for the ML-based classification maps for the position-based four-band mosaic and the content-based four-band mosaic, respectively. Producer’s accuracy varied from 79% for grain sorghum to 97% for winter wheat for the position-based mosaic and from 86% for watermelon and grain sorghum to 97% for winter wheat for the content-based mosaic, whereas user’s accuracy ranged from 75% for winter wheat to 95% for noncrop for the position-based mosaic and from 79% for grain sorghum to 95% for noncrop for the content-based mosaic. The content-based mosaic had slightly higher overall accuracy and kappa values than the position-based mosaic.

Table 3. An error matrix and accuracy measures for an ML-based classification map for a mosaicked four-band image created from 32 manually georeferenced images with no color correction and the feather overlay function for a cropping area.
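
As a worked check of these two definitions, the sketch below recomputes the cotton accuracies from the point counts that the text reports for the two ML-based maps (reference and classified cotton totals, and the misclassified points by class). The helper name `class_accuracies` is hypothetical; the counts are taken from the discussion of Tables 3 and 4.

```python
def class_accuracies(reference_total, omitted, classified_total, committed):
    """Return (producer's, user's) accuracy in percent for one class.

    reference_total:  points verified on the ground as the class
    omitted:          reference points of the class assigned to other classes
    classified_total: points labeled as the class on the map
    committed:        points labeled as the class that belong to other classes
    """
    correct_from_reference = reference_total - sum(omitted)
    correct_from_map = classified_total - sum(committed)
    # Both routes must arrive at the same diagonal cell of the error matrix.
    assert correct_from_reference == correct_from_map
    producers = 100 * correct_from_reference / reference_total
    users = 100 * correct_from_map / classified_total
    return producers, users


# Position-based mosaic: 276 reference cotton points, 266 classified as cotton.
position = class_accuracies(276, [5, 6, 12, 10], 266, [2, 1, 20])

# Content-based mosaic: 276 reference cotton points, 277 classified as cotton.
content = class_accuracies(276, [2, 6, 3, 14], 277, [2, 1, 1, 22])

print("position-based: producer's %.1f%%, user's %.1f%%" % position)
print("content-based:  producer's %.1f%%, user's %.1f%%" % content)
```

The omission error is 100 minus the producer's accuracy and the commission error is 100 minus the user's accuracy, so the same counts also yield the roughly 12% and 9% errors quoted for the position-based map.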

Note: Overall accuracy = 90.4%. Overall kappa = 0.860.

Table 4. An error matrix and accuracy measures for an ML-based classification map for a four-band mosaic created from 32 pairs of RGB and NIR images using the content-based approach for a cropping area.

Note: Overall accuracy = 91.3% and overall kappa = 0.873.

For the position-based mosaic, cotton had a producer’s accuracy of 88.0% and a user’s accuracy of 91.4%. These values indicate that 88% of the cotton areas on the ground were correctly identified as cotton, whereas 91% of the estimated cotton areas on the classification map were actually cotton. In other words, the classification map missed 12% (omission error) of the cotton areas on the ground, while about 9% (commission error) of the pixels classified as cotton on the classification map actually belonged to the other classes. Of the 276 points verified on the ground as cotton, 5 points (1.8%) were misclassified as corn, 6 (2.2%) as winter wheat, 12 (4.3%) as watermelon, and 10 (3.6%) as noncrop on the classification map. On the other hand, of the 266 points classified on the classification map as cotton, 2 (0.8%), 1 (0.4%), and 20 points (7.5%) were actually corn, grain sorghum, and noncrop, respectively. The producer’s and user’s accuracies for corn and noncrop were better than those for the other crops. It should be noted that some of these misclassifications were due to the small inclusions in the dominant class. If the whole field was considered as one unit, the classification accuracy would improve. For the other classes, winter wheat had a high producer’s accuracy of 97% and a low user’s accuracy of 75%. This indicates that although 97% of the winter wheat areas on the ground were correctly identified as winter wheat, only 75% of the areas called winter wheat on the classification map were actually winter wheat. In other words, winter wheat areas on the classification map overestimated the actual winter wheat areas. For the content-based mosaic, the producer’s and user’s accuracies for cotton were both close to 91%, indicating 91% of the cotton areas on the ground were correctly identified as cotton and 91% of the areas called cotton on the classification map were actually cotton. 
Of the 276 points verified on the ground as cotton, 2 points (0.7%) were misclassified as corn, 6 (2.2%) as winter wheat, 3 (1.1%) as watermelon, and 14 (5.1%) as noncrop on the classification map. Moreover, of the 277 points classified as cotton on the classification map, 2 points (0.7%) actually belonged to corn, 1 (0.4%) to grain sorghum, 1 (0.4%) to watermelon, and 22 (7.9%) to noncrop. Similarly, the producer’s and user’s accuracies for corn and noncrop were generally better than those for the other crops.

Tables 5 and 6 give area estimates of cotton and noncotton classes from the ML-based classification maps for the eight position-based mosaics and the five content-based mosaics. The eight position-based mosaics provided very similar area estimates for cotton, ranging from 2220 to 2454 ha or 23.0% to 25.4% of the total area, although the four position-based mosaics using all 32 images provided slightly higher estimates than those using only 10 images. In practice, using only 10 images can significantly reduce the time required for image alignment and georeferencing. The area estimates for cotton based on the content-based four-band mosaic, the CIR composite, and the RGB composite (24.7% to 25.4%) were similar to those based on the position-based mosaics. However, the estimates based on the NIR–red-blue and NIR–green-blue composites (21.4% and 21.0%) were 3% to 4% lower. As different classification maps had varying overall accuracy values as well as producer’s and user’s accuracy values, the variation in area estimates was expected.

Table 5. Area estimates of cotton and noncotton classes from ML-based classification maps for mosaicked images created from georeferenced four-band images using different combinations of color correction methods and overlay functions for a cropping area.

Note: Total area = 9650 ha.

Table 6. Area estimates of cotton and noncotton classes from ML-based classification maps for mosaicked four-band and three-band images created from 32 pairs of RGB and NIR images using the content-based approach for a cropping area.
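
The area percentages quoted for cotton follow directly from the class areas and the 9650-ha total. The sketch below shows that arithmetic, together with the conversion from a pixel count to hectares at the 10-m pixel size of the aggregated maps. The function names are hypothetical, and the pixel count of 242,400 is back-calculated here from the reported 2424 ha for illustration; it is not a figure given in the paper.

```python
def class_area_ha(pixel_count, pixel_size_m):
    """Convert a count of square pixels to hectares (1 ha = 10,000 m^2)."""
    return pixel_count * pixel_size_m**2 / 10_000


def area_share_percent(class_area, total_area):
    """Share of the total area occupied by one class, in percent."""
    return 100 * class_area / total_area


TOTAL_AREA_HA = 9650  # total area reported in the table notes

# Back-calculated pixel count (assumption): 242,400 pixels of 10 m x 10 m.
cotton_ha = class_area_ha(242_400, 10)

print(cotton_ha, round(area_share_percent(cotton_ha, TOTAL_AREA_HA), 1))
print(round(area_share_percent(2453, TOTAL_AREA_HA), 1))
```

The first line corresponds to the best position-based estimate and the second to the content-based four-band estimate discussed in the text.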

Note: Total area = 9650 ha.

The best position-based mosaic created from all 32 images using no color correction and the feather overlay function provided an area estimate of 2424 ha or 25.1% for cotton. In comparison, the best content-based four-band mosaic provided a very similar area estimate of 2453 ha or 25.4%. These numbers could be used as the best estimates for cotton. It should be noted that although it is important to estimate the cotton production area, it is more important to identify cotton fields for the boll weevil eradication program. Considering the small inclusions of minor crop classes or noncrop areas in each crop field on the classification maps, further scrutiny of these crop fields may be justified. However, cotton fields could generally be correctly identified from the classification maps if cotton was the dominant class in the fields. Nevertheless, ground surveys are always necessary for verification. Although the imaging area () in this study was relatively small, the approaches can be applied to large cotton growing areas with more diverse cover types. However, as larger areas tend to have more crop variability and cover diversity, classification accuracy may decrease. To improve classification accuracy, it is more appropriate to divide a large imaging area into several smaller subsets and classify the subset images separately based on the signatures derived from individual subset images.

4. Conclusions

This study demonstrated the accuracy of various methodologies for early identification of cotton fields using aerial RGB and NIR imagery. Two image mosaicking approaches and five image classifiers were evaluated and compared for crop identification and area estimation. Results showed that cotton fields could be accurately identified at relatively early growth stages using mosaicked aerial imagery. 
Mosaics created from the position- and content-based approaches were effective for cotton identification, provided that proper color correction methods and overlay functions were used for the position-based approach. In this study, the combination of no color correction and the feather overlay function produced the best results. The position-based mosaicking approach provided accurate results but is laborious and time-consuming. If this approach is used, a subset of images with 20% to 30% overlaps may be selected for mosaicking to reduce the image processing time. Although the content-based approach requires additional photogrammetry software, such as Pix4DMapper, it is almost fully automatic except for the minimal manual intervention to add a small number of GCPs. In this study, the time it took for the position-based approach was 2 to 3 weeks, compared with 3 to 4 h for image processing and another 2 to 3 h to collect and add GCPs for the content-based approach. Moreover, the orthomosaics from the content-based approach are more accurate in both geographic position and image content. Among the five classifiers examined, ML performed better than MD, MAHD, SAM, and SCM. As ML is probably the most commonly used classifier and can be found in almost all image processing software packages, it will be the best choice for this application. Considering imaging cost and weather constraints, single-date imagery is sufficient for cotton identification as cotton is planted after other major crops. As aerial imagery is relatively cheap to acquire and can be acquired any time weather permits, mosaicked aerial imagery will be a useful tool for boll weevil eradication program managers to quickly and efficiently identify cotton fields and potential areas for volunteer and regrowth cotton plants. 
As more satellite imagery is becoming available for free or at a low cost, research is needed to examine both high resolution and moderate resolution satellite imagery for early identification of cotton fields.

Acknowledgments

This project was partly funded by Cotton Incorporated in Cary, North Carolina. The authors wish to thank Denham Lee and Fred Gomez of U.S. Department of Agriculture-Agricultural Research Service, College Station, Texas, for acquiring the aerial imagery for this study, Christopher Maderia, a former graduate student of Texas A&M University at College Station, Texas, for assistance in image alignment and georeferencing, and Derrick Hall, Cody Wall, and Jose Perez of U.S. Department of Agriculture-Agricultural Research Service, College Station, Texas, for ground measurements and verification. Mention of trade names or commercial products in this article is solely for the purpose of providing specific information and does not imply recommendation or endorsement by the U.S. Department of Agriculture. USDA is an equal opportunity provider and employer.

References

Anonymous, “2014 boll weevil eradication update,” (2017) https://www.aphis.usda.gov/plant_health/plant_pest_info/cotton_pests/downloads/bwe-map.pdf (January 2017).

J. K. Westbrook et al., “Airborne multispectral identification of individual cotton plants using consumer-grade cameras,” Remote Sens. Appl.: Soc. Environ., 4, 37–43 (2016). http://dx.doi.org/10.1016/j.rsase.2016.02.002

K. R. Summy et al., “Control of boll weevils (Coleoptera: Curculionidae) through crop residue disposal: destruction of subtropical cotton under inclement conditions,” J. Econ. Entomol., 79(6), 1662–1665 (1986). http://dx.doi.org/10.1093/jee/79.6.1662

R. A. Ryerson, P. J. Curran and P. R. Stephens, “Agriculture,” in Manual of Photographic Interpretation, 2nd ed., 365–397, American Society for Photogrammetry and Remote Sensing, Bethesda, Maryland (1997).

D. R. Oetter et al., “Land cover mapping in an agricultural setting using multiseasonal thematic mapper data,” Remote Sens. Environ., 76, 139–155 (2001). http://dx.doi.org/10.1016/S0034-4257(00)00202-9

T. Murakami et al., “Crop discrimination with multitemporal SPOT/HRV data in the Saga Plains, Japan,” Int. J. Remote Sens., 22(7), 1335–1348 (2001). http://dx.doi.org/10.1080/01431160151144378

J. Schmedtmann and M. L. Campagnolo, “Reliable crop identification with satellite imagery in the context of common agriculture policy subsidy control,” Remote Sens., 7, 9325–9346 (2015). http://dx.doi.org/10.3390/rs70709325

A. Ozdarici and M. Turker, “Field-based classification of agricultural crops using multi-scale images,” in Proc. First Int. Conf. on Object-Based Image Analysis (OBIA’06) (2006).

H. Xie et al., “Suitable remote sensing method and data for mapping and measuring active crop fields,” Int. J. Remote Sens., 28(2), 395–411 (2007). http://dx.doi.org/10.1080/01431160600702673

C. Yang et al., “Using high resolution QuickBird imagery for crop identification and area estimation,” Geocarto Int., 22(3), 219–233 (2007). http://dx.doi.org/10.1080/10106040701204412

C. Yang, J. H. Everitt and D. Murden, “Using high resolution SPOT 5 multispectral imagery for crop identification,” Comput. Electron. Agric., 75, 347–354 (2011). http://dx.doi.org/10.1016/j.compag.2010.12.012

M. Turker and A. Ozdarici, “Field-based crop classification using SPOT4, SPOT5, IKONOS and QuickBird imagery for agricultural areas: a comparison study,” Int. J. Remote Sens., 32(24), 9735–9768 (2011). http://dx.doi.org/10.1080/01431161.2011.576710

D. Akkaynak et al., “Use of commercial off-the-shelf digital cameras for scientific data acquisition and scene-specific color calibration,” J. Opt. Soc. Am. A, 31, 312–321 (2014). http://dx.doi.org/10.1364/JOSAA.31.000312

C. Yang et al., “An airborne multispectral imaging system based on two consumer-grade cameras for agricultural remote sensing,” Remote Sens., 6(6), 5257–5278 (2014). http://dx.doi.org/10.3390/rs6065257

H. Song et al., “Comparison of mosaicking techniques for airborne images from consumer-grade cameras,” J. Appl. Remote Sens., 10(1), 016030 (2016). http://dx.doi.org/10.1117/1.JRS.10.016030

J. Zhang et al., “Evaluation of an airborne remote sensing platform consisting of two consumer-grade cameras for crop identification,” Remote Sens., 8, 257 (2016). http://dx.doi.org/10.3390/rs8030257

C. Yang and W. C. Hoffmann, “A low-cost dual-camera imaging system for aerial applicators,” Agric. Aviat., 42(5), 44–47 (2015).

C. Yang and W. C. Hoffmann, “A low-cost single-camera imaging system for aerial applicators,” J. Appl. Remote Sens., 9, 096064 (2015). http://dx.doi.org/10.1117/1.JRS.9.096064

H. W. Bischof, W. Schneider and A. J. Pinz, “Multispectral classification of Landsat-images using neural networks,” IEEE Trans. Geosci. Remote Sens., 30, 482–490 (1992). http://dx.doi.org/10.1109/36.142926

D. Lu and Q. Weng, “A survey of image classification methods and techniques for improving classification performance,” Int. J. Remote Sens., 28(5), 823–870 (2007). http://dx.doi.org/10.1080/01431160600746456

A. Mathur and G. M. Foody, “Crop classification by support vector machine with intelligently selected training data for an operational application,” Int. J. Remote Sens., 29(8), 2227–2240 (2008). http://dx.doi.org/10.1080/01431160701395203

D. C. Duro, S. E. Franklin and M. G. Dubé, “A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery,” Remote Sens. Environ., 118, 259–272 (2012). http://dx.doi.org/10.1016/j.rse.2011.11.020

M. I. Pedley and P. J. Curran, “Per-field classification: an example using SPOT HRV imagery,” Int. J. Remote Sens., 12(11), 2181–2192 (1991). http://dx.doi.org/10.1080/01431169108955251

A. J. W. De Wit and J. G. P. W. Clevers, “Efficiency and accuracy of per-field classification for operational crop mapping,” Int. J. Remote Sens., 25(20), 4091–4112 (2004). http://dx.doi.org/10.1080/01431160310001619580

C. Conrad et al., “Per-field irrigated crop classification in arid central Asia using SPOT and ASTER data,” Remote Sens., 2, 1035–1056 (2010). http://dx.doi.org/10.3390/rs2041035

J. M. Peña-Barragán et al., “Object-based crop identification using multiple vegetation indices, textural features and crop phenology,” Remote Sens. Environ., 115, 1301–1316 (2011). http://dx.doi.org/10.1016/j.rse.2011.01.009

J. Peña et al., “Object-based image classification of summer crops with machine learning methods,” Remote Sens., 6, 5019–5041 (2014). http://dx.doi.org/10.3390/rs6065019

I. L. Castillejo-González et al., “Object- and pixel-based analysis for mapping crops and their agro-environmental associated measures using QuickBird imagery,” Comput. Electron. Agric., 68, 207–215 (2009). http://dx.doi.org/10.1016/j.compag.2009.06.004

ERDAS Field Guide, Intergraph Corporation, Huntsville, Alabama (2013).

R. G. Congalton and K. Green, Assessing the Accuracy of Remotely Sensed Data: Principles and Practices, Lewis Publishers, Boca Raton, Florida (1999).

Biography

Chenghai Yang is an agricultural engineer with the U.S. Department of Agriculture-Agricultural Research Service’s Aerial Application Technology Research Unit, College Station, Texas. He received his PhD in agricultural engineering from the University of Idaho in 1994. His current research is focused on the development and evaluation of remote sensing technologies for precision agriculture and pest management. He has authored or coauthored 130 peer-reviewed journal articles and is a member of a number of national and international professional organizations.
